How cache works on a NAS

If I get a QM2-4P-284 card, can I install NVMe-speed SSDs in the normal ports for SSDs?

If I use it as cache and copy something from my portable SSD to the NAS, does it go to the SSDs first or straight to the HDDs? I'm mainly thinking about the copy speed.

My plan is to use the 4 SSDs as cache (because with only 8TB there could be too little space for all our projects), 4 HDDs for the current projects, and 4 HDDs to mirror the projects. Will this work? Does it make sense?

With the QM2-4P-284 you get four NVMe M.2 slots. You can use this storage as a separate volume for editing, as cache, or even as part of a tiered storage solution. NVMe SSDs are several times faster than regular SATA drives.

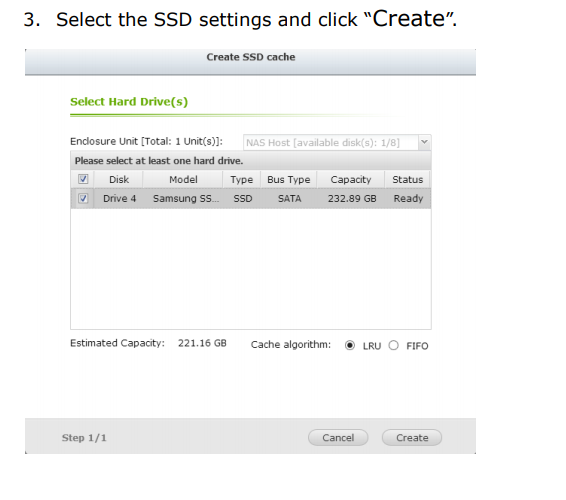

When setting up cache on a NAS, it is worth getting the settings right. The cache algorithm, LRU or FIFO, is an important choice. Once the cache fills up, LRU mode deletes the least recently used data from the cache, while FIFO mode deletes the oldest data instead. For video editing, LRU will be more useful but will require more system resources.

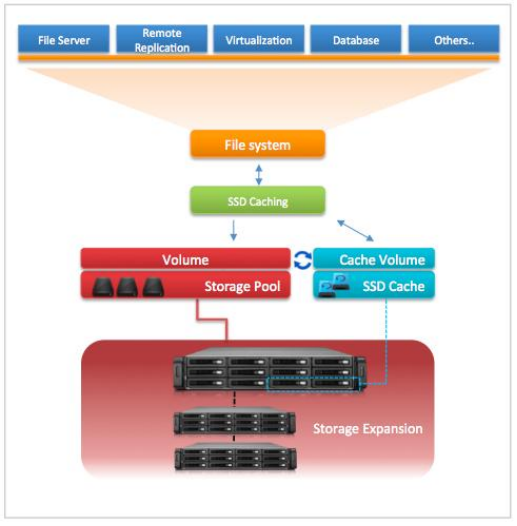

Cache works like this.

SSD cache technology

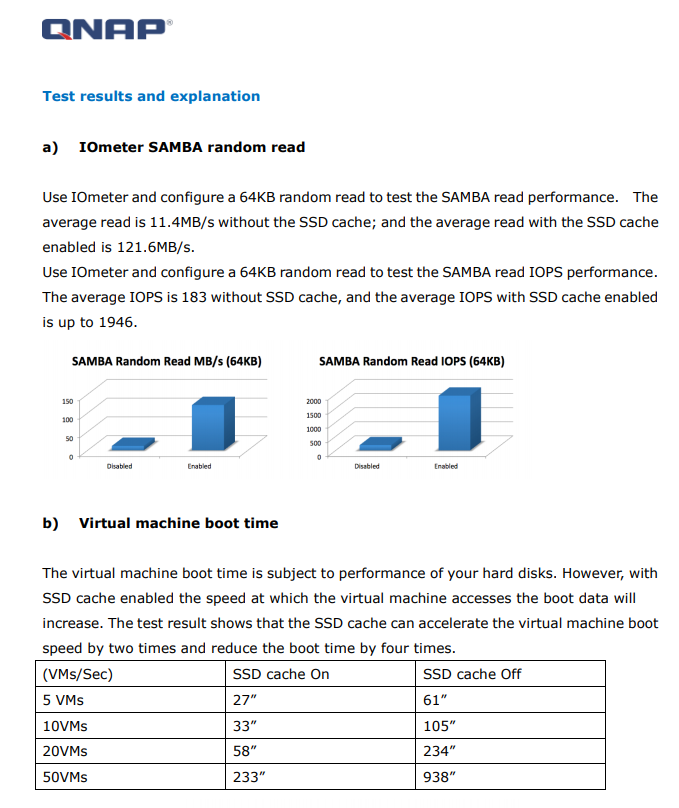

QNAP solid-state drive (SSD) cache technology is based on disk I/O read caching. When the applications of the Turbo NAS access the hard drive(s), the data is also stored on the SSD. When the same data is accessed by the applications again, it is read from the SSD cache instead of the hard drive(s). Commonly accessed data is therefore kept in the SSD cache, and the hard drive(s) are only accessed when the data cannot be found in the SSD cache.

SSD cache data access method

When the CPU needs to process data, it will follow the steps below:

1. Check CPU Cache

2. If not found in CPU Cache, check RAM

3. If not found in RAM, check SSD Cache.

4. If not found in SSD Cache, get from hard drives, and copy to SSD Cache.
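The lookup order above can be illustrated with a minimal Python sketch. It is only a model of the four steps, not QNAP's implementation: each tier is a plain dictionary, and the function name `read_block` and the block key `clip_001` are hypothetical.

```python
def read_block(key, cpu_cache, ram, ssd_cache, hdd):
    """Return (data, source), checking the tiers from fastest to slowest."""
    tiers = (("CPU cache", cpu_cache), ("RAM", ram), ("SSD cache", ssd_cache))
    for name, tier in tiers:
        if key in tier:
            return tier[key], name
    # Miss in every faster tier: read from the hard drives
    # and copy the block into the SSD cache for next time (step 4).
    data = hdd[key]
    ssd_cache[key] = data
    return data, "HDD"


cpu_cache, ram, ssd_cache = {}, {}, {}
hdd = {"clip_001": b"video frames"}

first = read_block("clip_001", cpu_cache, ram, ssd_cache, hdd)
second = read_block("clip_001", cpu_cache, ram, ssd_cache, hdd)
print(first[1], "->", second[1])  # HDD -> SSD cache
```

The first read has to go to the hard drives, and the repeat read is served from the SSD cache. That is why a read cache helps with data that is accessed more than once.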

Since the SSD supports high-speed data transfer and has no mechanical properties or moving parts, if the applications require more random read requests, the SSD cache can significantly improve access speeds.

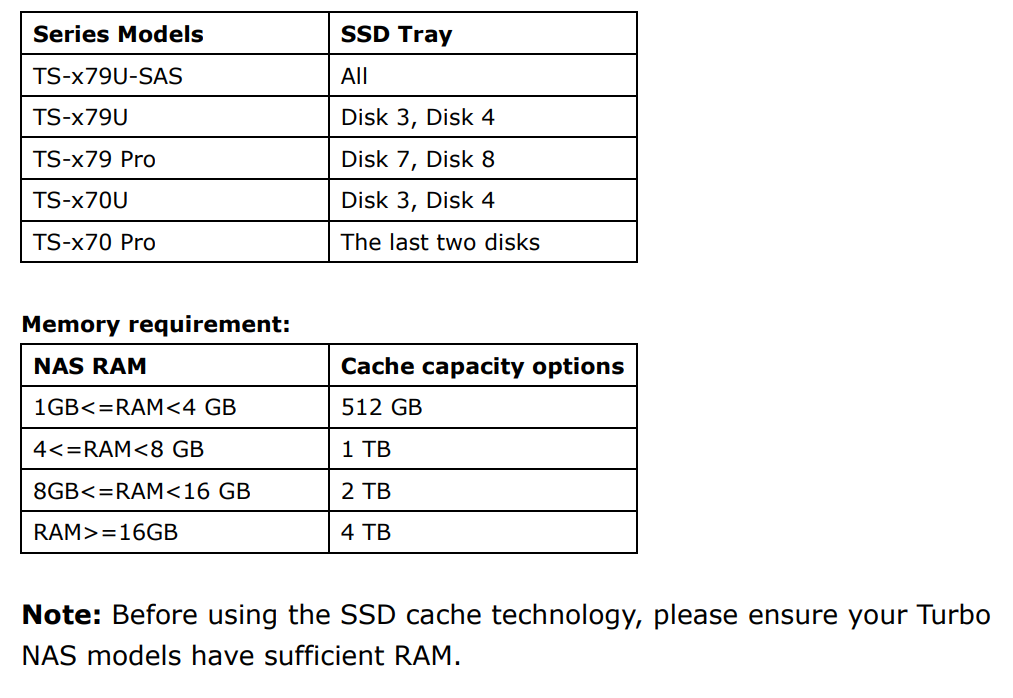

QNAP SSD cache technology provides two algorithms:

(1) LRU (Default): Higher hit rate, but requires more CPU resources.

When the cache is full, LRU discards the least recently used items first. As the system needs to track the cached data to ensure the algorithm always discards the least recently used data, it requires more CPU resources but provides a higher hit rate.

(2) FIFO: Requires fewer CPU resources, but has a lower hit rate.

When the cache is full, FIFO discards the oldest data in the cache. This reduces the hit rate but does not require as many CPU resources.
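To see why the two policies give different hit rates, here is a small Python simulation. It is a toy sketch under assumed conditions, not a benchmark of a real NAS: the cache holds only two blocks, the access trace is made up, and the function name `hit_rate` is hypothetical.

```python
from collections import OrderedDict


def hit_rate(accesses, capacity, policy):
    """Simulate a cache with the given eviction policy ("LRU" or "FIFO")."""
    cache = OrderedDict()  # front = next block to evict
    hits = 0
    for block in accesses:
        if block in cache:
            hits += 1
            if policy == "LRU":
                # Extra bookkeeping on every hit: mark as most recently used.
                cache.move_to_end(block)
        else:
            if len(cache) >= capacity:
                cache.popitem(last=False)  # evict the block at the front
            cache[block] = True
    return hits / len(accesses)


# Made-up trace: block "A" is reused constantly, the others only rarely.
accesses = ["A", "B", "A", "C", "A", "D", "A", "E", "A", "B"]
lru = hit_rate(accesses, 2, "LRU")
fifo = hit_rate(accesses, 2, "FIFO")
print(f"LRU: {lru:.0%}, FIFO: {fifo:.0%}")  # LRU: 40%, FIFO: 20%
```

On this trace LRU keeps the frequently reused block "A" in the cache, while FIFO eventually evicts it just because it was loaded early. The `move_to_end` call on every hit is the extra tracking work that LRU has to do. Real hit rates depend entirely on the workload.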

Applications and benefits

Database: MySQL, MS SQL Server, etc.

Virtual machine: VMware, Hyper-V, XenServer, etc.

The SSD transmission speed is high, but the unit cost is higher than that of the hard disk drive (HDD). Using the SSD cache technology, we can significantly improve the IOPS and I/O speed, and maintain a relatively low unit cost in order to satisfy the demand for utilized space and transmission speed.
