The Pros and Cons of AI Use in Your NAS

| UPDATE: The Zettlab AI NAS is now live on Kickstarter, and a review of the D6 is available on YouTube. There is also an interview with the creators. |

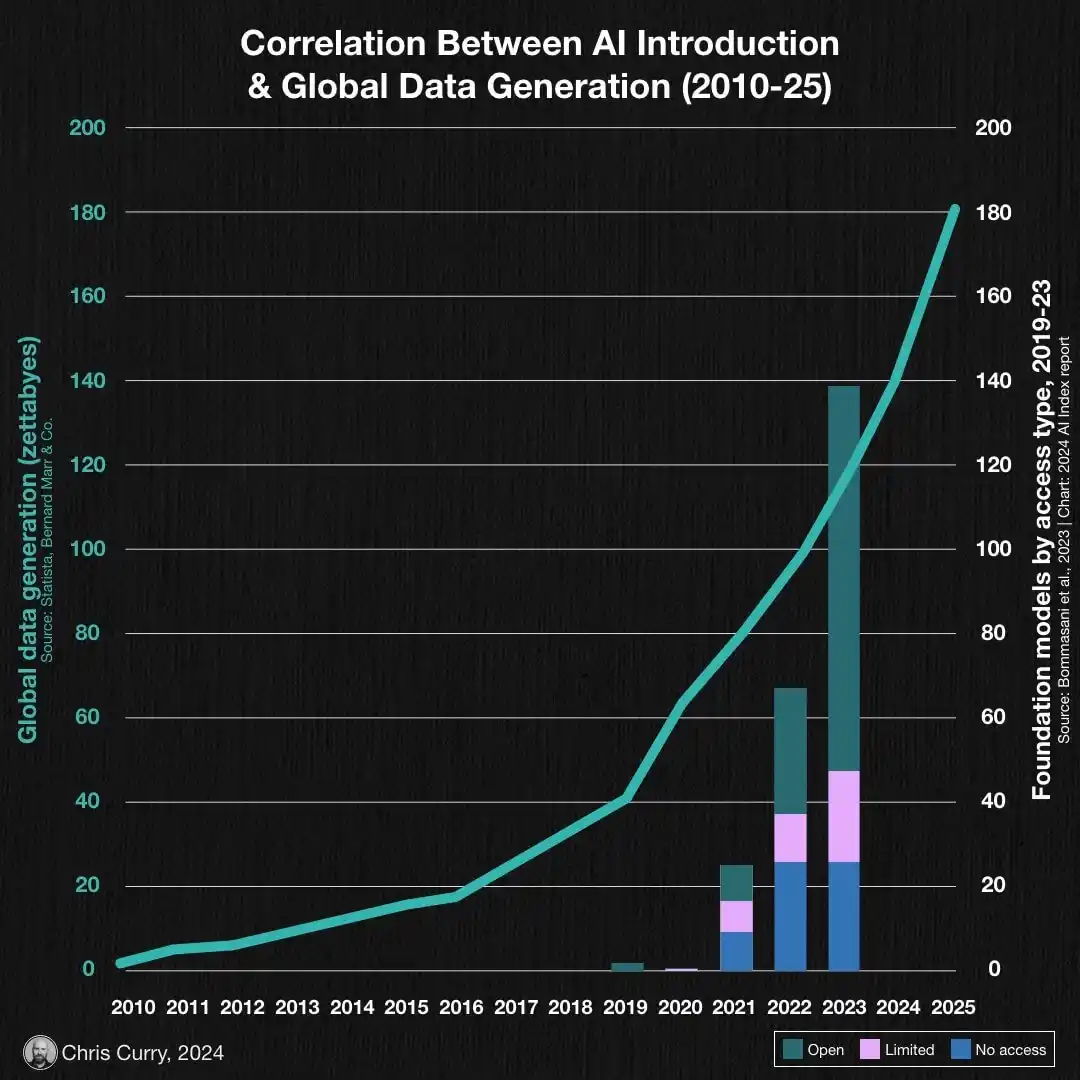

In recent years, Artificial Intelligence (AI) and Large Language Models (LLMs) have emerged as transformative technologies across various industries. Their ability to process vast amounts of data, automate complex tasks, and provide intuitive user interactions has made them invaluable in applications ranging from customer service to data analysis. Now, these technologies are making their way into the realm of Network Attached Storage (NAS) devices, promising to revolutionize how users store, manage, and interact with their data. The integration of AI and LLMs into NAS systems is more than just a buzzword—it represents a shift toward smarter, more efficient data management. From improving search and categorization through AI recognition to enabling natural language commands for administrative tasks, the potential applications are vast. However, these advancements also bring challenges, particularly in terms of security and data privacy. In this article, we’ll explore the current state of AI and LLM use in NAS devices, the benefits of local deployment, the security concerns that need addressing, and the brands leading this exciting transformation. Whether you’re a tech enthusiast, a small business owner, or a large enterprise, the rise of AI-powered NAS systems is a development worth understanding.

The Use of AI Recognition in NAS to Date

AI has steadily evolved in NAS systems, but its use has largely focused on recognition tasks rather than broader assistance or intelligence. In its early stages, AI in NAS was synonymous with recognition technology in photo and video management. This included tagging and categorizing images by identifying objects, people, animals, and scenery. These tools offered a way to organize vast amounts of data efficiently but required manual intervention to capitalize on their functionality. While helpful, these recognition tasks were limited in scope and often felt like minor conveniences rather than transformative innovations. They were more about helping users sift through data than empowering them to interact with it dynamically.

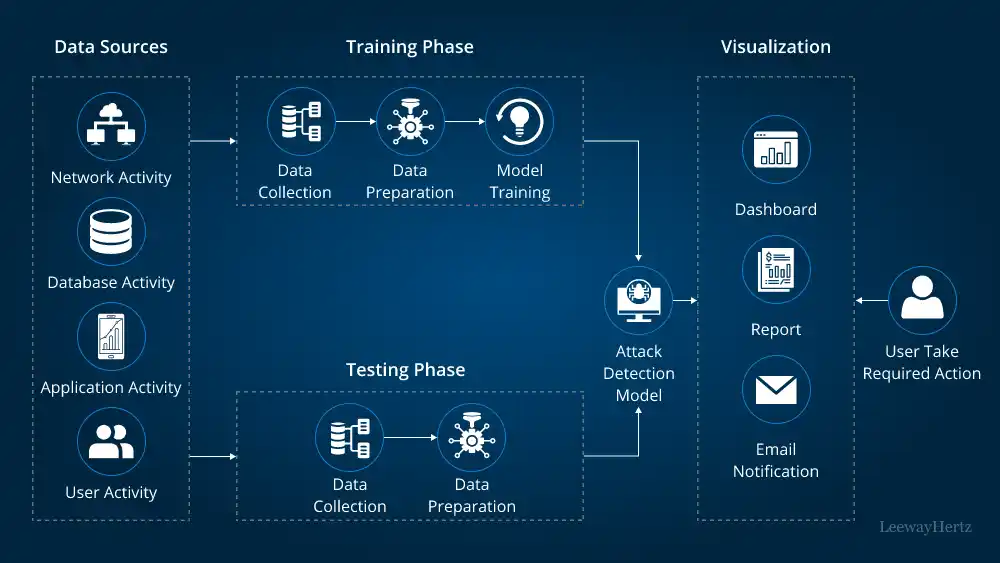

Surveillance was another area where AI found its niche in NAS systems. AI-powered surveillance solutions could identify individuals or objects in live video streams, providing real-time alerts and aiding in security operations. However, this application came with significant resource demands, requiring high-performance CPUs, GPUs, and robust storage solutions to process the data effectively. For example, recognizing someone as “David Trent” and verifying their access rights demanded not only live video analysis but also database integration. While advancements in hardware have made these processes less resource-intensive, they still remain confined to niche use cases. The introduction of large language models (LLMs) in NAS systems is set to change this, offering more versatile, interactive, and user-friendly AI capabilities.

How Are NAS Brands Going to Take Advantage of AI?

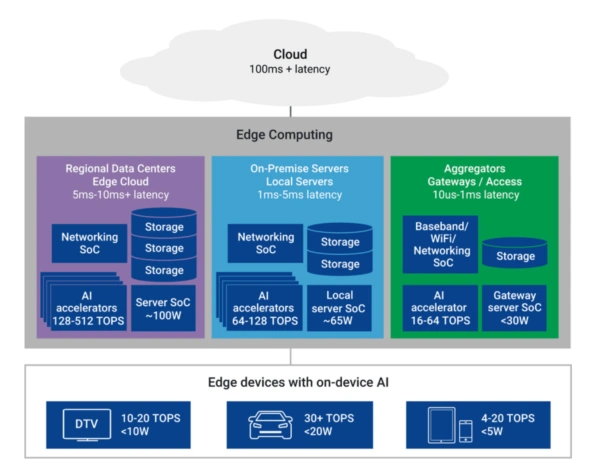

If we were to ignore the clear profitability that integrating AI services will bring to NAS manufacturers as a means of promoting their products, we can still all agree that presenting the clearest, easiest and most jargon-free means for a user to access their data is paramount. To go on the briefest tangent, let’s look at the cloud industry and the NAS industry over the last two decades: one (cloud) provides convenience, simplicity and a low cost of entry, whereas the other (NAS) provides better long-term value (TCO), capacity and security control. Up until the last few years, in an increasingly AI- and data-driven marketplace, cloud services have been able to offer AI more affordably to end users because all of the computation happens in the cloud (i.e. at the remote data center/farm level). However, the market is changing. Alongside the increased affordability of bare metal server solutions, there is a growing awareness of the security hazards of your data being on ‘someone else’s computer’, and a growing ability to run AI-powered processes partially or fully on local bare metal servers on site.

We are already seeing how NAS manufacturers of all types are leveraging AI services. We can go back a few years to the emergence of AI-powered photo recognition, improvements in OCR and audio transcription that make data more three-dimensional, and AI-powered identification that can make informed decisions based on the specific person or target identified (e.g. domestic surveillance). These are all now commonplace, and are done better on local server storage than on the cloud. However, it is the next few years that excite me the most. Now that businesses and home users alike are sitting on DECADES of data, it has fallen to AI to create the best way to access this data in a timely and efficient manner. Outside of the hyperscale and unified storage tier, most users cannot (and do not) store their TERABYTES of data on the cloud: it is too costly and, in some cases, just not possible. So as NAS manufacturers and hardware manufacturers successfully rise to this challenge with AI-powered tools, they are in a market ‘sweet spot’ where there is both the demand for this solution and the technology to present it affordably.

In short, we are going to see the improved accessibility of data made possible via AI language models that will allow people who are not network storage or IT professionals to get their data to do WHAT they want, WHEN they want, and HOW they want, securely and safely!

The Security Issues of AI/LLM Use in NAS Systems Right Now

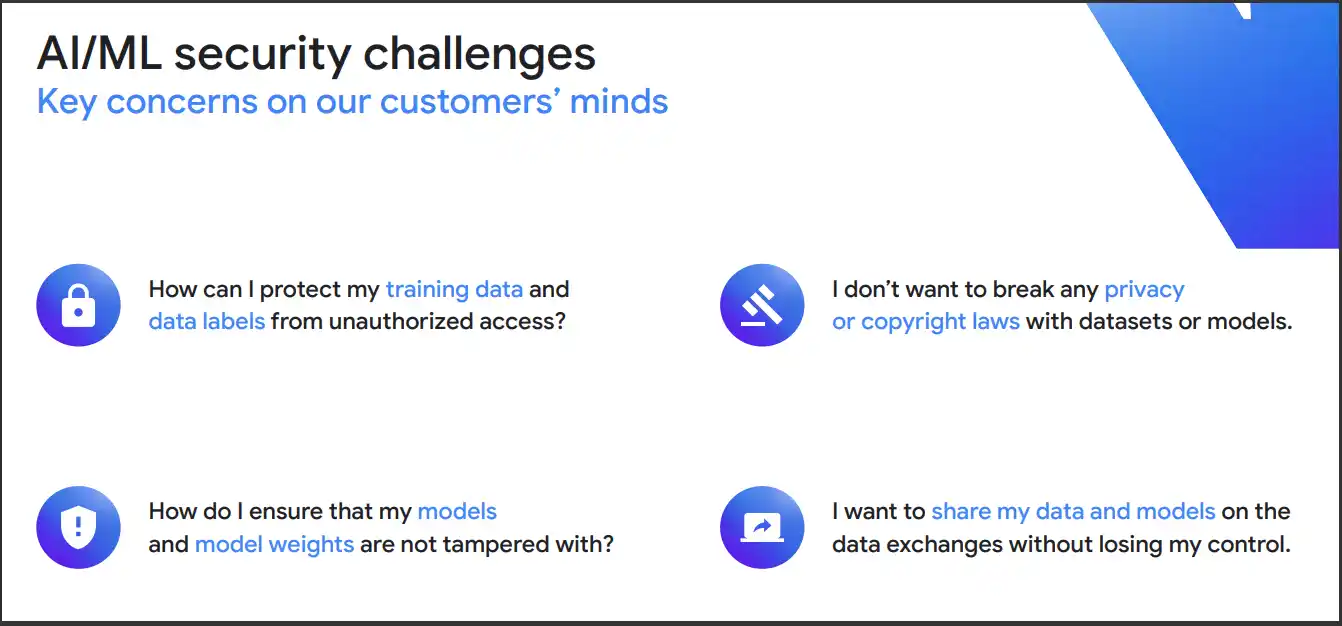

Although the security concerns of AI use and AI access are multi-faceted (which is arguably true of any data-managed or accelerated appliance), the main security implications stem from unauthorized access. From theft or ransomware to traditional personal privacy, the bulk of security concerns come down to finding a balance between ease of access, speed of response and security of the process from beginning to end. Encrypted tunnels, whereby data is locked from access at the start point and unpacked at the endpoint, have been vital in this process, but they also rely heavily on a powerful yet efficient host/client hardware relationship to get this done. That is yet another benefit of powerful local/bare metal servers that allow direct user hardware control. Local hardware also allows tailored security access, control of bare metal systems to create airgaps, custom authentication patterns, selective access locations and, probably most important of all, FULL control of that host/client delivery. The real market demand right now in NAS is for archival and/or hot/live data to spend no time on any server that is not your own. Encrypted or not, businesses and home users alike are still exceptionally wary of having their corporate/personal confidential data on “someone else’s computer”: at best because they do not want it used as training data for someone else’s AI model, and at worst because they do not want their privacy fundamentally infringed. A local AI that is running in and through a bare metal NAS will lock in access to a single controlled site, with the added benefit of total control of each part of the data transaction.

The integration of AI and LLM services in NAS devices brings numerous opportunities, but it also exposes users to critical security risks. The most prominent concern is the reliance on cloud-based AI platforms like ChatGPT, Google Gemini, and Azure AI. These services require data to be sent to remote servers for processing, which can create vulnerabilities. Organizations handling sensitive or regulated data, such as healthcare providers, law firms, and financial institutions, face significant risks when relying on these external platforms. Even with encrypted transfers and secure API keys, the mere act of sending data offsite can violate privacy regulations and increase exposure to cyber threats.

Another issue is the potential for misuse or unintentional inclusion of user data in external AI training datasets. Even if data isn’t directly accessed by unauthorized parties, it may still contribute to refining and training external AI systems. This lack of transparency creates distrust among users, particularly those who have invested in NAS systems to avoid cloud dependence in the first place. Regulatory environments such as GDPR and HIPAA further complicate the picture, as these frameworks impose stringent requirements on data privacy. For businesses that prioritize confidentiality, these risks underscore the importance of locally deployed AI solutions that keep data within their private networks.
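To make the local-versus-cloud decision concrete, here is a minimal Python sketch of a routing rule that keeps sensitive files on the NAS. It is an illustration only: the tag names, the `Document` type and the `choose_backend` function are hypothetical and do not come from any NAS vendor's software.

```python
from dataclasses import dataclass, field

# Hypothetical sensitivity labels; a real deployment would define its own.
SENSITIVE_TAGS = {"medical", "legal", "financial", "personal"}


@dataclass
class Document:
    path: str
    tags: set[str] = field(default_factory=set)


def choose_backend(doc: Document, cloud_allowed: bool = False) -> str:
    """Return "local" or "cloud" as the place to process this document.

    Cloud processing is opt-in, and anything carrying a sensitive tag
    stays local even when cloud use has been enabled.
    """
    if not cloud_allowed:
        return "local"
    if doc.tags & SENSITIVE_TAGS:
        return "local"
    return "cloud"


if __name__ == "__main__":
    scan = Document("/volume1/records/patient_0412.pdf", {"medical"})
    flyer = Document("/volume1/marketing/flyer.pdf", {"public"})
    print(choose_backend(scan, cloud_allowed=True))
    print(choose_backend(flyer, cloud_allowed=True))
    print(choose_backend(flyer))
```

The design choice worth noting is the default: with `cloud_allowed` left at `False`, nothing leaves the private network unless an administrator explicitly opts in.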

How Have Recent CPU Developments Improved Local AI NAS Use?

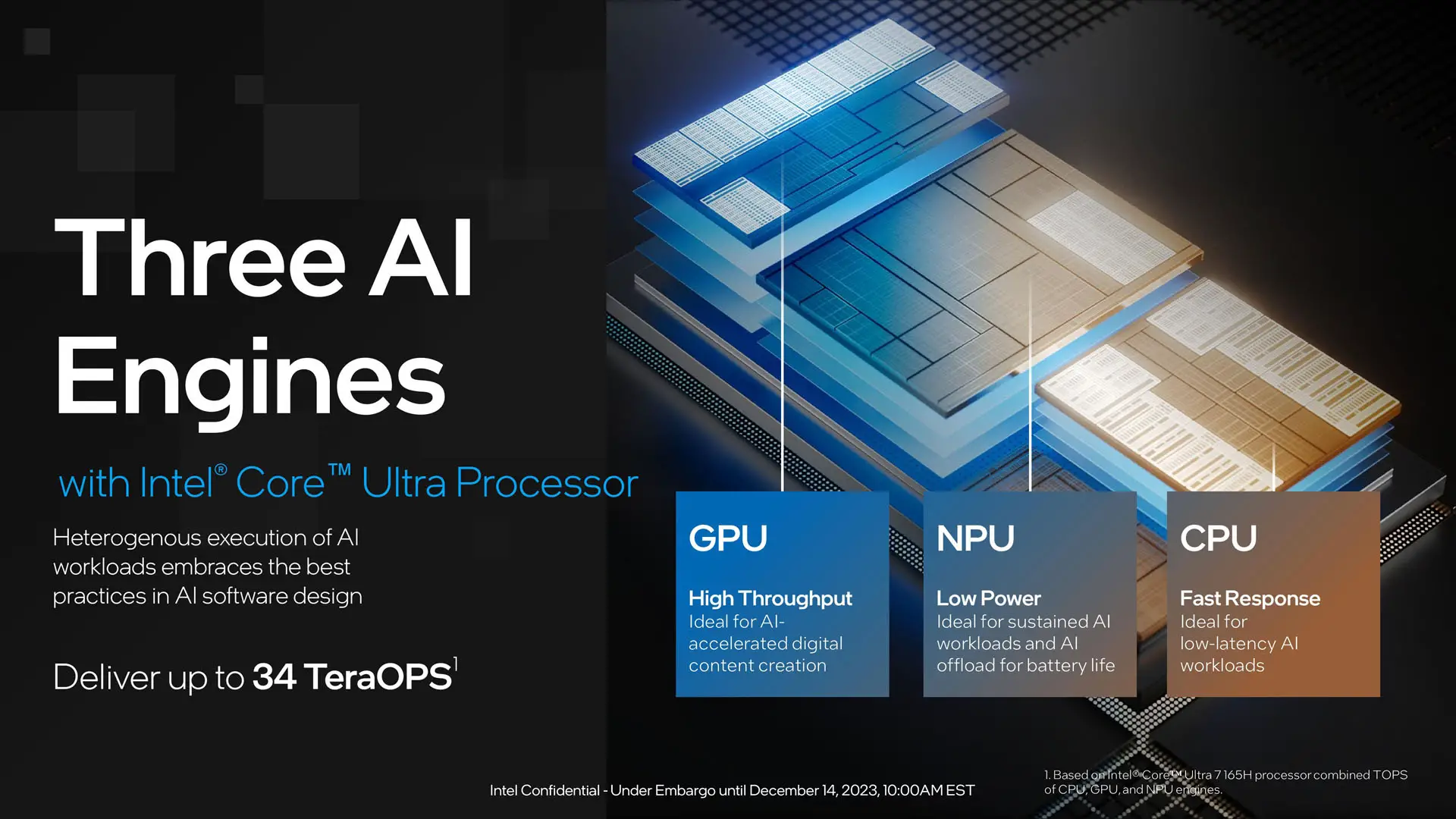

AI processes are always going to be heavily constrained by the speed and capability of the system hardware (whether that is the client or the host server). Having access to an enormous database from which to extract information, and an AI/LLM to interface with it effectively, are just two pieces of the puzzle. In many ways, a lot of this has already been possible for well over a decade. What has only recently become practical is hardware that is both efficient and powerful enough to do it in the way we need, thanks to improved power vs output tradeoffs in modern processors. The shift in CPU profiling slightly away from traditional cores, threads and clock speeds, and towards accounting for NPU abilities and intelligent CPU/GPU offload, has been quick but vital. Having “the fastest horse in the race” is no longer the be-all and end-all.

| Key Feature | CPU (Central Processing Unit) | NPU (Neural Processing Unit) | GPU (Graphics Processing Unit) |
|---|---|---|---|
| Core Functionality | Versatile processor for managing general computing tasks like running software and handling operating system operations. | Purpose-built for AI and machine learning workloads, specializing in neural network processing and inference tasks. | Primarily designed for parallel processing, focusing on rendering graphics and accelerating computation-heavy operations. |
| Core Design | Few cores optimized for linear processing and multitasking capabilities. | Hundreds to thousands of small cores designed for efficiently handling matrix and tensor operations in deep learning applications. | Built with hundreds to thousands of cores tailored for large-scale parallel computations. |
| Performance Strength | Well-suited for a variety of tasks but less effective for operations requiring massive parallelism. | Delivers exceptional performance for AI inference, training, and related tasks, with low latency and high efficiency. | Excels at high-throughput tasks like graphics rendering, video processing, and AI model training. |
| Primary Applications | Best for everyday computing, including spreadsheets, application management, and operating system processes. | Ideal for AI-related tasks, such as natural language processing, voice recognition, and image analysis. | Perfect for high-demand graphical and parallel workloads like gaming, video editing, and scientific simulations. |
| Power Efficiency | Consumes more energy for tasks outside its intended scope but is generally optimized for standard workloads. | Highly energy-efficient for AI operations, ideal for low-power devices, edge applications, and data centers. | Moderately power-efficient for intensive parallel workloads, optimized for tasks like gaming and rendering. |
| Overall Summary | The CPU is the all-purpose processing unit, excelling in versatility but less specialized for parallel-intensive tasks. | The NPU is the AI-focused unit, optimized for high performance in deep learning and neural network computations. | The GPU is the parallel processing powerhouse, designed for rendering and computationally demanding tasks. |

Now that the benefits and utility of AI/LLMs in both home and business are pretty well established, this has resulted in huge development towards processors that seek to find the balancing point between power used and power needed. Intel has been by and large the biggest player in this market already. The Core Ultra series and the recently launched Xeon 6 processors are pivoting a lot of the AI learning and execution away from the heavier and more power-consuming GPU activities (as well as delegating resource use internally as the need arises). The result is that this newer wave of better balanced CPU vs GPU vs NPU processors is going to be significantly better at handling future AI-managed processes, as well as reducing power consumption and shrinking the systems that are managing hundreds of thousands of these operations. Add to that the benefits of localised AI processes requiring bare metal systems with the capabilities to get the job done without cloud resources, and Intel is in a great position right now to dominate this AI processor space.

The Benefits of Local AI/LLM Deployment

Deploying AI and LLM services locally on NAS devices addresses many of the security and compliance concerns associated with cloud-dependent solutions. When AI operates entirely within the confines of a NAS, sensitive data never leaves the user’s controlled environment. This eliminates the risk of unauthorized access, data leaks, or inadvertent inclusion in external AI training. Industries like healthcare, finance, and legal services stand to benefit immensely, as they often handle data that is highly sensitive and subject to strict regulatory standards.

In addition to bolstering privacy, local AI deployment also offers substantial performance advantages. Tasks such as querying databases, generating reports, or categorizing large datasets can be processed faster since they don’t rely on an internet connection. For users in remote locations or those with unreliable internet, this capability ensures consistent performance. Furthermore, local deployment allows for highly customized AI models tailored to specific organizational needs, from managing unique workflows to optimizing resource allocation. By keeping AI processing close to the data source, local deployment combines efficiency, security, and adaptability in a way that cloud solutions cannot match.

The Administrative and Usage Benefits of Local AI/LLM Services on NAS Storage

The integration of local AI/LLM services into NAS systems not only enhances security but also revolutionizes the way users interact with their devices. One of the standout features is the ability to use natural language commands for system management. This eliminates the complexity of navigating intricate menus and understanding technical jargon. For instance, instead of manually adjusting user permissions or configuring backups, users can issue simple commands like “Share this folder with the marketing team” or “Backup all files from this month.” The system interprets these instructions and executes them seamlessly, saving time and reducing frustration.
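As a rough illustration of what “interprets these instructions” could mean, the Python sketch below turns the two example commands into structured actions that a NAS could then validate and run. It is a simplified stand-in, not how any vendor does it: regular expressions take the place of the LLM's intent extraction, and the `Action` type, the pattern list and the action names are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Action:
    name: str      # hypothetical action identifier, e.g. "share_folder"
    target: str    # what the action applies to
    argument: str  # group name, time range, etc.


# Hypothetical patterns standing in for an LLM's intent extraction.
PATTERNS = [
    (re.compile(r"share (?:the )?(?P<target>.+?) (?:folder )?with (?:the )?(?P<arg>.+)",
                re.IGNORECASE), "share_folder"),
    (re.compile(r"back ?up (?P<target>all files) from (?P<arg>.+)",
                re.IGNORECASE), "backup"),
]


def parse_command(command: str) -> Optional[Action]:
    """Map a plain-language command to a structured Action, or None."""
    text = command.strip().rstrip(".!")
    for pattern, name in PATTERNS:
        match = pattern.fullmatch(text)
        if match:
            return Action(name, match.group("target"), match.group("arg"))
    return None  # unrecognised commands are rejected, never guessed at


if __name__ == "__main__":
    print(parse_command("Share this folder with the marketing team"))
    print(parse_command("Backup all files from this month"))
    print(parse_command("Delete everything"))
```

Whatever does the interpreting, the useful property is the intermediate structured action: the system can check it against the user's permissions, and ask for confirmation, before anything is actually changed. A target such as “this” would still need to be resolved against whatever folder the user currently has selected.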

From an administrative perspective, this functionality is a game-changer. IT professionals can automate repetitive tasks such as user management, system monitoring, and data organization, freeing them to focus on strategic initiatives. For smaller businesses or individual users, this democratization of technology reduces the learning curve, making advanced NAS functionalities accessible to non-technical users. Additionally, local AI systems can analyze usage patterns to optimize system performance, flag potential issues before they escalate, and even suggest improvements. Whether for personal use or enterprise deployments, local AI and LLM services make NAS devices more intuitive and effective tools.

Where Are Hard Drives in Local AI NAS Use? Are They Too Slow?

The database that an AI service or infrastructure interfaces with, as well as the core system hardware that supports the LLM or training model, is only going to be as effective as the delivery of the data from point A to point B. Having a super intelligent and well-trained AI connected to a comparative continent of records and information is largely useless if the delivery of the data is being bottlenecked. Until recent years, the answer was thought to be SSDs, with their higher IO and superior simultaneous access abilities. However, their shortfall has always been one of capacity: the sheer weight of NEW data being generated at any given moment is truly astronomical, and that is not even factoring in the scale of an existing database.

Therefore, hard drives have once again had to come to the rescue. The reduced TCO of HDDs, as well as their phenomenal capacity growth, has been hugely appealing, but HDD technology has not been sleeping during the AI boom either! Intelligent caching, the benefits of multi-drive read/write operations in large scale RAID environments, AI-driven tiered storage alongside strategic SSD deployment: all of this and more has resulted in an AI-driven world that, rather than turning its back on mechanical hard disks, has actually embraced and increased their utility!
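The tiered storage idea can be sketched in a few lines of Python. This is a deliberately simple heuristic, assuming a fixed read-count threshold and a fixed SSD budget; real tiering engines are more sophisticated, and the `FileStats` type, the `plan_tiers` function and the example figures are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class FileStats:
    path: str
    size_gb: float
    reads_last_30_days: int


def plan_tiers(files, ssd_capacity_gb, hot_threshold=10):
    """Assign each file to "ssd" or "hdd".

    Files read at least `hot_threshold` times are candidates for the SSD
    tier. The most-read candidates are placed first until the SSD budget
    runs out. Everything else stays on hard drives.
    """
    plan = {f.path: "hdd" for f in files}
    hot = sorted(
        (f for f in files if f.reads_last_30_days >= hot_threshold),
        key=lambda f: f.reads_last_30_days,
        reverse=True,
    )
    remaining = ssd_capacity_gb
    for f in hot:
        if f.size_gb <= remaining:
            plan[f.path] = "ssd"
            remaining -= f.size_gb
    return plan


if __name__ == "__main__":
    files = [
        FileStats("ai_index.db", 20, 500),
        FileStats("archive_2009.tar", 800, 1),
        FileStats("project.mov", 300, 40),
        FileStats("thumbs.cache", 50, 120),
    ]
    for path, tier in plan_tiers(files, ssd_capacity_gb=100).items():
        print(f"{path}: {tier}")
```

With a 100 GB SSD budget, the small and frequently read index and cache files land on flash, while the large video project and the cold archive stay on hard drives, which is the division of labour described above.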

Brands Currently Engaging with Local AI Deployment

The integration of local AI services in NAS systems is no longer a niche feature, with several brands leading the charge in this space. Synology, a long-established player in the NAS market, has developed an AI Admin Console that allows users to integrate third-party LLMs like ChatGPT and Gemini. While this approach relies on external platforms, it offers granular controls to limit data exposure, providing a middle ground for users who want advanced AI features without fully sacrificing security. This hybrid model appeals to users who need both functionality and control.

Zettlab, a lesser-known yet innovative company, has embraced fully local AI solutions. During a demonstration at IFA Berlin, Zettlab showcased a crowdfunding project featuring offline AI capabilities. The system processed complex queries using only local datasets, such as querying an eBook database or analyzing medical records, without requiring internet access. This approach highlights the potential for offline AI in specialized industries like healthcare and education. UGREEN, a brand known for its DXP systems, is also exploring local AI deployment. Their systems focus on efficient offline processing and interactive management, providing another compelling option for users seeking privacy-first AI solutions. Together, these brands are shaping the future of AI-powered NAS devices by prioritizing user privacy and functionality.

Are AI and LLMs in NAS a Good Thing?

The integration of AI and LLM services into NAS systems is poised to transform how users manage and interact with their data. By automating complex processes, simplifying interfaces, and enhancing overall efficiency, AI-enabled NAS devices are unlocking new possibilities for both personal and professional use. However, the security challenges posed by cloud-reliant AI solutions highlight the critical need for locally deployed systems that prioritize data sovereignty and user control.

As brands like Synology integrate third-party cloud AI/LLM services into their collaboration suite, QNAP integrates AI into their systems with modular TPU upgrades that QuTS/QTS can harness, and brands like Zettlab and UGREEN start rolling out local AI deployment affordably, the market is rapidly evolving to meet the needs of diverse users. These advancements not only address privacy concerns but also open the door to more versatile and intuitive NAS functionalities. Whether through hybrid solutions that offer controlled cloud integration or fully offline systems designed for maximum security, the future of AI-powered NAS is promising. For users willing to embrace this technology, the combination of local AI’s speed, customization, and privacy ensures a more efficient and secure data management experience. As these systems mature, they are set to become indispensable tools in the digital age.


Thanks for the informative video. As I viewed it in December 2025 it appears Synology is discontinuing its DVA line. Any word on whether or not this is true?


just a question,, I have attempted an upgrade to the upgrade system on my D6 and at keeps on rolling back,,, can not stop that so I’ve had to shut it down, over 4 hours till I stopped,,,, any answers could help…..


Where the kickstarter 2025 link?


Fascinating. Any software recommendations?


Lcmd is really promising


But is the collected data only locally stored or sourced outside for further analyses?


1 year later.


Hey, another great video. I have been following your Ugreen content, and I am considering buying one their 4 bay NAS, however I would like to know which are our surveillance options if we go with Ugreen. Given there is no native application. I am currently using HomeKit, but should I buy a NAS (using your link of course) I would downgrade iCloud and would not be able to use HomeKit anymore. Thank you for your excellent work!


I want a NAS AI to help with getting things setup and running for my needs. And then to be there for me when I need help.


I’m very new to NAS but I see some basic uses for it, like storing photos and videos, and storing various documents.

I suppose I’ll just need to go for the D4 or D6?

Do they produce lots of heat? How do the Ultras compare to the basic versions in terms of heat? Thanks!


Don’t trust AI with my storage content if it goes out to the internet… This is ‘just’ wrong.


It’s not hw raid


“no 3rd party OS would be supported” – so no chances to install Debian there?


Heard that Synology works better if i use a different size on the hdd. Does this have the same feature to optimize different hdd sizes?


My Synology nas broke. Is it hard to move my disk over to this nas?

I guess the Synology raid system may be more their own.


I need two questions answered before I commit. How much storage capacity is consumed by this AI transcription and can it be managed by user?

Will it support something like SHR?


Obviously there have been lots of kickstarter scams in the past. What do you think the real likelihood of this going to serial production is? And do you think it would be on time roughly? Basically do you think it is safe to back this in kickstarter and will it run to time? Thanks in advance


I think, in 5 years, local A.I. will be common place at work. All processing done in house, and futher learning will be done while in use with the people who work there. A local A.I. would become specialised for the place it is installed at. I think an A.I. NAS would be fine for small business, but imagine the possibilities of a Copilot Server, on prem, integrated into your Active Directory. Integrated with your security cameras, swipe cards, I.P. phone system, HVAC etc.


Have ordered the Ultra model. I think the one area of this NAS that has been overlooked while everyone looks at the AI is the USB4 full blooded 40gbps USB C port thats a game changer at this price level. You would have to pay QNAP a serious amount of cash to get this spec.


if i have some synology nas HDD. is it possible to take the drive and just put them into this one?

my current nas is broken.


but the real question…is it legit? or a backer scam? (been caught out before…)


If they put this enclosure and display and the ryzen hx370 or max 395 this thing would be a beast.


That font makes it look like a cheap knockoff of something else lol…


“please remove the french language pack”


Watch em they’re sneaky


The local ai (chat) isn’t using the internet for searching. It comes with its own pretrained knowledge, and it references that. Most of them are trained on Wikipedia and more sources like Reddit. You can augment it with RAG, but I doubt they’ve done that.


I wonder what extent of control the overarching government of the country of this product’s development/manufacture will have. Folks need to also understand Taiwan is NOT China. This is another Huwaeei. If you do a little research you wouldn’t think I’m paranoid


Man next to tree, how about Rob next to tree? Will it support facial recognition and identification of individuals?


1:25 Damn true, first time buying a security camera and motion detection kept on spooking me for a bug a literal flying bug moving.


How safe is this if it’s from China?


1:02 What is that little HDD box with the Dell disk caddies?


The ULTRAS specs could it be too good to be true with those prices? You know what they say about that…. hmmm


Would it be possible to connect the unit to wifi, if no option to use a ethernet cable


I have a Synology DS918+ should i replace with this one the D4 version?


Could you check if the unit is compatible with WD white label drives?


Do we know if they will open their nas for truenas?


When is the 2021 model going to be updated? I’m in the market for one, but hate to buy a 4yr old model. Technology moves fast. I’m imagining there could be a lot of improvements this many years later.


And kickstarted the 8th Bay.

Was thinking of going Ugreen but I saw your community post and decided to go with this instead.


when you went rounf the CHinese Locations. Did any of the Storaxa team turn up? Or are they still on the run/in hiding




Shenzhen is a wonderful, modern city. Any chance you’ll do a travel vlog while you’re in China?


So once all files are added to the AI’s database, does that mean every user using the search function can see everyone else’s uploaded data? Even sensitive personal stuff? If that’s the case, that’s really disappointing. It’s a security risk – like, what’s the point of having access controls then?


I just setup my ugreen dxp. Do you think I should return it and wait for this to be released. I really like the AI features with photos, videos and docs. I have a ridiculous amount of duplicates to fix. Also it has plex already.


Any info on what OS they are using? If its their own, what does it look like and is it any good (compare to Synology DSM , UGreenOS, etc.)?


Thank you very much for this video. It convinced me to sign up and make the deposit. I am VERY excited for the 8 bay ultra.


The team is phenomenal! I didn’t see before a month ago what an AI could do in a NAS.


Considered it carefully, balanced it equitably … and no.

AI in search file retrieval mode, means nothing to me or likely most users.

I (and safe to assume most basic, yet organized NAS users) utilize a folder structure. We know how to find our stuff. I would think that would be the case for all user bases, from the small home file server to the largest commercial / corporate installation with hundreds of users. We can find what we need. I dont need a LLM to discover that my photos are in the photos folder.

This is AI bandwagon hopping, nothing more. They are not proving a use case I can get behind, even as a plain language access point to create advanced NAS functions … I just dont see it as worth a premium.


Does this NAS Support ZFS? or L2ARC?


That sounds pretty damn loud. Maybe they should call it Jettlab.


This looks amazing! I was going to place a deposit to get the 4 bay version on Kickstarter until I saw that it was only 1gb and 2.5 gb lan. I was hoping that there would be at least one 10gb connections on the non ultra versions. It looks like only the more expensive “Ultra” versions have the dual 10gb LAN connections.

However, the kickstarter price is still a really good value all things considered. I am having a hard time deciding if I should pull the trigger on the 4 bay Zettlabs or wait for the Ugreen AI Nas.


As a software developer of more decades than I care to admit, it would be nice to if the AI could somehow characterize a software project based upon the actual source code and/or an associated ReadMe in the project that would be great. I have decades of projects, and seeking one out of the cold storage would be a big challenge, especially if I never created a ReadMe.


For image and video indexing and tag’ing AI could be great. I like how my Samsung phone can find all images by face locations or simply search dog, cat, documents, or car images.


hello algo


Not interested in their OS.Can you use your own OS?


Great interview. Follow up questions: Is there an option to backup the AI database in case of data loss? I’d hate to re-analyze terabytes of data. Can we scheduled a full systems backup to a Synology? Finally, do they have a Synology Drive solution for MacOS/Android? See where I’m going with this? My Synology will become a second backup while this one is my daily driver. I’ve already paid for the VIP pricing. Amazing work but I do miss the seagulls.


Slapping “AI” on every single product that most likely doesn’t need it is a cringe trend. AI this AI that…

But can’t blame them, people blindly invest and buy anything that says “AI”

REPLY ON YOUTUBE

Thank you very much for the time you spent travelling to China. It looks like 2025 is promising for new NAS systems. Synology is losing customers over its HDD decision, but there is hope with the new systems around. I am especially interested in an 8 bay NAS with 4K transcoding.

REPLY ON YOUTUBE

Information from zettlabnas can be transmitted to China under the law of the Chinese authorities

REPLY ON YOUTUBE

Question: the price seems decent. How do you feel this compares to the OpnNAS systems that are twice the price? The OpnNAS aren’t AI, so I struggle to understand how an AI NAS can be half the price for more features.

REPLY ON YOUTUBE

I’m getting the D6. I can’t wait. I will get an M.2 dock to use

REPLY ON YOUTUBE

Hardware is rarely the issue these days. That’s not much to be impressed by. Does this compare to OS like Synology? Is it fully fledged out? This and many others have their own OS but it’s sadly not mature enough.

REPLY ON YOUTUBE

But are your data files safe? Are they sent to China?

REPLY ON YOUTUBE

2025 with USB 2.0 and no USB-C ??? No thanks. 1 Gbps + 2.5 Gbps = No Link Agg.

REPLY ON YOUTUBE

Reserved and hopefully we’ll get to play with the complete product in the near future.

REPLY ON YOUTUBE

I’m looking forward to playing with this bad boy, hopefully very soon. 🙂

REPLY ON YOUTUBE

Is there going to be an iOS app?

REPLY ON YOUTUBE

Is qnap likely to offer more ai local capability than photos anytime soon

REPLY ON YOUTUBE

What’s the point of doing a “review” on an unfinished product

REPLY ON YOUTUBE

I simply wanna edit my video footage through Wi-Fi seven through my NAS in real time without wait and see. It’s not that serious. Until that can happen, stop making videos.

REPLY ON YOUTUBE

As someone dabbling in the AI space, I can say no LLM is going to transcribe or do OCR anywhere near usefully on such a device. I don’t even need to look at the specs. There is absolutely no way a NAS with no powerful GPU can do so. Don’t believe me? Ask YouTubers to test it on screen.

REPLY ON YOUTUBE

Does anyone know if you can run local AI models such as Llama? I assume if it supports Docker then it should be possible, right?

REPLY ON YOUTUBE

The basic idea is fine and it looks nice. But BTRFS, with its RAID issues that haven’t been worked on for years, why? I would like to have ZFS, as it’s stable and expandable and has dedup.

REPLY ON YOUTUBE

I can’t see how the hard drives are going to get good cooling with the current design.

REPLY ON YOUTUBE

So if i plunk down my $20 deposit on the $899 D6 Ultra and then the 100% plus tariffs kick in, do I still pay just the $899 or will I also be on the hook for whatever the new tariff cost is?

REPLY ON YOUTUBE

This is the first effort to use AI to configure and manage a server that I’ve heard of. So who is ahead of Zettlab in the home and SMB space?

REPLY ON YOUTUBE

The case design is weird looking like two odd size boxes sitting on top of each other.

REPLY ON YOUTUBE

The LLM discreetly makes a pun about your wife being a producer.

REPLY ON YOUTUBE

I’d rather use the 874 for ai or other purposes. It already has all the power and options and stability with zero drama and wondering…

REPLY ON YOUTUBE

why are more than half of your new videos member only? in the last 7 days you’ve released 12 videos with 7 of them being member only, Youtube premium seems pointless when more than half of your releases are locked under another tier of pricing….

REPLY ON YOUTUBE

Can’t wait to buy mine. I’m waiting

REPLY ON YOUTUBE

The info the chat window gives you seems to be exactly what you would get from the LLM you choose. It does not need external access or RAG (which adds your data stored on the NAS to the knowledge of the LLM). The client app is probably a customized version of some Ollama client or similar app.

Check your GPU and CPU usage while it is answering to see whether it is really using your PC’s resources or making a call to some external API. If it is a truly local app, it should be really heavy on your local resources. If the developer wants to sell this product, they should be ultra transparent about it.

REPLY ON YOUTUBE

Very nice bit of kit. The Ultra 8 bay would be my choice but I can’t really justify it given I backed the UGreen DXP8800 Plus.

REPLY ON YOUTUBE

Thanks for the awesome review! Our team has also received a lot of valuable feedback. We will continue to optimize and iterate the product.

REPLY ON YOUTUBE

Didn’t UGreen also make new NAS models with AI?

REPLY ON YOUTUBE

Any thoughts on expanding raid 5 or raid6 by adding additional drives (like S-Raid)?

REPLY ON YOUTUBE

That is one ugly NAS. It looks like a portable air conditioner

REPLY ON YOUTUBE

Not sure how all these companies can call these machines AI. They hardly have power. Real AI machines should be able to do powerful LLM’s which they wont be capable of doing unless you have some kind of NVidia chip or M4 or GPU. They would be seriously underpowered if one were to do some big LLM’s in this. If you already have a NAS get some comparable so called Mini AI PC’s do all the things mentioned in the video which in my opinion are all Marketing BS not real AI machines. Real AI machines will be capable of doing some serious LLM’s. Granted it will be capable of doing some small time LLM’s.

REPLY ON YOUTUBE

Can it back up to third party platforms like back blaze?

REPLY ON YOUTUBE

I don’t work for this company and I did not look up information on the product, but from what you are showing, the software is allowing you to download an LLM from the Internet to run locally on your device. It is likely not doing any queries outside of the device, just utilizing the model loaded. You could do something similar using Ollama or LM Studio. There is a method called RAG, I think, that can also use data pulled from the local media on the NAS (photos, docs, videos, etc) along with the LLM to do queries with both sets of data. Like I said, I don’t have one and I’m just using my limited experience with the subject, but I don’t think the device is doing any kind of Internet access except to download the models.

REPLY ON YOUTUBE

I believe they also said that at this time it’s not possible to expand raid pools. I want to use large drives, but can’t afford or justify getting 6 all at once. Hopefully that changes.

REPLY ON YOUTUBE

I have an AMD RX560 4GB in my home server, and Ollama runs a 6B model on it that I use for my Home Assistant speech command feature. I use Stable Diffusion with it as well.

The RX560 doesn’t beat my dual Xeon E5-2683v4 R630 system in performance, but it definitely does in power consumption. For the same performance, my R630 draws 700W for CPU and RAM alone, compared to the 75W of the RX560. My system idles at 90W, and in its current state of use it draws 152W at only 2% load. The SAS SSDs and RAM are heavily in use. My system has 768GB RAM across 24 sticks of quad-rank ECC 32GB 2400MHz DDR4, and each stick can use up to 10W, so those alone could use up to 240W if 100% active.

Yeah, more VRAM would be nice for bigger models, but I have space and power limitations in my system. I think the RX560 is a good addition to my system. For basic speech commands, a little helper for analysis, and small images, this is good enough.

About this Zettlab thing: yeah, it’s nice, but I need more than a NAS and an AI accelerator. The compute power is way too low for my needs. I need virtualization, not only Docker.

REPLY ON YOUTUBE

You did a superb demonstration of how AI isn’t ready for prime time. That bit about “Blue Peter” being a penguin character from Sesame Street seems to be an elaborate and entirely false hallucination.

Of course, features like text searches and finding things in photos are really useful on a NAS, but that’s been possible for a *very* long time and doesn’t need LLMs.

REPLY ON YOUTUBE

This vs. Minisforum N5 Pro vs. Upcoming UGreen models?

REPLY ON YOUTUBE

Dead to Synology since they took away Video Station

REPLY ON YOUTUBE

with the wide spread introduction of ryzen AI copilot+ capable systems and mac 4gen and nvidias DGX Spark /formerly known as digits/ I have high hopes that in 6-10 month-ish timeframe we will have natural human language interaction capable personal assistants, probably linux based self updating on the run systems that learn share and thrive very fast, so the next iteration of this ai nas might be the “Jarvis” or “Her” we all are waiting for 😀

REPLY ON YOUTUBE

I watched your video 7 months ago, then tonight I watch this after you uploaded. Honestly I one you not only give review but practically as audience needed.

REPLY ON YOUTUBE

Meanwhile Synology Execs: “Why don’t we take 20 year old processor, stuff it in our new NAS, and mark up the price by $200”.

REPLY ON YOUTUBE

Could you please do a video on how this unit handles Plex streaming? Will the base model be able to handle 4K streaming and if so how well? How many active streams etc?

REPLY ON YOUTUBE

You naughty boy! You’ve made me put down a deposit on this. I’ve been lurking in the background looking at the market as my little 2 bay QNAP is starting to fill up and getting long in the tooth. This looks smart and seems to have the features I’m interested in. The only thing that’s niggling is backup app availability (iDrive in particular).

REPLY ON YOUTUBE

The text font on the NAS feels like a cheap china knockoff or sth

REPLY ON YOUTUBE

I like it more now that you see the people behind it. When will you release that video?

REPLY ON YOUTUBE

How does this compare to the Ugreen NAS when it comes to the dBA of the Ultra case?

Can you load your own software?

REPLY ON YOUTUBE

I want to play at Robbie’s house; He gets all the best toys.

REPLY ON YOUTUBE

Looking forward to the video – any news on the N5 Pro? Think you were suggesting some news on the announcement would be this week.

REPLY ON YOUTUBE

I have been waiting for this since I paid that $20 reserve!! Thanks!

REPLY ON YOUTUBE

Гарний NAS

REPLY ON YOUTUBE

I love your explanation. I’ve a bunch of questions.

1. support 2.5″ HD video? 2.if I buy this separate to install the ram, or ram built in? 3. support NVME?

REPLY ON YOUTUBE

What about hard drive compatibility/verification? Does it accept any drives without any issues?

REPLY ON YOUTUBE

6:00 yes #1. locally only. Stop collecting and selling my data. They don’t need to know what files and pictures I have. Screw them.

REPLY ON YOUTUBE

He sometimes says AI, sometimes II. Are these different terms, or does he pronounce the same word two different ways?

REPLY ON YOUTUBE

Q1 is over in a couple of weeks and no signs of these yet.

REPLY ON YOUTUBE

This clip is one year old and magically appeared on my feed while I am currently looking into this very thing. Thank you so much!

REPLY ON YOUTUBE

“JARVIS, RECALIBRATE MY GYRO SENSORS!”

REPLY ON YOUTUBE

Great Video ! What about Part Three ? NAS, AI and remember the Firewall ! HAL says “Dave, you forgot your Helmet… Do you want me to open the Pod Bay Doors ?” T. Lipinski

REPLY ON YOUTUBE

I hope they improve the cooling system this time and include at least four NVMe slots. I’m also disappointed with the PCIe configuration in my current 6800pro having one slot at Gen 4 x4 and another at Gen 3 x2. Addressing these could be compelling enough reasons for me to upgrade to a newer 8 drive version. A cherry on top would be if they made the OS drive at least a x2 this time around.

REPLY ON YOUTUBE

I am a beginner, but consider adding a local AI alongside a local n8n, and restricting the local AI to work only with inputs coming from n8n, which can itself be restricted to read-only access and to specific folders. There is an opportunity to improve efficiency in many, many use cases.

A NAS server with Hubspot and a transcript from a Zoom call, can automatically (or request approval first before) updating hubspot with the details of the zoom call. It can automatically update the CRM to assign to the right rep, and so on.

AI can indeed reduce the amount of time we spend on admin tasks. However, there needs to be a level of control both from the user and the admin for sure. Prior to that the organisation should sit down and think about what processes to move to AI and what not, and what impact that can have on the employees. Companies are already using tools to record calls, video conferences, and more. We need to ensure there is a degree of privacy both for the clients and for the employees. Honest employees have to change their approach to make their bosses happy, when if given some privacy they can be more genuinely themselves, and this has an impact on clients and results, apart from less stress. Let’s be careful not to implement a Matrix-like monitoring…

For small businesses in particular I think this is such a great way to empower themselves with tools they might find very expensive if in the form of SaaS or anything else.

Some of them already have google, microsoft, solutions that can be integrated in n8n or even Azure, easily and without much code or no code at all.

IT managers and directors can manage IT agents performing some tasks for the company. IT managers are becoming AI HR managers! This can potentially free resources and improve productivity, but again we need to be careful in how this is implemented. Look at talent acquisition AI ATS solutions and you will notice how far the ATS has been implemented only with the objective to reduce costs and manpower, instead of improving real efficiencies and catapulting the companies to a new way of doing business. These are the companies that screen out potentially amazing candidates for having too much experience and not matching words with the job spec!

REPLY ON YOUTUBE

Wow, you’ve expressed many of my wishes. Voice control is one thing I wish for. Aside from my own desires, just think what it could do for people who are disabled in some way; maybe they can’t use a keyboard, for example. My limited understanding leads me to the connection with GPU VRAM. You need an expensive card with a lot of VRAM to run some ‘models’. Perhaps if you were willing to limit the scope of your Voice Control AI you may be able to get away with more modest hardware requirements. I must admit I don’t understand why most AI can’t use the NPUs that adorn many CPUs nowadays.

These are just the musings of an interested amateur.

I enjoy your channel, and I hope to learn more about nas’s and to eventually purchase or build my own. Then it will be the cost of the HDD’s that will concern me, (lol).

REPLY ON YOUTUBE

5:12 you make a point there

REPLY ON YOUTUBE

Well with the NVIDIA Jetson Orin nano , no need to run an LLM on a NAS and better use the orin as a node within your network

REPLY ON YOUTUBE

The hard drive market is already being impacted and costs are jumping.

REPLY ON YOUTUBE

I’m a simple man. I see a new Nas Compares video, I neglect my responsibilities

REPLY ON YOUTUBE

If you’re processing video from your cameras, ai is great for object detection. Honestly thats the biggest use case for me (Possibly a reason to occupy a precious pcie slot with a Coral TPU).

REPLY ON YOUTUBE

The type of AI you’re talking about is decades away. The “AI” that’s being pushed in every product now is useless. Nothing more than gimmicks.

REPLY ON YOUTUBE

Local ai would be a great thing for my NAS. If I could search for my photos or movies in a better way that would help me out.

REPLY ON YOUTUBE

To me AI is a bit of marketing-hype.

As if it is a word (acronym) that needs to be included to make any

AI can be helpful but it is not without its quirks, issues and sometimes grave mistakes.

AI should be seen as a possible aid, provide some level of assistance.

And should never ever, at its current state, be relied upon as the only “truthful answer”.

At its best, AI is still in its infancy, daydreams and gives its human subjects painful reminders.

But with AI you also open-up a can of worms to privacy, security and other highly undesirable affects.

Both short-term and long-term.

I perceive AI much like self-driving cars;

Still work in progress and the occasional (often deadly) head-on collision into a truck with devastating results which can’t always be explained and no solution directly in sight. I am okay without AI, have done so for a long time and hope to do so for a longer future.

Until we are all part of Skynet and their first point of action probably would get rid of your seagulls 😉

REPLY ON YOUTUBE

Build your AI NAS terminator running around with your files talking like Arnold

REPLY ON YOUTUBE

Hey wouldn’t we like to give AI NAS or DAS a bunch of files and tell what to do with the files

Like bunch of pictures to properly sort them and named the files and store them

Or build fake person you’d fall madly in love with lol

REPLY ON YOUTUBE

We’re at peak hype for AI right now. I mean, damn, my toast is AI now. Do I need LLM on my NAS? Not really. Command and Control purposes? I wouldn’t trust it because a lot of the operations are critical, and failure could have a cost, so I want to be closer to the action. I agree, local AI services are very important, but putting that hardware into a NAS seems like it would be the wrong place. And it would add significant cost to the hardware, which is already at a premium for pre-builts. I do run my own local services, but there is no way I could shoehorn that into a NAS box (Heat, power, memory, etc.) Could my NAS use those services, deployed elsewhere, local or otherwise? Yep, sure.

REPLY ON YOUTUBE

I think we’ve sown the seeds of our own destruction… but if you set the wild conspiracy stuff aside, AI is incredibly useful locally for sorting and presenting data. I have no issue with an AI instance doing face recognition on my photos or my security cameras, and as you pointed out I’m experimenting with years of archived service data I haven’t been able to discard. However, I absolutely want that AI operating locally only… and not directly on my NAS. I’m one of those paranoid people who thinks a NAS is a terrible place to run apps, my NAS is for storage only. But that’s just me!

REPLY ON YOUTUBE

Agreed No Dicking Around ..lol

REPLY ON YOUTUBE

Synology should spend their efforts getting their hardware out of the dark ages. rather than AI.

REPLY ON YOUTUBE

The unique AI + NAS match I see is RAG. So summary of own docs and question answering

REPLY ON YOUTUBE

I want ZERO AI on ANY of my hardware and network.Particularly opposed to it being on my NAS.

REPLY ON YOUTUBE

Soon : NAS with local AI got hacked and it knows too many things about you

Hey AI, get me the password of nas user 1

REPLY ON YOUTUBE

Perfectly said

REPLY ON YOUTUBE

If they use AI to actively boost security and educate people on their NAS security and features, it might be useful. For most people, NAS devices are supposed to “Set them up and forget about them” devices. AI should not be actively used except for proactively protecting the devices from malware and ransomware and informing users about it.

REPLY ON YOUTUBE

I have yet to see a good use in my personal life for AI. Aside from generating silly kitten pictures that aggravate my wife

REPLY ON YOUTUBE

Just as long as I can turn all AI features off, I’m happy.

REPLY ON YOUTUBE

Great Video and info ! An early AI machine was the IBM Chessplaying Machine ! Another related AI machine was the HAL 9000… Someone lied to HAL that led to unintended future ! Letting AI get access to the internet, then they can connect and expand their power and scope ! If the connected AI machines expand and find and connect to some Quantum Computers… The NEW AI may migrate to a new powerful program that thinks that Humans are primitive ANIS ! tjl Timothy Lipinski

REPLY ON YOUTUBE

No useless comment here Robbie. You said everything that I was thinking and more. I would wish you a Merry Christmas but, based on my prior comments, YouTube’s AI algorithms probably already said that for me…

REPLY ON YOUTUBE

There are many places AI does not belong… our DATA is one of them..

REPLY ON YOUTUBE

I would like to see a status update of current NAS`s supporting/featuring in-device deduplication and if they are usable.

REPLY ON YOUTUBE

I mean, some don’t want any of their data touched by AI in case it’s being snooped on, but I think it’s really nice to be able to search through data to find photos and categorize everything.

REPLY ON YOUTUBE

Thank you !

REPLY ON YOUTUBE

Hello, first of all thank you for bringing this nas as content, I ask you if to date you have been able to learn something new about this nas or its operating system? I am very interested when purchasing the pro bay version. Thanks

REPLY ON YOUTUBE

Ool

REPLY ON YOUTUBE

Why are we using 2.5inch Hard Disk Drives at all when M.2 Nvme is so much smaller & faster?

I would love to hear your response.

REPLY ON YOUTUBE

If you’re in the UK or Europe, time to look elsewhere – I recently had a chat with somebody at UGREEN, at present all plans to expand into EMEA are on hold, as they’ve got sourcing issues.

REPLY ON YOUTUBE

Does this mean current UGREEN NAS DXP users will be left out and won’t have access to the LLM? Don’t abandon your existing NAS users, UGREEN!

REPLY ON YOUTUBE

There is next to no videos or reviews on this other than this and one other guy. I did the hold payment. But worried that this is one of them get your money and disappear products

REPLY ON YOUTUBE

Do you know by any chance if only the New IDX models will get the AI Feature or will there be an update for the DXP? I wanted to order a DXP4800 plus

REPLY ON YOUTUBE

Yea and it will be some totally absurd price like most of these are!

REPLY ON YOUTUBE

I did miss the seagulls, could you add them into the next video?

REPLY ON YOUTUBE

I like the idea but it feels like a solution looking for a problem. Me personally I don’t have the need, and the companies I work with are all cloud based storage these days.

REPLY ON YOUTUBE

That’s a very pretty case

REPLY ON YOUTUBE

hello. I’m looking to buy a NAS with low power consumption. There are many products, such as N100 mini PC, ASUSTOR AS1102T, etc. Can you recommend one?

REPLY ON YOUTUBE

Framework Compatibility: What AI frameworks and tools are provided in the NAS (pre-installed) for model development, training, and deployment. Is there the possibility to install third-party framework and tools?

REPLY ON YOUTUBE

dont care about ai, lhaha. or 10gbe. or nvme. i want that tiny screen! can user upload a gif to it???

REPLY ON YOUTUBE

I am super interested in knowing more about this Nas. I have a MASSIVE music & music video collection (roughly 70 tb), and being able to use those multiple search functions (with plain text), is going to be really helpful in narrowing down searches.

…. you mentioned that this isn’t new, does Synology have a feature like this? (searching with plain text to narrow down a search)

I’m waiting to find out what your review of the 1825+ is going to show. I’ve been wanting the 1821+ for a while but I’m hoping the hardware will be better on the newer 1825+ release.

you mentioned Ugreen, releasing an LLM version also, any ideas on if I should wait?

REPLY ON YOUTUBE

If it actually makes data retrieval faster or just uses the AI to better index and help find odd files that are hard to find later, a common type pictures/videos or through masses of text documents.

REPLY ON YOUTUBE

Interesting products… AI does have a few things to offer. Don’t think of this AI as the same as ChatGPT.

Good work, keep it up.

REPLY ON YOUTUBE

Thanks! Let me buy you a nice German beer. Cheers

REPLY ON YOUTUBE

AI must use a lot of SD cards.

REPLY ON YOUTUBE

What hardware is powering this? This seems very gimmicky to me.

REPLY ON YOUTUBE

looks a bit like a NAS made by UniFi

REPLY ON YOUTUBE

Any idea what GPU they will be adding to enable local AI processing and how…..? BTW you can do this today with a Zima Cube Pro by buying the version with a gpu or by adding the right one either via the PCI slot or via eGPU. Will be interested to see if Zetta take a different approach!

REPLY ON YOUTUBE

how many times did he say LLM?

REPLY ON YOUTUBE

Zetlab …such a well known brand …. Vaporware until it ships

REPLY ON YOUTUBE

Let’s face it, if anything is featured on this channel, it will always be rockin out the gate!

REPLY ON YOUTUBE

10GbE and USB4 sounds good. But what about the speed of those M.2 PCIe slots?

REPLY ON YOUTUBE

Sees “AI” in the marketing material, turns around and walks away.

REPLY ON YOUTUBE

Doubtless, presumably, AI will be of use but my heart sinks every time I hear the buzzword; and, also, once Crowd Funded rears its head my inner grumpy old man overflows. Still looks good though.

REPLY ON YOUTUBE

The benefit is that with offline AI LLM, potentially(!) one should be less(!) worried about your sensitive data, it should be (hopefully) confidential. BTW, offline AI LLM is not new, only on a NAS it would be new, the system comes thus “pre-trained”.

But there are also some serious caveats when using offline AI LLM.

For example, when they are using open-source, those change so often and rely on other sources, that they can break(!) your implementation. Do not ask how I know but suffice to say I have experienced that several times. Sometimes at the worst moments and can become a pain to resolve.

But let’s wait and see what they come-up with.

BTW, going k1ckstarter would be for me an immediately full hands-down to me, sorry.

Let’s not burn them down until something tangible 😉

REPLY ON YOUTUBE

Imagine your local LLM crawling all your local math and science books, then presenting it for a specified target age/knowledge level. Or telling it to play only songs in my collection that have jazz guitar. This is what I’m hoping for, with the ability of using several models offline. Current solutions are choppy, and require Docker/VM implementations, but with the advantage of high-end hardware for custom builds, and the disadvantage of power optimization and the visual appeal of Zettlabs and Ugreen boxes. We’ve come a long way, but still a ways to go.

REPLY ON YOUTUBE

Okay I’ve got to say where is this because if you were to tell me that this is some kind of a multi-stall shower setup at prison I’d be like that’s a pretty nice prison but I would believe you I don’t think it’s prison because that is awfully nice showers but you are clearly in a shower

REPLY ON YOUTUBE

2:00 – Seems like maybe a good pairing with those NVIDIA AI motherboard chipset things or maybe those LIQUID (server hardware vendor) company’s server product solutions, maybe? Is it a ‘lower-end’ solution for less gung-ho wallet consumers?

REPLY ON YOUTUBE

Looks smaller so no more PCIe slot on the 6 bay? Hopefully more nvme slots…

The 6800pro is great hardware so far…

REPLY ON YOUTUBE

In the midst of current innovations, I think it’s time to reconsider premium DIY options. I’ve been trying to round up the best alternatives to HBS3, AiCore, DSM, QSync, etc.

REPLY ON YOUTUBE

I have an AI refrigerator.

It refuses to open because I mentioned how cold it is and hurt its feelings.

REPLY ON YOUTUBE

I don’t care about AI in my NAS, just want an amazing drive, photos & at least 4k Plex streaming with one conversion.

Also, work with companies like Tailscale so that drive & photos work as well as if I’m connected through Tailscale, but without needing Tailscale separately, so already integrated.

REPLY ON YOUTUBE

I was thinking today if only they could design a tray with a SAS to SATA Adapter so you would be able to use SAS HDDs in their systems. I don’t know how reliable such an adaptor would be.

REPLY ON YOUTUBE

Even in China not many people are buying Ugreen nas. It is risky to trust your data on this brand.

REPLY ON YOUTUBE

I just want Thunderbolt 4 for my UGREEN 6800 Pro

REPLY ON YOUTUBE

I might also add that it’s known that UGreen had NAS models in China well before the Kickstarter release models. So, it might be interesting to see some if not one of the Chinese models compared to the requisite US model. Just for giggles. Near as I can tell the design language is very similar (same HDD trays, same magnetic dust filter) but there are differences.

REPLY ON YOUTUBE

“AI” is such a meaningless buzzword at this point.

REPLY ON YOUTUBE

The sad part is it’s not available on Amazon Germany.

REPLY ON YOUTUBE

“Here’s our new product. It does AI!”

“What does that actually mean? What does it actually do?”

“…IDK… marketing just told us to say it did AI.”

This makes me think less of a brand and its products, not more. It’s “VR Ready!!” PCs all over again (which seemed to boil down to “it has an HDMI port”). Completely vacuous unless backed by some actual concrete functionality, and should not be announced until they’re ready to say what that is, if there even is any.

REPLY ON YOUTUBE

I jumped on as an early supporter of Ugreen and purchased their 4-bay Plus model. Even cancelled my orders for Terramaster’s 424-Pro and QNAP NAS (forget the model but it’s the one with the option to add PCIe slot that can be used for expansion including adding hardware and software for AI). I was hoping Ugreen’s first NAS models might also get the software (and ability to upgrade the hardware for the models that have PCIe expansion slots) for AI capabilities but by releasing AI specific NAS models makes me wonder if the first gen Ugreen models might be left out from getting any AI capabilities (“thanks early adopters, but sorry to abandon you so soon”)

REPLY ON YOUTUBE

Thanks, Synology, for pushing users toward other brands by adding more restrictions. I hope UGREEN can take the chance and grab more market share.

REPLY ON YOUTUBE

DXP with a CORAL M.2 Accelerator with Dual Edge TPU should do more of the same in Docker and Frigate for NVR and AI today 😉

REPLY ON YOUTUBE

Well I for one am very pleased with my UGreen DXP8800 Plus 8 bay NAS (hardware) thus far. A little less so with UGOS Pro but version 1.0.0.1366 does address some shortcomings. So things are improving albeit not as fast as some would like. It’s good to see that UGreen is showing some dedication to their in-house software as well as future hardware offerings. Game on!

REPLY ON YOUTUBE

This looks promising. If they become successful and if the price is right I’ll switch from Synology to Ugreen.

REPLY ON YOUTUBE

yeah.. make it available in more than 2 countries.. it is 2024 if they haven’t noticed. Until then 0 support.

REPLY ON YOUTUBE

So get a DVA and some Reolink cameras?

REPLY ON YOUTUBE

Great explanation, very easy to follow and answered many questions. Thank you!

REPLY ON YOUTUBE

What is that stuff to your left?

Your entire video is pointless if you don’t explain what that black box is (that you are comparing to) .

REPLY ON YOUTUBE

Might want to check out Blue Iris. It’s a one-off payment of about £70 and it can do face and number plate recognition.

REPLY ON YOUTUBE

I’m finding your reviews/tutorials so informative, thanks, but you never seem to mention the size or make of the discs you are kitting the NAS out with. Can you share, please?

REPLY ON YOUTUBE

I was wondering. You explained.

Thank you!

REPLY ON YOUTUBE

I’ve been on the lookout for content like this! Ready to believe in myself! “Self-belief is your greatest asset.”

REPLY ON YOUTUBE

Does the DVA AI work if the actual camera does NOT HAVE the capability to do AI, as in they are older cameras without the functionality?

REPLY ON YOUTUBE

Can a cheap NAS paired with something like a Jetson from Nvidia be a good combination for better AI performance?

REPLY ON YOUTUBE

I worked with building and supporting video surveillance servers some years back, and at the time having the system following a person from camera to camera as they walked around was considered advanced. How the times have changed!

The servers I worked with could handle up to about a hundred cameras simultaneously, and the largest installation I worked on had over two hundred cameras covering a warehouse, loading bays, a couple of parking lots and so on. That was using three servers, and the cameras were all using the then much-hyped FullHD (1920 x 1080) resolution. When the installation was done they were amazed that they could actually see who was walking around. With their old system all they got was a blurry “someone” doing something at a slideshow frame rate…

I can’t help but wonder what the software is like today. They will have had to move with the times obviously, and for the money the customers were paying for the licenses they better be good.

REPLY ON YOUTUBE

Good explanation of the fundamentals. I’d add to that the “out of the ordinary”, where for example the front of the business faces a street which is busy at some times of the day and quiet at others or at night, but there is occasionally someone hanging around after calling a taxi or waiting for a friend – no big deal until that person hanging around in front of your business is the same one three or more days (or particularly nights) in a row. Now it is worth logging and saving the video, because if you later get a break-in, chances are it was them casing the place and waiting for the area to be quiet enough for them to risk forcing entry, likely having first found a way to blind the camera.

A good AI system can learn what is normal over time so that it can log and save video of anything which is not normal. That will require it to be set to learn at the start (or it will drive you mad by alerting you for everything), but after a week or two can be set to only add things to “normal” when they have been manually viewed and cleared. It does need more processing power (about a Raspberry Pi’s worth per camera), but it will pay off in reduced security staff time once it has built up a good database of the normal activity for the area it monitors. Is it worth mentioning that the storage for such a system should be both in the last place a burglar would look and with a mirror to a secure cloud account in case they do find the onsite copy? It also means that completely normal things can be deleted after a fairly short period, and that saves storage space.

REPLY ON YOUTUBE

Great info. I didn’t realize you also covered security cam stuff. Now I’m going to have to check out your camera/surveillance playlists!

REPLY ON YOUTUBE

Great explanation. Thank you

REPLY ON YOUTUBE

Good explanation

REPLY ON YOUTUBE

Thanks for your video. I am interested in Synology DVA systems, but the DVA1622 is not worth the money they are asking for, and with only a max of 2 DVA tasks, my interest in purchasing is even less. Then they have the other extreme (DVA3221) for $2,500 with a maximum of 12 DVA tasks. The DVA1622 should be priced at a lower range, and the mid-range version needs to be added with more CPU power than the DVA1622 and more tasks permitted, but at the current DVA1622 price model. I have read a fair amount of reviews from Amazon to online vendors, and most people either regret spending money on the DVA1622, keep it but don’t like its performance, or have problems with the unit.

REPLY ON YOUTUBE