Unable to maximize transfer speed on Synology DS-923+

Just put together the following setup:

NAS:

– Synology DS-923+ with 32GB RAM and 4x 10TB WD Red Plus drives in RAID 0

– Synology 10Gbps Ethernet adapter

Switch:

– TRENDnet 10Gbps switch

Desktop:

– TRENDnet 10Gbps Ethernet card using Marvell’s AQtion chipset

– Brand new CAT6A cabling connecting everything

– Jumbo frames set to 9000 on both the Synology and the desktop (the switch is unmanaged)

The WD Red Plus drives are rated for 215MB/s sustained. Therefore, 4 in RAID 0 should be able to reach 860MB/s, right?

But when I transfer a very large 128GB file from the desktop (NVMe SSD, rated 3000MB/s+) to this RAID 0 volume, I’m only getting 400MB/s, only about half of what should be expected.
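The expectation above can be written as a simple bottleneck model: a sequential copy runs no faster than its slowest stage, and RAID 0 ideally scales with drive count. This is a minimal sketch that assumes perfect striping, the drives’ rated 215MB/s, and the raw 10GbE rate (10Gbps / 8 = 1250MB/s) before any protocol overhead. The stage names are illustrative, not measurements.

```python
# Minimal bottleneck model for a sequential copy (illustrative assumptions only).


def raid0_throughput(drives: int, per_drive_mb_s: float) -> float:
    """Ideal RAID 0 sequential throughput: striping scales linearly with drive count."""
    return drives * per_drive_mb_s


def link_throughput(gbps: float) -> float:
    """Raw link rate in MB/s (decimal megabytes), ignoring protocol overhead."""
    return gbps * 1000 / 8


def expected_copy_speed(stages: dict[str, float]) -> tuple[str, float]:
    """A pipeline runs no faster than its slowest stage; return that stage and its rate."""
    name = min(stages, key=stages.get)
    return name, stages[name]


stages = {
    "source NVMe": 3000.0,                                # rated 3000MB/s+ per the post
    "10GbE link": link_throughput(10),                    # 1250 MB/s raw
    "RAID 0 (4x WD Red Plus)": raid0_throughput(4, 215),  # 860 MB/s ideal
}
bottleneck, ceiling = expected_copy_speed(stages)
observed = 400.0
print(f"{bottleneck}: {ceiling:.0f} MB/s expected, {observed / ceiling:.0%} achieved")
```

Under these assumptions the disks, not the link, should set the ceiling, which is why 400MB/s looks like roughly half of the expected figure.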

The Ethernet ports on the NAS, switch and desktop all light green, which they only do when the link is at 10Gbps; at 5Gbps and below they light orange.
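A green link light confirms the negotiated rate, not the throughput the path actually delivers. Taking the disks out of the picture with a memory-to-memory TCP transfer shows whether the network alone stops near 400MB/s (about 3.2Gbps). Tools such as iperf3 do this properly; the sketch below is a minimal stand-in, assuming Python is available on both ends. The function names are hypothetical.

```python
import socket
import threading
import time

CHUNK = 1 << 20  # 1 MiB per send/recv call


def receive_all(srv: socket.socket) -> int:
    """Accept one connection on a listening socket, discard the data, return bytes received."""
    conn, _ = srv.accept()
    received = 0
    with conn:
        while data := conn.recv(CHUNK):
            received += len(data)
    # conn is closed here, which unblocks the sender's final recv()
    return received


def send_for(host: str, port: int, total_bytes: int) -> float:
    """Stream total_bytes of zeros from memory; return the rate in MB/s (decimal)."""
    view = memoryview(b"\0" * CHUNK)
    sent = 0
    with socket.create_connection((host, port)) as s:
        start = time.perf_counter()
        while sent < total_bytes:
            n = min(CHUNK, total_bytes - sent)
            s.sendall(view[:n])
            sent += n
        s.shutdown(socket.SHUT_WR)  # tell the receiver no more data is coming
        s.recv(1)                   # block until it closes, i.e. all data was consumed
        elapsed = time.perf_counter() - start
    return sent / elapsed / 1e6


def loopback_check(total_bytes: int = 64 * CHUNK) -> tuple[float, int]:
    """Run both ends on 127.0.0.1 to sanity-check the script before using it on the LAN."""
    with socket.create_server(("127.0.0.1", 0)) as srv:  # port 0: let the OS pick one
        port = srv.getsockname()[1]
        result: list[int] = []
        t = threading.Thread(target=lambda: result.append(receive_all(srv)))
        t.start()
        rate = send_for("127.0.0.1", port, total_bytes)
        t.join()
    return rate, result[0]
```

Across the LAN, one end would run `receive_all(socket.create_server(("0.0.0.0", 5201)))` and the other `send_for("192.168.1.50", 5201, 20 * 10**9)`, where the address and port are placeholders. A result well above 400MB/s points at the storage side; a result near 400MB/s points at the network path (NICs, drivers, switch, or cabling).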

Any ideas what could be hindering the performance?

Gustavo

UPDATE

I removed all bottlenecks:

– Arguably a top NAS device

– Plenty of RAM

– Nothing running besides stock DSM 7.2; no third-party apps competing for resources

– Brand-new 7200RPM CMR hard drives, each rated for 215MB/s sustained writes

– Zero fragmentation on the HDDs: brand-new setup, no files on it other than DSM.

– A single 128GB file transferred from an NVMe drive on the desktop, so neither source fragmentation nor source speed is a bottleneck.

– All new 10GbE devices on the entire chain, with brand new Cat6A cables.

– Enabled 9000-byte jumbo frames on both the desktop and the NAS. The router is still at 1500, but the traffic does not pass through it, only through the 10Gb switch.
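Jumbo frames mainly cut per-packet overhead, and the arithmetic shows how small that gain is. This is a minimal sketch assuming IPv4, TCP without options, and standard Ethernet framing (14-byte header, 4-byte FCS, 8-byte preamble/SFD, 12-byte inter-frame gap); SMB and TCP-option overhead are ignored.

```python
# Usable TCP payload rate on Ethernet for a given MTU (simplified model).

ETH_OVERHEAD = 14 + 4 + 8 + 12  # header + FCS + preamble/SFD + inter-frame gap, bytes on the wire
IP_TCP_HEADERS = 20 + 20        # IPv4 + TCP headers without options, bytes inside the MTU


def payload_efficiency(mtu: int) -> float:
    """Fraction of wire bytes that carry TCP payload for full-size frames."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETH_OVERHEAD)


def usable_mb_s(link_gbps: float, mtu: int) -> float:
    """Payload throughput in MB/s (decimal) at full line rate."""
    return link_gbps * 1000 / 8 * payload_efficiency(mtu)


for mtu in (1500, 9000):
    print(f"MTU {mtu}: {payload_efficiency(mtu):.1%} efficient, ~{usable_mb_s(10, mtu):.0f} MB/s")
```

Under these assumptions a standard 1500-byte MTU still leaves roughly 1187MB/s and 9000 bytes about 1239MB/s, so frame size alone cannot explain a 400MB/s ceiling.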

I can’t work out where the bottleneck is. Maybe these 10TB WD Red Plus drives are much slower than rated, but mine are 7200RPM, not the 5400RPM of the 8TB models, so it is not unrealistic that they can sustain 200MB/s.

I will try iSCSI to see if anything changes, but even over SMB, speeds should be much higher.
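Comparing SMB and iSCSI is easier with a repeatable measurement than with a copy dialog’s readout. This is a minimal sketch that writes a file of a chosen size in large blocks and times it, including an `fsync` so the data has left the client’s cache before the clock stops. The function name and target path are hypothetical.

```python
import os
import time


def measure_write_mb_s(path: str, total_mb: int = 1024, block_mb: int = 8) -> float:
    """Write total_mb of random data to path in block_mb chunks; return MB/s (decimal).

    The timed section ends after os.fsync, so the figure covers flushing the
    client's cache, not just copying into it.
    """
    block = os.urandom(block_mb * 1_000_000)  # random, therefore incompressible, data
    blocks = total_mb // block_mb
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(block)
        f.flush()
        os.fsync(f.fileno())
    elapsed = time.perf_counter() - start
    return blocks * block_mb / elapsed
```

For example, `measure_write_mb_s("/mnt/nas/speedtest.bin", total_mb=64_000)`, where the path stands in for the mounted SMB share or the iSCSI volume; a size larger than the NAS’s 32GB of RAM keeps its write cache from inflating the result.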

UPDATE

Some additional tests:

Common setup

– DS-923+ with 32GB RAM, stock DSM 7.2 with no installed or running programs beyond the plain first-boot setup

– Synology proprietary 10 GbE card installed

– Switch: TrendNet S750 5-port 10GbE

– Desktop: Ryzen 5800X, 32GB, 2TB NVMe

– NIC card on desktop: TrendNet TEG-10GECTX (Marvell AQtion chipset)

– Jumbo Packet 9000 bytes

A) TESTING WITH 4 HDDS IN RAID0

iSCSI

4 HDDs in RAID0

Result: 300MB/s

–> Very weird that iSCSI performance is worse than SMB.

SMB

4 HDDs in RAID0

Btrfs

Result: 400MB/s

–> This seems too low, as each HDD is capable of 200MB/s.

SMB

4 HDDs in RAID0

Ext4

Result: 400MB/s

–> File system choice does not affect performance in this test.

B) TESTING WITH 2 HDDS IN RAID 0

SMB

2 HDDs in RAID0

Btrfs

Result: 400MB/s

–> This proves that these drives can sustain at least 200MB/s each. As far as the HDDs are concerned, four should reach 800MB/s.

SMB

2 HDDs in RAID0

Ext4

Result: 400MB/s

–> And file system choice does not affect performance in large file transfers.

C) TESTING WITH 4 HDDS IN 2 RAID 0 POOLS

SMB

2 HDDs in RAID0 and Ext4

+

2 HDDs in RAID0 and Btrfs

Simultaneous transfers from different SSDs on the desktop

Result: 200MB/s on each transfer

–> Clearly, there’s a cap at 400MB/s. Where is this cap coming from?

– The tests show the HDDs are not the bottleneck

– Maybe the Synology DS-923+ isn’t really 10GbE capable?

– Maybe the TRENDnet switch or NICs aren’t really 10GbE capable?
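Tabulating the test results makes the pattern explicit: the aggregate stays at about 400MB/s whether one transfer hits 2 drives, one hits 4, or two run in parallel against two pools, while the per-drive share halves as drives are added. This is a minimal sketch using the figures reported above; the `Run` record is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class Run:
    label: str
    drives: int                # HDDs receiving data in this run
    results_mb_s: list[float]  # one entry per simultaneous transfer


# Figures as reported in tests A, B and C.
runs = [
    Run("4x RAID0, iSCSI", 4, [300]),
    Run("4x RAID0, SMB, Btrfs", 4, [400]),
    Run("4x RAID0, SMB, Ext4", 4, [400]),
    Run("2x RAID0, SMB, Btrfs", 2, [400]),
    Run("2x RAID0, SMB, Ext4", 2, [400]),
    Run("2x RAID0 Ext4 + 2x RAID0 Btrfs, SMB", 4, [200, 200]),
]

best_total_by_drives: dict[int, float] = {}
for r in runs:
    total = sum(r.results_mb_s)
    print(f"{r.label:36} total {total:4.0f} MB/s, {total / r.drives:4.0f} MB/s per drive")
    best_total_by_drives[r.drives] = max(best_total_by_drives.get(r.drives, 0), total)

# If the disks were the limit, the best total would grow with drive count;
# here it is the same for 2 drives and 4 drives.
print(best_total_by_drives)
```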

UPDATE – FIXED

| Where to Buy a Product | |||

|

|

|

|

VISIT RETAILER ➤ |

|

|

|

VISIT RETAILER ➤ |

We use affiliate links on the blog allowing NAScompares information and advice service to be free of charge to you. Anything you purchase on the day you click on our links will generate a small commission which is used to run the website. Here is a link for Amazon and B&H. You can also get me a ☕ Ko-fi or old school Paypal. Thanks! To find out more about how to support this advice service check HERE

EVERYTHING NEW from Minisforum @ CES 2026

Gl.iNet Slate 7 PRO Travel Router (and Beryl 7) REVEALED

Minisforum N5 MAX NAS - 16C/32T, 128GB 8000MT RAM, 5xSATA, 5x M.2, 2x10GbE and MORE

The BEST NAS of 2026.... ALREADY??? (UnifyDrive UP6)

How Much RAM Do You Need in Your NAS?

A Buyer's Guide to Travel Routers - GET IT RIGHT, FIRST TIME

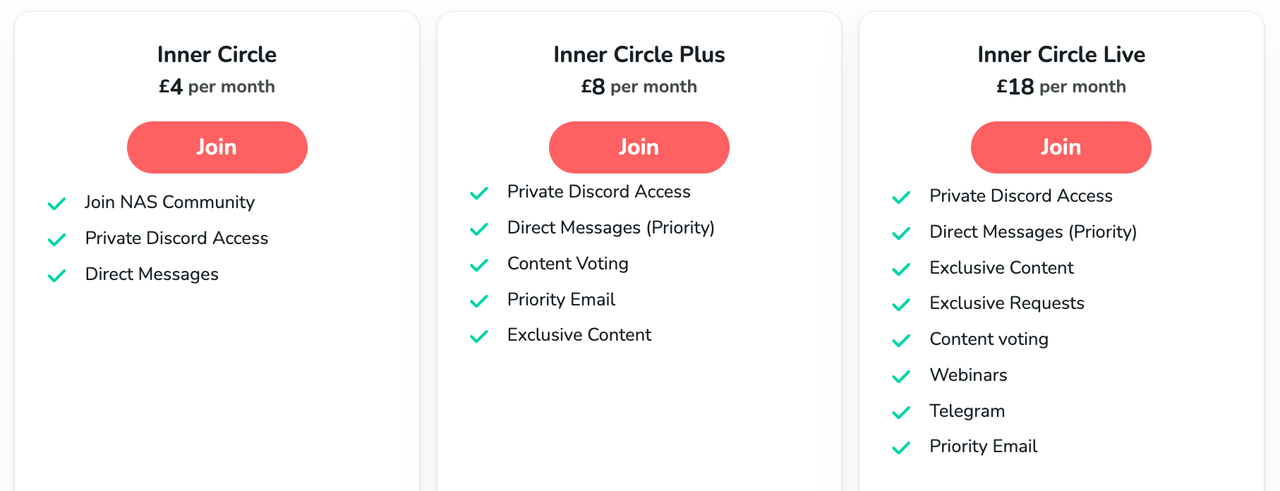

Access content via Patreon or KO-FI

Discover more from NAS Compares

Subscribe to get the latest posts sent to your email.

DISCUSS with others your opinion about this subject.

ASK questions to NAS community

SHARE more details what you have found on this subject

IMPROVE this niche ecosystem, let us know what to change/fix on this site