1. Introduction

High end NAS and AI workloads have reached a stage where consumer grade hardware is no longer enough. Local LLMs demand wide PCIe bandwidth and large memory pools, NAS arrays now rely heavily on NVMe for metadata and hot storage, and modern media workflows expect multigig transfers as a baseline. At the same time, AI inference, embeddings generation, and GPU accelerated compression are all being folded into everyday home lab setups. Because these workloads evolve quickly, planning a full five years ahead helps ensure that the system you build today still has room to grow into heavier models, denser storage, and faster network standards without requiring a complete rebuild.

————————————————————

2. Understanding Your Five Year Roadmap

Workloads rarely stay the same for long. Photo libraries grow steadily, video projects grow rapidly, and AI models grow explosively. Over a five year timeline, the fastest expanding workloads tend to be AI inference jobs, vector databases, local LLM hosting, and high resolution media. AI inference has modest CPU requirements but benefits from GPU acceleration and fast storage. AI training needs far more compute and bandwidth. LLM hosting requires large memory pools and fast NVMe scratch space.
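As a rough sizing aid for the memory point above, the space needed to hold a model can be approximated as parameter count times bytes per parameter, plus an allowance for cache and runtime buffers. The sketch below is illustrative only: the function name, the 20 percent overhead figure, and the example model sizes are assumptions, not measurements.

```python
def estimate_model_memory_gib(params_billion: float,
                              bits_per_param: int,
                              overhead_fraction: float = 0.2) -> float:
    """Approximate memory needed to hold a model's weights, in GiB.

    overhead_fraction is an assumed allowance for KV cache and runtime
    buffers; real overhead depends on context length and the runtime used.
    """
    weight_bytes = params_billion * 1e9 * bits_per_param / 8
    total_bytes = weight_bytes * (1 + overhead_fraction)
    return total_bytes / 2**30


if __name__ == "__main__":
    # Hypothetical model sizes and precisions, chosen only for illustration.
    for size_b in (7, 70):
        for bits in (16, 4):
            gib = estimate_model_memory_gib(size_b, bits)
            print(f"{size_b}B parameters at {bits}-bit: about {gib:.0f} GiB")
```

Comparing the 16-bit and 4-bit rows shows why quantisation and memory capacity are planned together: the same model can differ by a factor of four in footprint.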

NAS storage needs also grow, especially when projects move to higher bitrate formats or when ZFS features such as snapshots, metadata drives, and special vdevs are added. ZFS pools grow by adding vdevs, so expansion is easiest when the build has spare drive bays and spare PCIe lanes for extra HBAs or NVMe devices.
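A five year capacity estimate can be sketched with simple compound growth. The starting sizes and annual growth rates below are placeholders rather than figures from any real library, so substitute your own numbers.

```python
def projected_capacity_tb(current_tb: float, annual_growth: float, years: int) -> float:
    """Compound growth: capacity after `years` at a fixed annual growth rate."""
    return current_tb * (1 + annual_growth) ** years


if __name__ == "__main__":
    # Assumed workloads: (current size in TB, assumed yearly growth rate).
    workloads = {
        "photo library": (4.0, 0.15),
        "video projects": (20.0, 0.40),
    }
    for name, (tb, growth) in workloads.items():
        future = projected_capacity_tb(tb, growth, 5)
        print(f"{name}: {tb:.0f} TB today, about {future:.0f} TB in five years")
```

Even a modest looking yearly rate compounds quickly, which is why drive bay count and spare lanes matter at purchase time.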

GPU count is another major planning factor. Each GPU needs a full bandwidth PCIe slot, strong cooling, and a power budget that does not restrict future upgrades. PCIe allocation varies heavily between platforms, so ensuring enough usable lanes is critical.

Network expectations are also rising. Ten gigabit is the current baseline, but the industry is moving toward twenty five gigabit, USB4 direct attach links, and OCuLink point to point connections that bypass traditional bottlenecks. A five year roadmap needs room for at least one major network jump.

————————————————————

3. EPYC vs Threadripper Pro: Which Platform Fits the Roadmap

Although EPYC and Threadripper Pro share design roots, they are built for different priorities. EPYC is optimised for reliability, constant uptime, and very large memory footprints. Threadripper Pro aims for workstation performance, higher boost clocks, and heavier GPU workloads.

PCIe lane availability is similar on paper, but the topology differs. EPYC spreads lanes more evenly for storage and networking, which is ideal for large ZFS pools. Threadripper Pro concentrates bandwidth toward GPU slots, making it better for AI inference, mixed GPU workloads, and applications that need full speed x16 slots.

Upgrade paths also matter. EPYC platforms usually support several CPU generations across the same socket, allowing later upgrades to higher core counts. Threadripper Pro upgrade paths exist but are shorter and generally tied to workstation release cycles.

Memory support differs as well. EPYC supports very large memory pools built on registered ECC memory, which benefits virtualisation and ZFS. Threadripper Pro supports ECC too but emphasises memory bandwidth and latency for high performance computing and AI.

For multi GPU workloads, Threadripper Pro is the platform of choice due to higher boost clocks, stronger single threaded performance, and better PCIe placement for GPUs. For very large storage arrays, EPYC is better suited due to its reliability focus, wider platform support, and more flexible I/O allocation.

————————————————————

4. Best CPU Choices for 2025 Based on Use Case

Budget Gen5 entry point

Threadripper Pro 7945WX paired with a WRX90 Creator class motherboard gives you PCIe Gen5, multi GPU potential, and strong workstation performance at the lowest cost of entry for this tier. It is the most economical path into high lane count hardware.

NAS focused EPYC options

EPYC 9124 or EPYC 9354P remain the best choices for builders who prioritise ZFS, multi drive arrays, and stable virtualisation. Both processors give access to the EPYC SP5 platform with PCIe Gen5, enormous I/O capability, wide PCIe distribution, and exceptional ECC memory scaling.

AI focused Threadripper Pro options

Threadripper Pro 7965WX is ideal for heavy inference, embeddings generation, and up to three full bandwidth GPUs. For more demanding pipelines or multi model workloads, the Threadripper Pro 7995WX delivers unmatched compute performance and remains the strongest workstation option available.

Money no object flagship profile

Threadripper Pro 7995WX on a Supermicro WRX90 workstation board is the top end option. It provides extreme PCIe lane density, the highest core count, maximum memory bandwidth, and the best expansion footprint for several generations of GPU and storage upgrades.

————————————————————

5. Motherboard Selection: What Actually Matters

Choosing the right motherboard is the single most important decision in a five year NAS and AI roadmap because it determines every expansion option you will ever have. The first priority is slot layout. High performance GPUs require full length slots with enough spacing to ensure airflow, while HBAs and network cards require their own dedicated x8 or x16 lanes. A board might list many slots, but if they share bandwidth or disable each other when populated, you lose future flexibility.

PCIe Gen5 support is valuable for GPUs, NVMe arrays, and high speed networking, but it is not essential everywhere. GPUs benefit the most, large NVMe scratch arrays can make use of the extra bandwidth, and Gen5 OCuLink expansion becomes possible. For storage HBAs and SATA cards, Gen4 or even Gen3 is still more than adequate, because spinning drives cannot come close to saturating those links. A balanced board assigns Gen5 lanes to the primary x16 slots and Gen4 lanes to secondary devices to avoid bottlenecks.
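To put numbers on the generation comparison, theoretical link bandwidth can be derived from the per lane transfer rate and the 128b/130b line encoding used from Gen3 onward. This is a minimal sketch: the function name is illustrative, protocol overhead is ignored, and the per drive HDD throughput is an assumed round figure.

```python
# Raw transfer rate per lane in GT/s for each PCIe generation.
TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0, 5: 32.0}

# Gen3 through Gen5 use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gb_s(generation: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe link in GB/s.

    Protocol overhead is not modelled, so real throughput is somewhat lower.
    """
    per_lane = TRANSFER_RATE_GT_S[generation] * ENCODING_EFFICIENCY / 8
    return per_lane * lanes


if __name__ == "__main__":
    # Assumed figure: roughly 0.25 GB/s sequential throughput per HDD.
    hdd_count, hdd_gb_s = 8, 0.25
    print(f"Gen3 x8 HBA link: {link_bandwidth_gb_s(3, 8):.2f} GB/s")
    print(f"{hdd_count} HDDs combined: {hdd_count * hdd_gb_s:.2f} GB/s")
    print(f"Gen4 x4 NVMe link: {link_bandwidth_gb_s(4, 4):.2f} GB/s")
    print(f"Gen5 x16 GPU slot: {link_bandwidth_gb_s(5, 16):.2f} GB/s")
```

Under these assumptions an eight drive HDD array uses only a fraction of a Gen3 x8 link, which is why Gen5 lanes are better spent on GPUs and NVMe.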

Memory support is also a long term concern. The more RAM slots and higher supported capacity, the longer your system will remain viable for LLMs and ZFS. Boards that support large ECC configurations provide years of stability for virtualisation and storage work.

Onboard storage options affect how you structure your arrays. Some workstation boards include several M.2 or OCuLink connectors, which can serve as metadata drives or high speed scratch areas without consuming PCIe slots.

Power delivery design is extremely important for twenty four seven workloads. Server grade boards and workstation platforms use larger VRM arrays, heavier heatsinks, and better current regulation, which translates into higher stability and longer hardware life, especially under LLM workloads that hold the CPU and GPUs at high load for long periods.

Recommended boards include the ASUS Pro WS WRX90E SAGE SE and the Supermicro H13SWA series for Threadripper Pro builds, and the Supermicro H13SSL series for EPYC builds. Both classes offer strong lane allocation, workstation class VRMs, and long term BIOS support.

————————————————————

6. Case Selection for Long Term Expandability

A case must be chosen around airflow, noise, and physical compatibility with large workstation boards. High end GPUs and multi drive arrays produce significant heat, so airflow must be prioritised. Many modern cases use a front mesh design to ensure unhindered intake while still allowing reasonable acoustic comfort. For a NAS that will operate continuously, you want efficient airflow at low fan speed rather than brute force cooling.

Drive bay count is another long term factor. You want front accessible trays or cages that allow frequent drive replacement without dismantling the system. Hot swap cages are ideal for ZFS and for anyone who expands storage regularly.

GPU clearance becomes important once multi GPU builds enter the picture. Many workstation class cases now include riser mounts or vertical GPU placement to ensure cards fit without blocking drive cages. Choosing a case that physically supports EATX and SSI EEB is essential when building on Threadripper Pro or EPYC boards, since many standard ATX cases cannot accommodate the height or width of these platforms.

Rackmount systems are preferable for those who want dense storage, centralised cooling, and clean cable routing. Desktop towers are preferable for anyone prioritising low noise and easier physical access.

Recommended cases include the Fractal Define 7 XL and Lian Li O11D XL for desktop builds, and Supermicro four bay or eight bay chassis for rackmount options, all of which can support full size workstation boards and multi GPU layouts.

————————————————————

7. Recommended Hardware Configurations

For AI training, the recommended build uses a Threadripper Pro 7995WX with a WRX90 platform. This combination offers maximum PCIe bandwidth, high core counts, and strong multi GPU potential.

For local AI inference, a Threadripper Pro 7965WX build offers a more balanced approach. It delivers high single threaded performance, efficient GPU scaling, and enough lanes for several accelerators while maintaining lower power usage.

For a hybrid NAS plus AI system, a Threadripper Pro 7945WX or EPYC 9354P build provides both wide PCIe allocation and strong compute performance. This allows NVMe arrays, multi drive ZFS setups, and one or two GPUs without compromise.

For a pure NAS ZFS storage build, EPYC 9124 or 9354P paired with a Supermicro H13SSL style board remains the most robust choice. It offers ECC memory scaling, large lane count, and efficient twenty four seven operation with excellent I/O distribution.

For a budget Gen5 workstation NAS, the Threadripper Pro 7945WX with a WRX90 Creator board offers the lowest cost entry into Gen5 hardware with excellent PCIe expansion, solid performance, and long term upgrade paths.

————————————————————

8. NVMe and Storage Layer Planning

Mixing HDD and NVMe allows you to optimise for both performance and capacity. NVMe drives can serve as top tier scratch space, metadata areas, or special vdevs for ZFS. HDDs can remain the primary cold storage or project archive.

PCIe lane budgeting becomes important as soon as you introduce more than two NVMe drives. Each Gen4 or Gen5 NVMe drive can consume four lanes, so allocating these lanes without restricting GPUs or networking requires careful planning. Threadripper Pro and EPYC both provide enough lanes, but the motherboard layout determines how usable those lanes truly are.
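A lane budget is just a sum, but writing it down makes slot conflicts visible before you buy. The sketch below assumes a platform exposing 128 lanes and an example device mix. Both are illustrative assumptions, and the lanes actually usable depend on how a given motherboard wires its slots.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    lanes: int       # lanes consumed by one unit of this device
    count: int = 1   # how many of this device are installed


def lanes_used(devices: list[Device]) -> int:
    """Total PCIe lanes consumed by all listed devices."""
    return sum(d.lanes * d.count for d in devices)


def remaining_lanes(platform_lanes: int, devices: list[Device]) -> int:
    """Lanes left over; a negative result means the plan is oversubscribed."""
    return platform_lanes - lanes_used(devices)


if __name__ == "__main__":
    # Hypothetical build: adjust names, lane widths, and counts to your plan.
    plan = [
        Device("GPU", lanes=16, count=2),
        Device("NVMe drive", lanes=4, count=4),
        Device("HBA", lanes=8),
        Device("Dual 25GbE NIC", lanes=8),
    ]
    platform_lanes = 128  # assumed platform total
    print(f"Lanes used: {lanes_used(plan)}")
    print(f"Lanes remaining: {remaining_lanes(platform_lanes, plan)}")
```

Rerunning the sum with the five year target, for example a third GPU or eight NVMe drives, shows whether the board still has headroom.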

ZFS benefits greatly from SSD mirrors, small metadata pools, and fast special vdevs. Using two NVMe drives in a mirror for metadata and small block operations can increase overall performance significantly. Using larger NVMe arrays for active editing reduces latency dramatically for video workflows.
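The mirrored metadata layout described above maps onto a single `zpool create` invocation, where the `special mirror` keywords introduce the NVMe pair. The helper below only assembles the argument list and does not run anything. The pool name, device names, and the choice of raidz2 for the HDDs are hypothetical placeholders.

```python
def build_zpool_create(pool: str,
                       data_disks: list[str],
                       special_disks: list[str],
                       data_layout: str = "raidz2") -> list[str]:
    """Assemble a `zpool create` command with a mirrored special vdev.

    The command is returned as an argument list and is not executed here.
    """
    if len(special_disks) < 2:
        # Losing an unmirrored special vdev loses the pool, so require a mirror.
        raise ValueError("use at least two devices so the special vdev can be mirrored")
    return ["zpool", "create", pool, data_layout, *data_disks,
            "special", "mirror", *special_disks]


if __name__ == "__main__":
    # Hypothetical device names; check your own with lsblk before use.
    hdds = [f"/dev/sd{letter}" for letter in "abcdef"]
    nvmes = ["/dev/nvme0n1", "/dev/nvme1n1"]
    print(" ".join(build_zpool_create("tank", hdds, nvmes)))
```

Printing the command first, rather than executing it, leaves room to review the device list before anything is written to disk.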

High performance editing pools often require several NVMe drives operating in parallel, while cold storage bays can rely on larger HDD arrays. Long term planning allows you to create separate fast and slow storage layers, each optimised for a different workload.

————————————————————

9. Networking Roadmap for the Next Five Years

Ten gigabit networking remains the practical baseline for most NAS and AI workstation users. It is fast enough for multi stream 4K editing, fast VM storage, and high speed large file transfer. If your workflow involves a single editor or a single GPU host accessing the NAS, ten gigabit will not be the bottleneck for several years. For many users this continues to be the most cost effective and most compatible standard, especially because switches and adapters have become inexpensive and reliable.

Twenty five gigabit becomes relevant once you are running multi user editing, multi GPU pipelines, or streaming high bandwidth datasets into memory for AI training. The price gap has narrowed and many workstation motherboards now support PCIe cards that offer dual twenty five gigabit, so moving to this tier early allows seamless scaling later. USB4 and OCuLink direct attach links can exceed ten gigabit Ethernet for point to point transfers. These connections offer several gigabytes per second with lower latency, which is ideal when you want a direct editor to NAS pipeline without involving a switch.
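The practical difference between the ten and twenty five gigabit tiers is easiest to judge as transfer time for a typical project. This is a rough sketch: the 90 percent efficiency factor and the 1000 GB project size are assumptions, and real results also depend on the storage at each end.

```python
def transfer_time_seconds(size_gb: float,
                          link_gbit_s: float,
                          efficiency: float = 0.9) -> float:
    """Time to move size_gb gigabytes over a link rated in gigabits per second.

    efficiency is an assumed fraction of line rate left after protocol overhead.
    """
    usable_gb_per_s = link_gbit_s / 8 * efficiency
    return size_gb / usable_gb_per_s


if __name__ == "__main__":
    project_gb = 1000  # hypothetical project size
    for link in (10, 25):
        minutes = transfer_time_seconds(project_gb, link) / 60
        print(f"{project_gb} GB over {link} GbE: about {minutes:.1f} minutes")
```

If the result at ten gigabit already fits your working rhythm, the jump to the next tier can wait until more users or GPUs share the link.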

Switch planning depends on how many high bandwidth endpoints you expect to use within the next five years. A simple ten gigabit switch is enough for most studios. If you anticipate dual twenty five gigabit links or hybrid networks that include USB4 bridges, consider a switch with at least one or two SFP28 uplinks and multiple ten gigabit ports. For small deployments, you can skip the switch entirely and rely on direct attach through USB4, OCuLink, or SFP to the workstation, then add switching only when you expand.

————————————————————

10. Power and Cooling Considerations

Sizing the CPU cooler correctly is essential on workstation platforms. Threadripper Pro and EPYC chips require large air coolers or high performance liquid coolers with strong VRM airflow support. Undersized coolers lead to thermal throttling and long term VRM stress. For twenty four seven systems, tower air coolers are often more reliable than liquid solutions, provided the case supports their height.

GPU cooling becomes more difficult as you add more accelerators. Spacing is critical, but so is airflow direction. Open air GPUs can suffocate when installed closely together, while blower style GPUs exhaust heat directly out of the chassis. A well planned build isolates GPU heat away from HDD bays and prevents recirculation that raises system temperatures.

Power supplies need both sufficient wattage and the correct rail distribution. Multi GPU builds often require high quality one thousand watt or higher PSUs with multiple twelve volt rails or a single strong rail with proper overcurrent protection. Efficient PSUs reduce heat and noise, which directly affects the comfort of the environment in smaller rooms.
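PSU sizing can be sketched as the sum of component draw plus headroom. Every wattage below is an assumed placeholder, as is the 30 percent headroom figure, so check the rated power of your actual parts before relying on the result.

```python
def recommended_psu_watts(component_watts: dict[str, float],
                          headroom: float = 0.3) -> float:
    """Sum component power draw and add a fractional safety margin."""
    return sum(component_watts.values()) * (1 + headroom)


if __name__ == "__main__":
    # Hypothetical build; replace each figure with the rated draw of your parts.
    build = {
        "CPU": 350,
        "GPU 1": 300,
        "GPU 2": 300,
        "8 HDDs": 80,
        "Board, RAM, NVMe, fans": 100,
    }
    total = sum(build.values())
    print(f"Estimated load: {total} W")
    print(f"Suggested PSU rating: about {recommended_psu_watts(build):.0f} W")
```

Sizing against the five year GPU count rather than the current one avoids replacing the power supply at the first upgrade.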

Noise management matters even in performance focused systems. Larger fans spinning slowly produce far less noise than small fans at high RPM. Cases with large front intake, rear exhaust, and open top ventilation allow smoother airflow at lower fan speeds. For rackmount systems, you can rely on deeper chassis with larger fans or plan for acoustic isolation in a separate room.

————————————————————

11. Final Recommendations Summary

If you plan heavy AI use, choose Threadripper Pro with a WRX90 board. This platform provides the lane count, cooling support, and upgrade headroom that multi GPU training and large local LLM hosting demand. It remains the most flexible option for AI oriented five year roadmaps.

If you need massive storage, EPYC with a Supermicro H13 class board is the most reliable long term choice. It offers wide PCIe connectivity for HBAs, large ECC memory capacity for ZFS, and excellent twenty four seven stability.

If you want a balanced hybrid that handles both NAS workloads and GPU acceleration, a mid tier Threadripper Pro such as the 7945WX or an EPYC 9354P build gives you efficient compute performance, large lane budgets, and enough resources for both storage and AI without excess cost.

If you want the cheapest five year entry path, Threadripper Pro 7945WX on a WRX90 Creator board delivers PCIe Gen5 scalability, workstation reliability, and room to move to a higher tier chip later without paying for one up front. This is the most affordable way to secure a long lifespan while keeping room for GPUs, NVMe arrays, and faster networking as your workloads evolve.

————————————————————

Conclusion

Choosing the right motherboard, CPU, and case for a system that must last five years is no longer just a matter of picking components with high core counts or large drive bays. Modern NAS and AI workloads push hardware in ways that traditional home servers never had to contend with, and the rate of change is only accelerating. The rise of local LLMs, multi GPU pipelines, PCIe Gen5 NVMe arrays, multi gig networking, and hybrid NAS plus compute workflows means that long term planning must focus on expandability rather than simply today’s performance.

EPYC and Threadripper Pro platforms offer very different strengths, and understanding your five year roadmap is the deciding factor. EPYC provides unmatched storage scalability, ECC memory capacity, and ZFS friendly architecture. Threadripper Pro delivers the PCIe bandwidth, core speed, and GPU centric design required for AI workloads. Once the platform is chosen, the motherboard determines whether you will be able to grow or whether the build will reach a limit sooner than expected. Case choice matters just as much, since cooling, drive accessibility, fan sizing, and physical support for large workstation boards determine how well the system performs under sustained loads.

With a clear roadmap, it becomes straightforward to select the right combination. Heavy AI use thrives on WRX90 platforms. Large ZFS arrays belong on EPYC. Balanced hybrid environments sit comfortably in the middle with mid tier Threadripper Pro or EPYC single socket solutions. Budget conscious builders can start with lower tier Gen5 chips and still have room to grow into multi GPU or multi pool NVMe arrays in the future.

A well planned system today avoids costly upgrades later. When you design around PCIe lanes, cooling headroom, future networking standards, and storage architecture from the beginning, your NAS and AI platform will remain capable, efficient, and relevant far longer than any single generation consumer build.

| Where to Buy a Product | |||

|

|

|

|

VISIT RETAILER ➤ |

|

|

|

VISIT RETAILER ➤ |

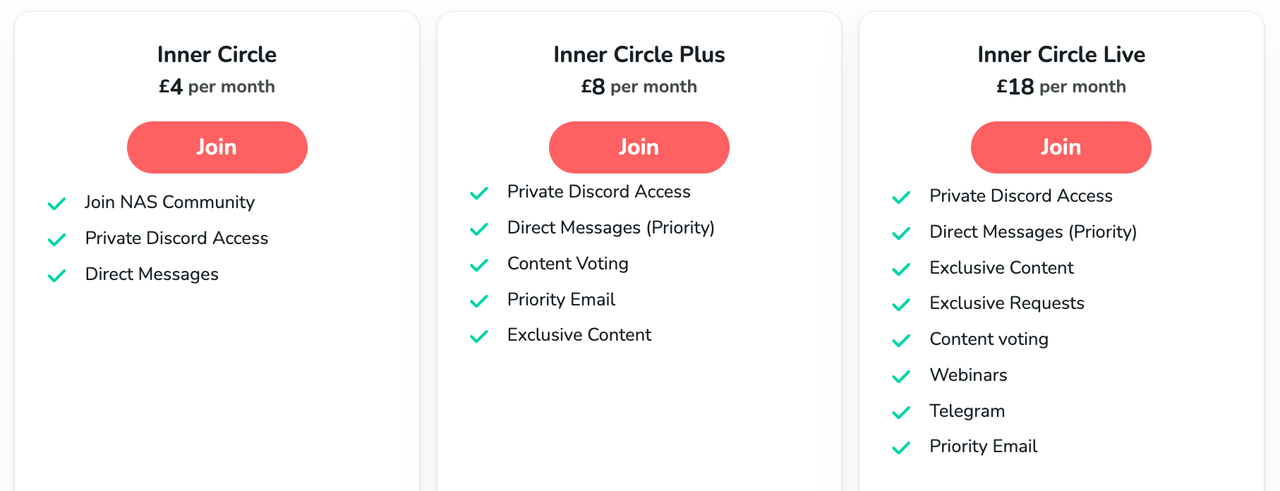

We use affiliate links on the blog allowing NAScompares information and advice service to be free of charge to you. Anything you purchase on the day you click on our links will generate a small commission which is used to run the website. Here is a link for Amazon and B&H. You can also get me a ☕ Ko-fi or old school Paypal. Thanks! To find out more about how to support this advice service check HERE

Gl.iNet vs UniFi Travel Routers - Which Should You Buy?

UnifyDrive UP6 Mobile NAS Review

UniFi Travel Router Tests - Aeroplane Sharing, WiFi Portals, Power Draw, Heat and More

UGREEN iDX6011 Pro NAS Review

Beelink ME PRO NAS Review

UGREEN iDX6011 Pro - TESTING THE AI (What Can it ACTUALLY Do?)

Access content via Patreon or KO-FI

Discover more from NAS Compares

Subscribe to get the latest posts sent to your email.

DISCUSS with others your opinion about this subject.

ASK questions to NAS community

SHARE more details what you have found on this subject

IMPROVE this niche ecosystem, let us know what to change/fix on this site