20 Ways to Improve Your 10GbE Network Speeds

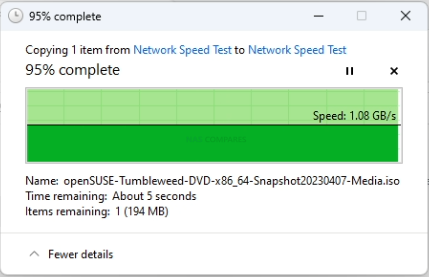

Upgrading to 10GbE networking should, in theory, allow you to achieve roughly 1GB/s network speeds (10Gbps equals 1250MB/s on the wire, and typically around 1100-1180MB/s of real data once protocol overhead is taken into account), unlocking ultra-fast data transfers for large files, backups, and high-performance applications. However, many users find that real-world performance falls far short of these expectations. Instead of the seamless, high-speed experience they anticipated, they encounter slower-than-expected speeds, inconsistent performance, and unexplained bottlenecks that limit throughput.

Whether you’re using a NAS, a 10GbE switch, or a direct PC-to-NAS connection, numerous factors can influence network performance. These can range from hardware limitations (such as underpowered CPUs, slow storage, or limited PCIe lanes) to misconfigured network settings (like incorrect MTU sizes, VLAN issues, or outdated drivers). Even the quality of your network cables and transceivers can play a crucial role in determining whether you’re getting the full 10GbE bandwidth or suffering from hidden bottlenecks.

In this guide, we’ll explore TWENTY common reasons why your 10GbE network might not be delivering full speeds, along with detailed fixes and optimizations for each issue. Each point is carefully explained, ensuring that you can identify, diagnose, and resolve the specific problems affecting your network performance. Whether you’re dealing with a NAS that isn’t reaching expected speeds, a 10GbE adapter that’s underperforming, or a switch that isn’t behaving as expected, this guide will help you troubleshoot step by step, so you can fully unlock the potential of your 10GbE network.

1. (Obvious one) Your Storage is Too Slow to Keep Up with 10GbE Speeds

The Problem:

One of the biggest misconceptions about 10GbE networking is that simply having a 10GbE network adapter means you will automatically get 1GB/s speeds. However, your actual storage performance is often the bottleneck. Most traditional hard drives (HDDs) have a sequential read/write speed of only 160-280MB/s, meaning that a single drive cannot fully saturate a 10GbE connection. Even with multiple HDDs in a RAID array, performance may still fall short of 1GB/s due to RAID overhead and the limitations of mechanical disks.

For example, if you have a 4-bay NAS with standard 7200RPM hard drives in RAID 5, you may only reach 500-600MB/s, which is half the potential of your 10GbE network. The situation gets worse if you are using RAID 6, as the additional parity calculations introduce a write performance penalty.
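To see why a 4-bay HDD array falls short, here is a rough back-of-the-envelope model in Python. It assumes sequential throughput scales with the number of data-bearing drives (total drives minus parity drives) and uses assumed per-drive speeds of 200MB/s for a 7200RPM HDD and 550MB/s for a SATA SSD. Real arrays lose some of this to controller, filesystem, and RAID overhead, so treat the results as an upper bound rather than a prediction.

```python
# Rough rule of thumb only: sequential throughput ~ (drives - parity drives) x per-drive speed.
# Real arrays lose some of this to controller, filesystem and RAID overhead.
TEN_GBE_MB_S = 10_000 / 8  # 10Gbps line rate = 1250 MB/s before protocol overhead

PARITY_DRIVES = {"raid0": 0, "raid5": 1, "raid6": 2}


def estimated_seq_read_mb_s(drives: int, per_drive_mb_s: float, level: str) -> float:
    """Idealized sequential read estimate for a striped array."""
    data_drives = drives - PARITY_DRIVES[level]
    if data_drives < 1:
        raise ValueError("not enough drives for this RAID level")
    return data_drives * per_drive_mb_s


def bottleneck(storage_mb_s: float) -> str:
    """Report which side limits a single sequential transfer over 10GbE."""
    return "storage" if storage_mb_s < TEN_GBE_MB_S else "network"


if __name__ == "__main__":
    hdd_array = estimated_seq_read_mb_s(4, 200, "raid5")  # assumed 200 MB/s per HDD
    ssd_array = estimated_seq_read_mb_s(4, 550, "raid5")  # assumed 550 MB/s per SATA SSD
    print(hdd_array, bottleneck(hdd_array))
    print(ssd_array, bottleneck(ssd_array))
```

With these assumptions the HDD array lands at 600MB/s (storage-bound), while four SATA SSDs exceed the 1250MB/s line rate, so the network becomes the limit.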

The Fix:

- Switch to SSDs: If you need consistent 10GbE performance, you will need SSDs instead of HDDs. Even four SATA SSDs in RAID 5 can saturate a 10GbE connection (~1GB/s read/write).

- Use NVMe Storage for Maximum Speeds: If your NAS supports NVMe SSDs, using them will provide 3-5GB/s speeds, which far exceeds 10GbE bandwidth.

- Optimize RAID Configuration:

- RAID 0 offers maximum speed, but no redundancy.

- RAID 5 or RAID 10 is the best balance for speed and data protection.

- RAID 6 is great for redundancy but can severely impact write performance.

How to Check Disk Speeds:

Run a disk speed test to verify if storage is the issue:

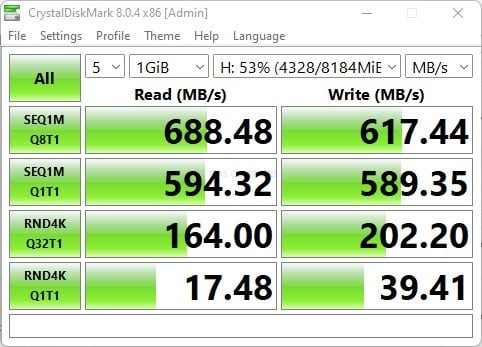

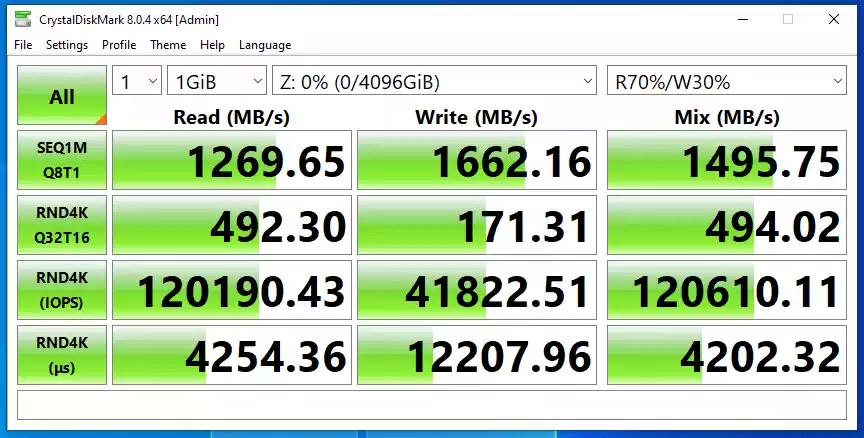

Windows (CrystalDiskMark)

- Download and install CrystalDiskMark.

- Select your storage volume (NAS drive, local SSD, etc.).

- Run a sequential read/write test.

- If speeds are below 1GB/s, your storage is the bottleneck.

Linux/macOS (dd Command)

- Use dd to write a 5GB test file to the volume you want to test (for example, a mounted NAS share) and measure sequential write speed.

- Check the MB/s value after the test completes—if it’s below 1000MB/s, your storage is too slow.
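If you would rather not rely on dd, the same idea can be sketched in Python: write a large amount of incompressible data, force it to disk with fsync, and divide by the elapsed time. This is a minimal sketch, not a replacement for CrystalDiskMark or fio. The result is in MiB/s (1000MB/s is about 954MiB/s), and on a network share how fsync behaves depends on the client and server.

```python
import os
import time

MIB = 1024 * 1024


def sequential_write_mib_s(path: str, total_mib: int = 5120, block_mib: int = 1) -> float:
    """Write total_mib of incompressible data to path and return the speed in MiB/s.

    The file is fsync'd before the clock stops so the OS page cache does not
    inflate the result, and the test file is deleted afterwards.
    """
    block = os.urandom(block_mib * MIB)  # random bytes so compression on the target cannot help
    blocks = total_mib // block_mib
    start = time.perf_counter()
    try:
        with open(path, "wb") as f:
            for _ in range(blocks):
                f.write(block)
            f.flush()
            os.fsync(f.fileno())  # wait until the data has been handed to the storage device
        elapsed = time.perf_counter() - start
    finally:
        if os.path.exists(path):
            os.remove(path)
    return blocks * block_mib / elapsed
```

For example, `sequential_write_mib_s("/mnt/nas/speedtest.bin")` on Linux or `sequential_write_mib_s(r"Z:\speedtest.bin")` on Windows, where the mount point and drive letter are hypothetical placeholders for your own volume. Note that the same block is written repeatedly, so a volume with deduplication enabled may report inflated numbers.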

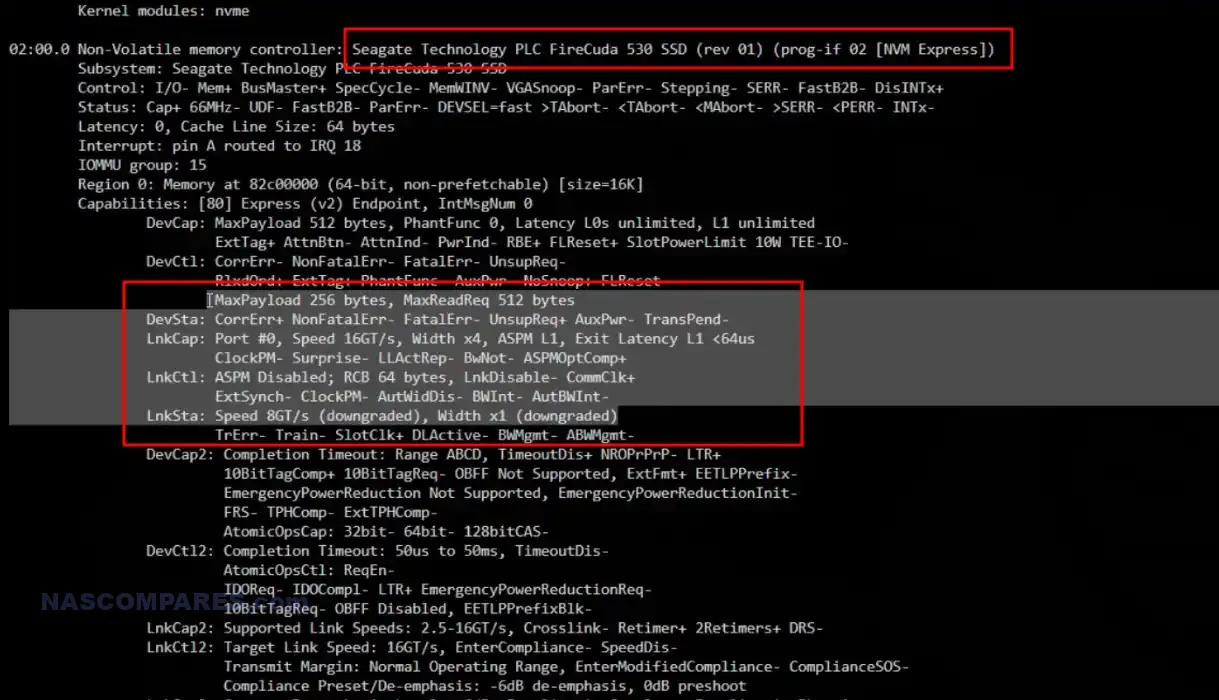

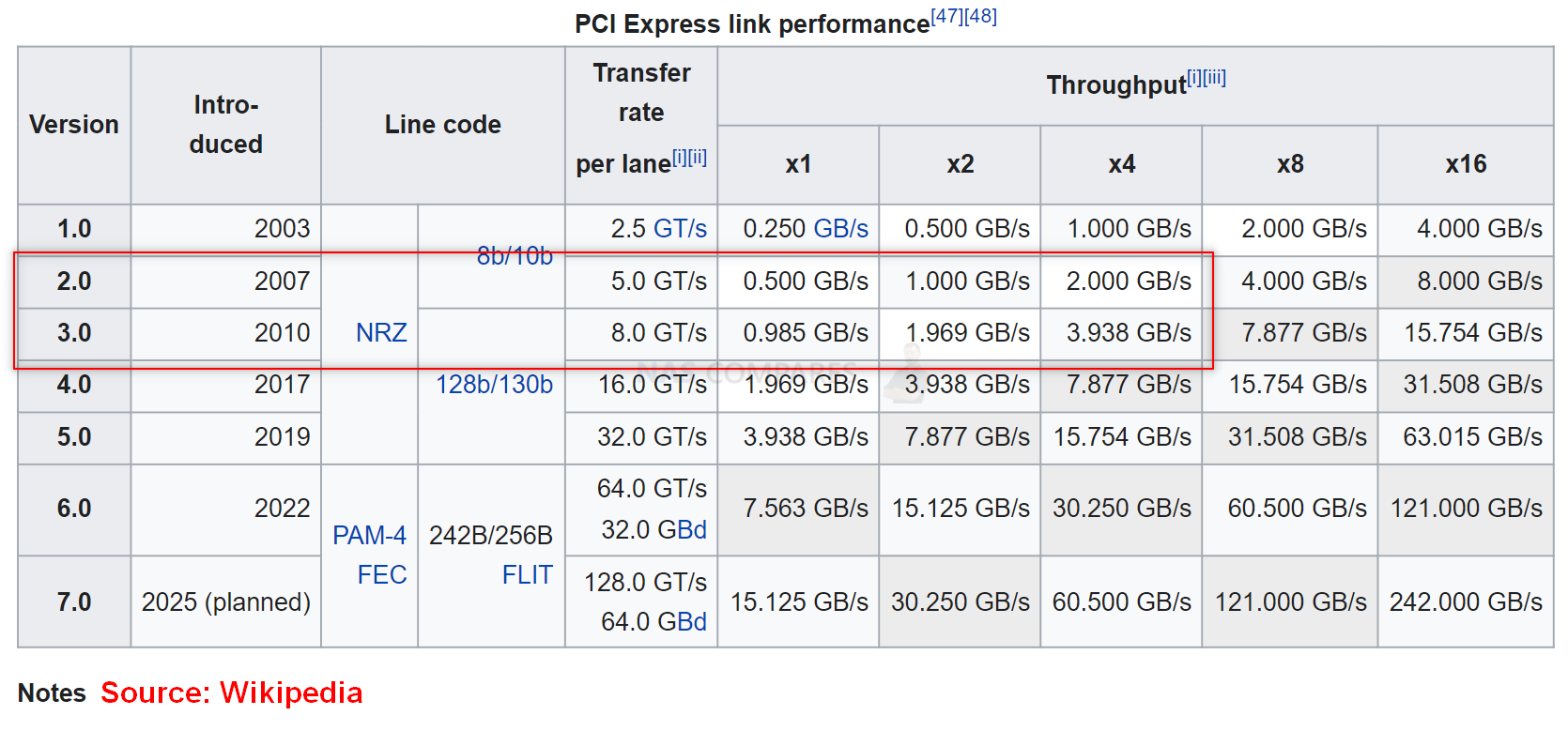

2. Your SSDs or NVMe Drives Are Running at Lower PCIe Speeds

The Problem:

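Even if your NAS or PC is using SSDs, you might not be getting full speeds due to PCIe lane limitations. Some NAS devices wire their M.2 NVMe slots as PCIe 3.0 x1 or x2 links. An x1 link tops out at roughly 800-900MB/s in practice, which is not enough to saturate a 10GbE connection, and an x2 link (roughly 1600MB/s) only just clears it while capping a fast NVMe drive at a fraction of its rated speed.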

This issue is particularly common in budget-friendly NAS systems and motherboards where multiple M.2 slots share bandwidth with SATA ports or other PCIe devices. Even high-speed SSDs like the Samsung 980 Pro (7000MB/s rated speed) will be bottlenecked if placed in an underpowered slot.
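The lane math behind these caps is simple enough to check yourself. This sketch computes the theoretical per-direction bandwidth of a PCIe link from its transfer rate and line encoding (8b/10b for Gen 1-2, 128b/130b for Gen 3-4) and caps an SSD's rated speed at that figure. Real-world throughput sits below these theoretical numbers, which is why a PCIe 3.0 x1 slot lands in the 800-900MB/s range in practice.

```python
# Per-lane transfer rate (GT/s) and line-encoding efficiency for each PCIe generation.
GT_PER_S = {1: 2.5, 2: 5.0, 3: 8.0, 4: 16.0}
ENCODING = {1: 8 / 10, 2: 8 / 10, 3: 128 / 130, 4: 128 / 130}
TEN_GBE_MB_S = 1250  # 10Gbps line rate in MB/s


def pcie_link_mb_s(gen: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe link in MB/s (before protocol overhead)."""
    return GT_PER_S[gen] * ENCODING[gen] / 8 * 1000 * lanes


def effective_ssd_mb_s(rated_mb_s: float, gen: int, lanes: int) -> float:
    """An SSD can never go faster than the link it sits on."""
    return min(rated_mb_s, pcie_link_mb_s(gen, lanes))


if __name__ == "__main__":
    for lanes in (1, 2, 4):
        speed = effective_ssd_mb_s(7000, 3, lanes)  # a 7000 MB/s-rated drive in a Gen 3 slot
        verdict = "below" if speed < TEN_GBE_MB_S else "at or above"
        print(f"Gen3 x{lanes}: ~{speed:.0f} MB/s ({verdict} the 10GbE line rate)")
```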

The Fix:

- Check PCIe Lane Assignments:

- Some motherboards share PCIe lanes between M.2 slots and other components (e.g., GPU, SATA ports).

- Move your NVMe SSD to a full x4 slot for maximum speed.

Linux/macOS (Check PCIe Speeds)

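- Check the link width reported for the SSD: on Linux, run `sudo lspci -vv` and look at the LnkSta line for the NVMe controller; on macOS, open System Information > NVMExpress and check Link Width. If it reports x1 or x2, your SSD is not running at full bandwidth.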

Windows (Check with CrystalDiskInfo)

- Download CrystalDiskInfo.

- Look for the Transfer Mode field, which shows the current and supported PCIe link (for example, PCIe 3.0 x2 | PCIe 4.0 x4).

If speeds are lower than expected, try moving the SSD to a different M.2 slot or checking BIOS settings to enable full PCIe bandwidth.

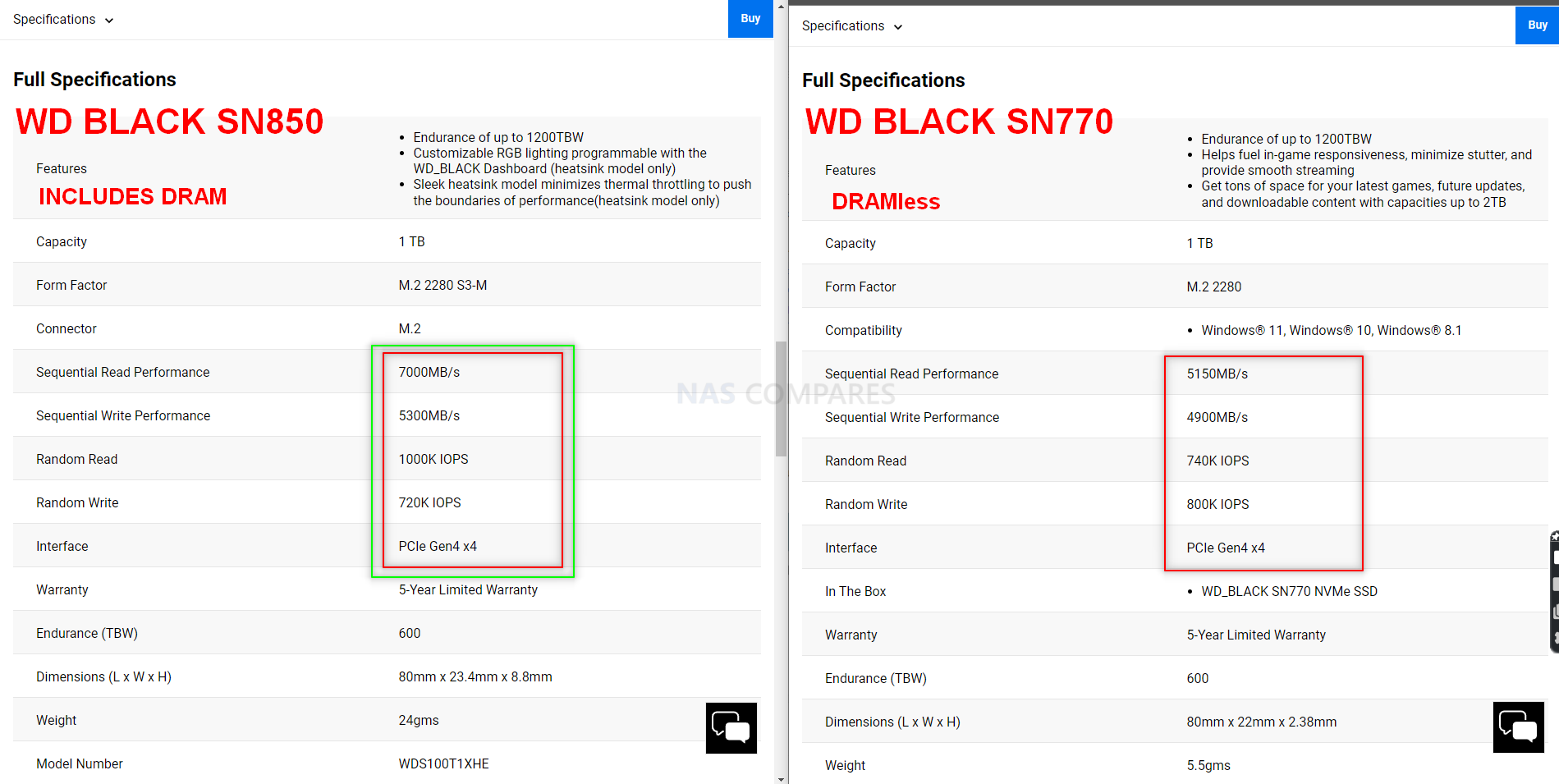

3. You’re Using DRAM-less SSDs (HMB-Only SSDs Can Throttle Speeds)

The Problem:

Not all SSDs are created equal. Some budget SSDs lack a DRAM cache and instead rely on Host Memory Buffer (HMB), which borrows a small slice of system RAM to hold the drive’s mapping table. While this design helps reduce costs, it often means noticeably lower sustained write performance.

For a single SSD, this might not be an issue, but in a RAID configuration, the problem worsens as multiple drives compete for system memory. DRAM-less SSDs also tend to overheat faster, leading to thermal throttling, further reducing performance.

The Fix:

- Use SSDs with DRAM cache: High-performance SSDs like the Samsung 970 EVO, WD Black SN850, and Crucial P5 Plus have dedicated DRAM to prevent slowdowns.

- Monitor SSD temperatures:

- If SSDs are overheating (above 70°C), use heatsinks or active cooling.

- Check SSD type in Windows:

- Open Device Manager → Expand Disk Drives.

- Search your SSD model online—if it lacks DRAM, it could be a performance bottleneck.

4. Your Switch is Not Actually 10GbE (Misleading Switch Descriptions)

The Problem:

Many users unknowingly purchase “10GbE” switches that only have limited 10GbE ports. Some switches advertise 10GbE speeds, but only one or two ports support it, while the rest run at 1GbE.

It’s also possible that your NAS or PC is plugged into a non-10GbE port, creating an invisible bottleneck.

The Fix:

- Check the switch model’s specifications to confirm the number of true 10GbE ports.

- Log into your switch’s admin panel and confirm the port speeds:

- If using Netgear, Ubiquiti, or Cisco, log in and check the port status.

- If using a managed switch, connect via SSH and run its port status command (for example, `show interfaces status` on Cisco IOS-style switches).

- Look for 10G/10000M to confirm that the port is running at full speed.

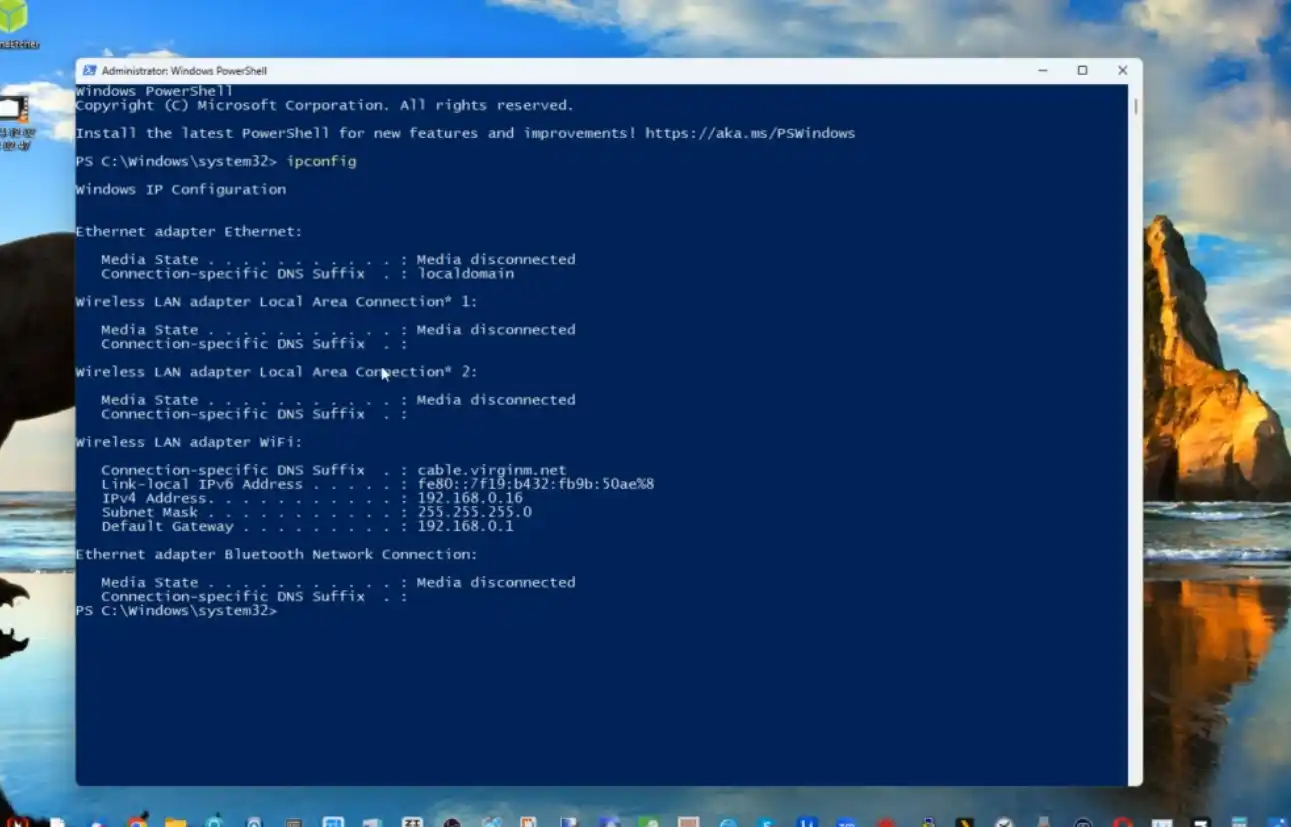

Windows (Check Network Speed)

- Open Control Panel > Network and Sharing Center.

- Click on your 10GbE adapter → Check Speed (should show 10.0Gbps).

If your switch only has 1-2 ports at 10GbE, you may need to reconfigure your network layout or upgrade to a full 10GbE switch.

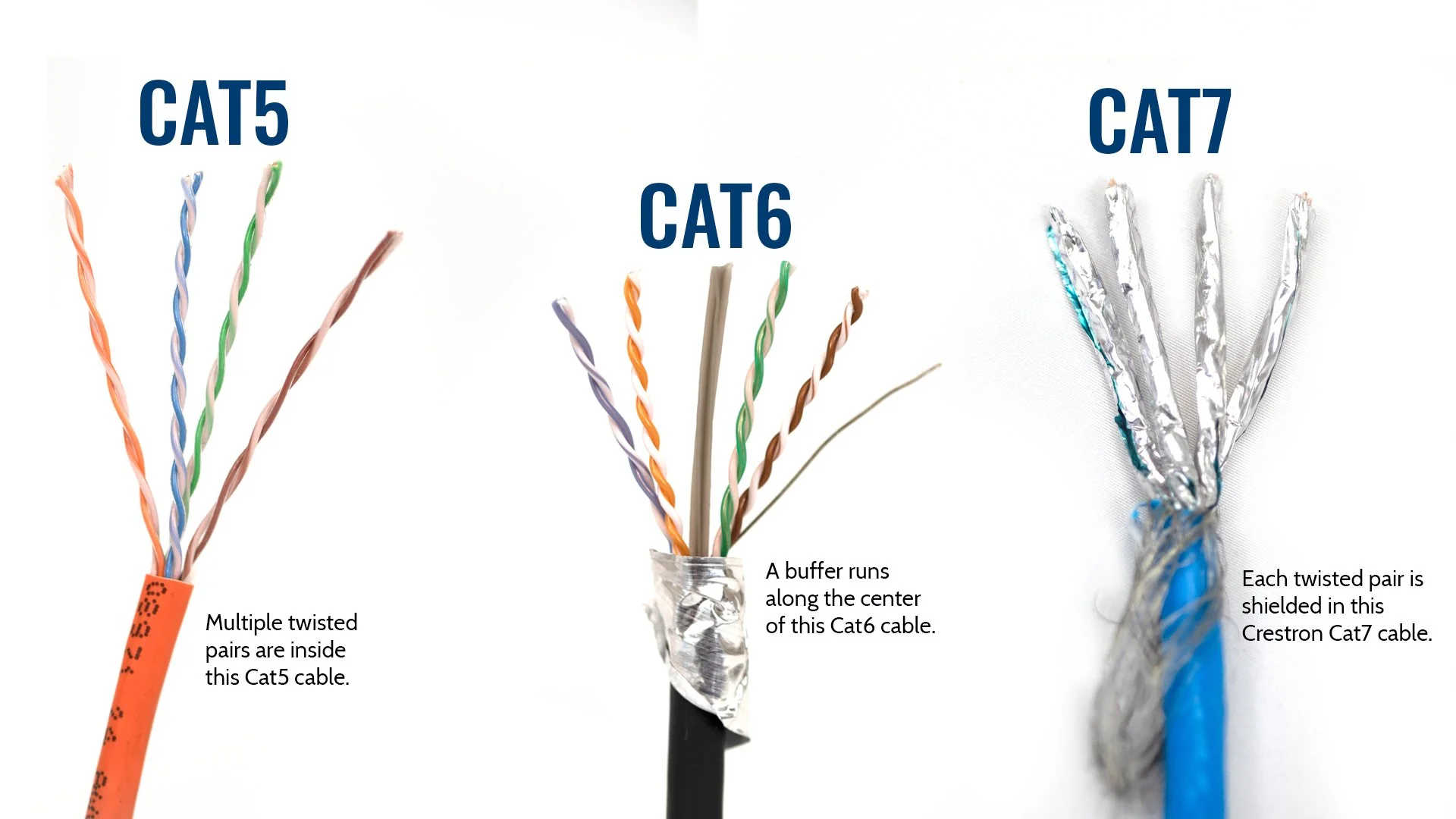

5. You’re Using the Wrong Ethernet Cables (Cat5e vs. Cat6/Cat7)

The Problem:

Not all Ethernet cables can handle 10GbE speeds over long distances. Cat5e is not rated for 10GBASE-T at all; it may work over very short runs, but performance drops significantly beyond about 10 meters.

The Fix:

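- Use at least Cat6 for short runs (Cat6 is rated for 10GBASE-T up to 55 meters, but keeping runs under 30 meters is the safer choice).

- Use Cat6a or Cat7 for long runs (30m+); Cat6a is rated for 10GbE over the full 100 meters.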

- Inspect cables—cheap or old cables may not be rated for 10GbE.

How to Check Your Cable Type

- Look at the cable jacket—it should say Cat6, Cat6a, or Cat7.

- If the cable does not specify, assume it’s Cat5e and replace it.

If using fiber, make sure your SFP+ transceivers are rated for 10GbE—many cheap adapters are 1GbE only.

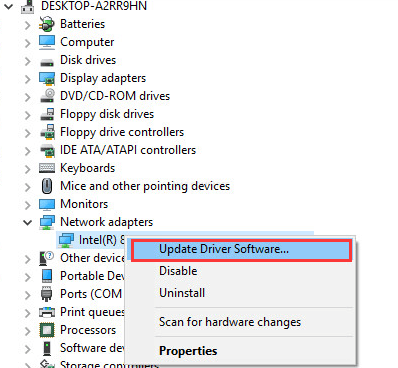

6. Your Network Adapter is Using the Wrong Driver or Firmware

The Problem:

Even if you have a 10GbE network adapter installed, outdated or incorrect drivers can limit speeds or cause inconsistent performance. Many network cards rely on manufacturer-specific drivers for optimal performance, but some operating systems may install generic drivers that lack key optimizations.

This issue is common with Intel, Mellanox, Broadcom, and Aquantia/AQC NICs—especially if they were installed manually or came pre-installed with a NAS or prebuilt server.

The Fix:

- Check your network adapter model:

- Windows: Open Device Manager > Network Adapters and find your 10GbE NIC name.

- Linux/macOS: List your installed NICs from the terminal (for example, `lspci | grep -i ethernet` on Linux).

- Update the driver manually:

- Windows: Go to the manufacturer’s website (Intel, Broadcom, Mellanox, etc.) and download the latest driver.

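- Linux: Check the driver and firmware version in use with `ethtool -i <interface>`, then update through your distribution’s packages or the manufacturer’s driver download.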

- Check and update NIC firmware: Some network cards require a firmware update for full 10GbE support. Many Aquantia NICs, for example, need firmware updates to fix link speed negotiation issues.

- Ensure your OS isn’t using a generic driver:

- In Windows, open Device Manager, right-click the NIC, and select Properties > Driver. If the Driver Provider is Microsoft rather than the card’s manufacturer, you are likely on a generic driver, so update it manually.

- In Linux, check driver details with `ethtool -i <interface>`; the driver line names the kernel module in use.

If the driver is a generic kernel driver, install the manufacturer’s official driver.

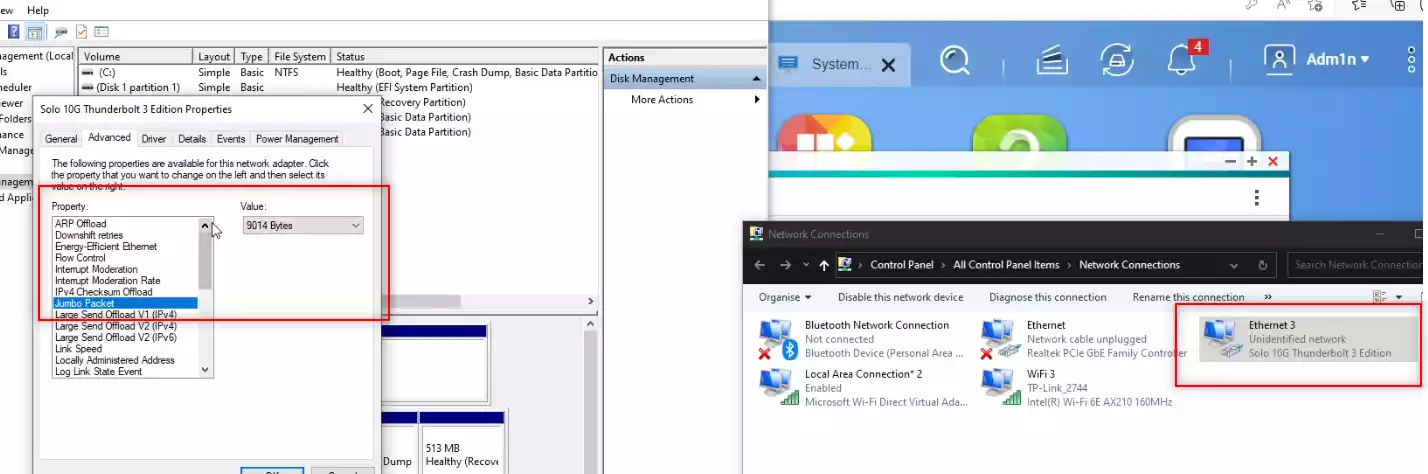

7. MTU (Jumbo Frames) is Not Set Correctly

The Problem:

By default, most network devices use a 1500-byte MTU (Maximum Transmission Unit). However, 10GbE networks can benefit from larger frames (typically 9000 bytes, known as Jumbo Frames), which carry the same data in fewer packets and reduce CPU load. If one device has Jumbo Frames enabled but another doesn’t, oversized frames get dropped or fragmented along the path, leading to lower speeds, stalled transfers, higher latency, and increased CPU usage.
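The gain from Jumbo Frames is easier to see with the per-frame arithmetic. The sketch below assumes standard IPv4 and TCP headers (20 bytes each, plus 12 bytes of TCP timestamp options) and the 38 bytes of Ethernet header, FCS, preamble, and inter-frame gap that every frame costs on the wire. It shows that raw throughput rises only modestly, while the number of frames the CPU must handle per second drops by roughly six times.

```python
# Bytes each frame costs on the wire beyond the MTU:
# 14 Ethernet header + 4 FCS + 8 preamble/SFD + 12 inter-frame gap.
ETHERNET_OVERHEAD = 14 + 4 + 8 + 12
IPV4_HEADER = 20
TCP_HEADER = 20  # without options; TCP timestamps typically add 12 more bytes


def tcp_efficiency(mtu: int, tcp_options: int = 0) -> float:
    """Fraction of wire time that carries TCP payload when every frame is full-size."""
    payload = mtu - IPV4_HEADER - TCP_HEADER - tcp_options
    return payload / (mtu + ETHERNET_OVERHEAD)


def max_goodput_gbps(mtu: int, link_gbps: float = 10.0, tcp_options: int = 0) -> float:
    """Upper bound on TCP payload throughput for a given MTU."""
    return link_gbps * tcp_efficiency(mtu, tcp_options)


def frames_per_second(mtu: int, link_gbps: float = 10.0) -> float:
    """Full-size frames per second needed to fill the link (a proxy for CPU/interrupt load)."""
    return link_gbps * 1e9 / 8 / (mtu + ETHERNET_OVERHEAD)


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(
            f"MTU {mtu}: ~{max_goodput_gbps(mtu, tcp_options=12):.2f} Gbps payload, "
            f"~{frames_per_second(mtu):,.0f} frames/s"
        )
```

This is also why iperf-style tests on a healthy 10GbE link with standard frames usually top out around 9.4Gbps rather than a full 10Gbps.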

The Fix:

- Enable Jumbo Frames (MTU 9000) on All Devices:

- Windows:

- Go to Control Panel > Network and Sharing Center > Change Adapter Settings.

- Right-click your 10GbE adapter, select Properties > Configure > Advanced.

- Set Jumbo Frame / MTU to 9000.

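- Linux/macOS: Run `sudo ip link set dev <interface> mtu 9000` on Linux or `sudo ifconfig <interface> mtu 9000` on macOS. These commands do not persist across reboots, so also set the MTU in your network configuration.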

- NAS:

- Synology: Go to Control Panel > Network > Interfaces > Edit and set MTU to 9000.

- QNAP: Go to Network & Virtual Switch > Interfaces > Jumbo Frames.


- Check MTU Settings on Your Switch:

- If your switch does not support MTU 9000, disable Jumbo Frames or upgrade the switch.

- Verify MTU Configuration:

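- Run a ping test with large packets and the Don’t Fragment flag set. A 9000-byte MTU leaves 8972 bytes of ICMP payload (9000 minus the 20-byte IP header and the 8-byte ICMP header):

- Windows: `ping -f -l 8972 <NAS IP>`

- Linux: `ping -M do -s 8972 <NAS IP>`

- macOS: `ping -D -s 8972 <NAS IP>`

If the ping fails or reports that the packet needs to be fragmented, MTU isn’t configured consistently end to end.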

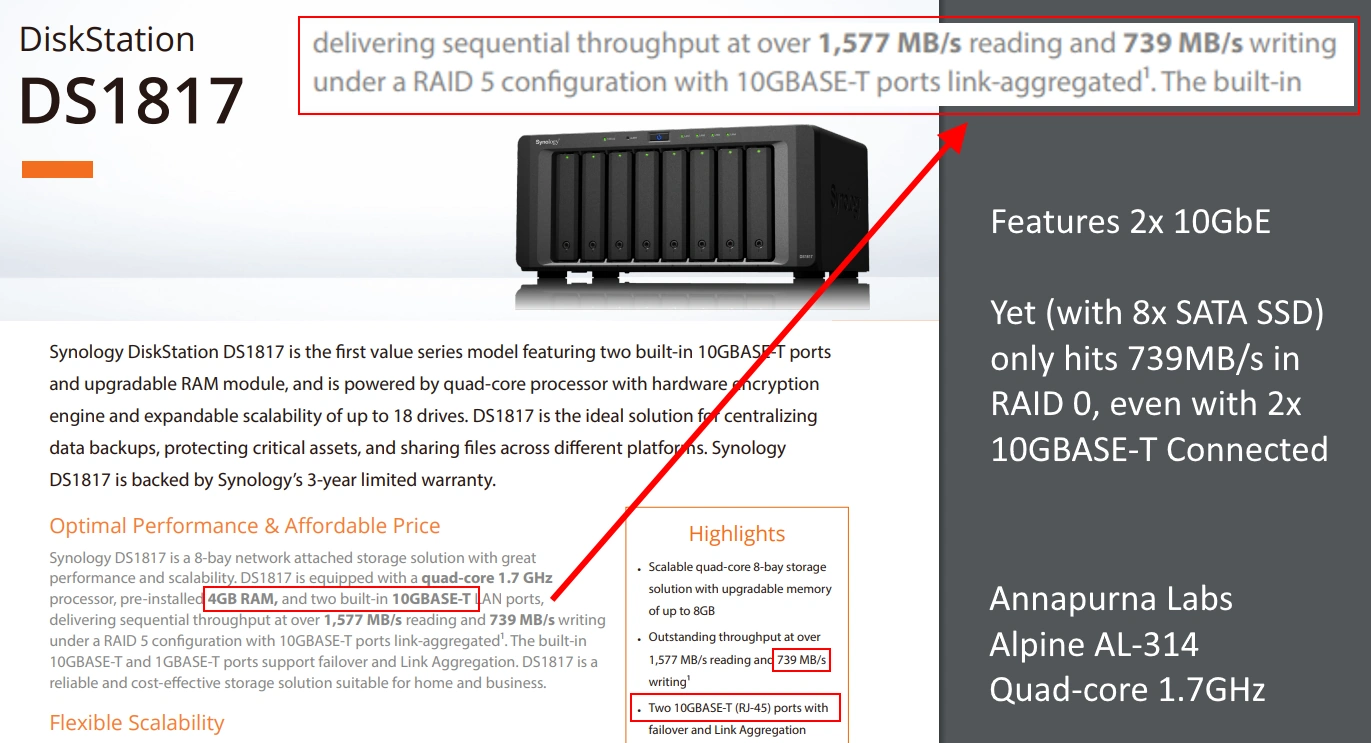

8. Your NAS or PC CPU is Too Weak to Handle 10GbE Traffic

The Problem:

Even if you have fast storage and a 10GbE adapter, a low-power CPU can bottleneck network performance. Many NAS devices use ARM-based or low-end Intel CPUs (e.g., Celeron, Atom, or N-series processors) that struggle to handle high-speed transfers, encryption, or multi-user traffic.

For example, some budget NAS units advertise 10GbE connectivity, but their CPU is too weak to push consistent 1GB/s speeds—especially if multiple users are accessing data simultaneously.

The Fix:

- Check NAS CPU specs:

- If your NAS has a quad-core ARM or low-end Intel CPU, it may not be capable of full 10GbE speeds.

- Monitor CPU Usage:

- Windows: Open Task Manager > Performance and check if the CPU is maxed out during transfers.

- Linux/macOS: Use `top` or `htop` and watch whether one or more cores sit near 100% during a transfer.

- Disable resource-heavy background tasks:

- Stop or schedule RAID scrubbing, snapshots, virus scans, and indexing during off-hours.

- Use an x86 NAS with a high-performance CPU:

- Intel Core i3/i5, Ryzen, or Xeon-based NAS units handle 10GbE much better than Celeron/ARM-based models.

9. VLAN, QoS, or Network Prioritization is Throttling Your 10GbE Traffic

The Problem:

If you’re using a managed switch or router, incorrect VLAN (Virtual LAN) or QoS (Quality of Service) settings may be limiting your 10GbE speeds. Some switches automatically assign lower priority to high-bandwidth devices, throttling performance.

The Fix:

- Check VLAN settings:

- If your 10GbE NAS or PC is in a VLAN with limited bandwidth, remove it from that VLAN or adjust the priority settings.

- Disable or Adjust QoS Settings:

- Log into your switch’s admin panel and look for QoS (Quality of Service) settings.

- If enabled, check if bandwidth limits are applied to your 10GbE ports.

- In some switches (e.g., Ubiquiti, Netgear, Cisco), set QoS priority for 10GbE devices to “High”.

- Run a Speed Test Without VLAN or QoS:

- Temporarily disable VLAN/QoS, then test file transfer speeds again.

If speeds improve, your VLAN/QoS settings were throttling your network.

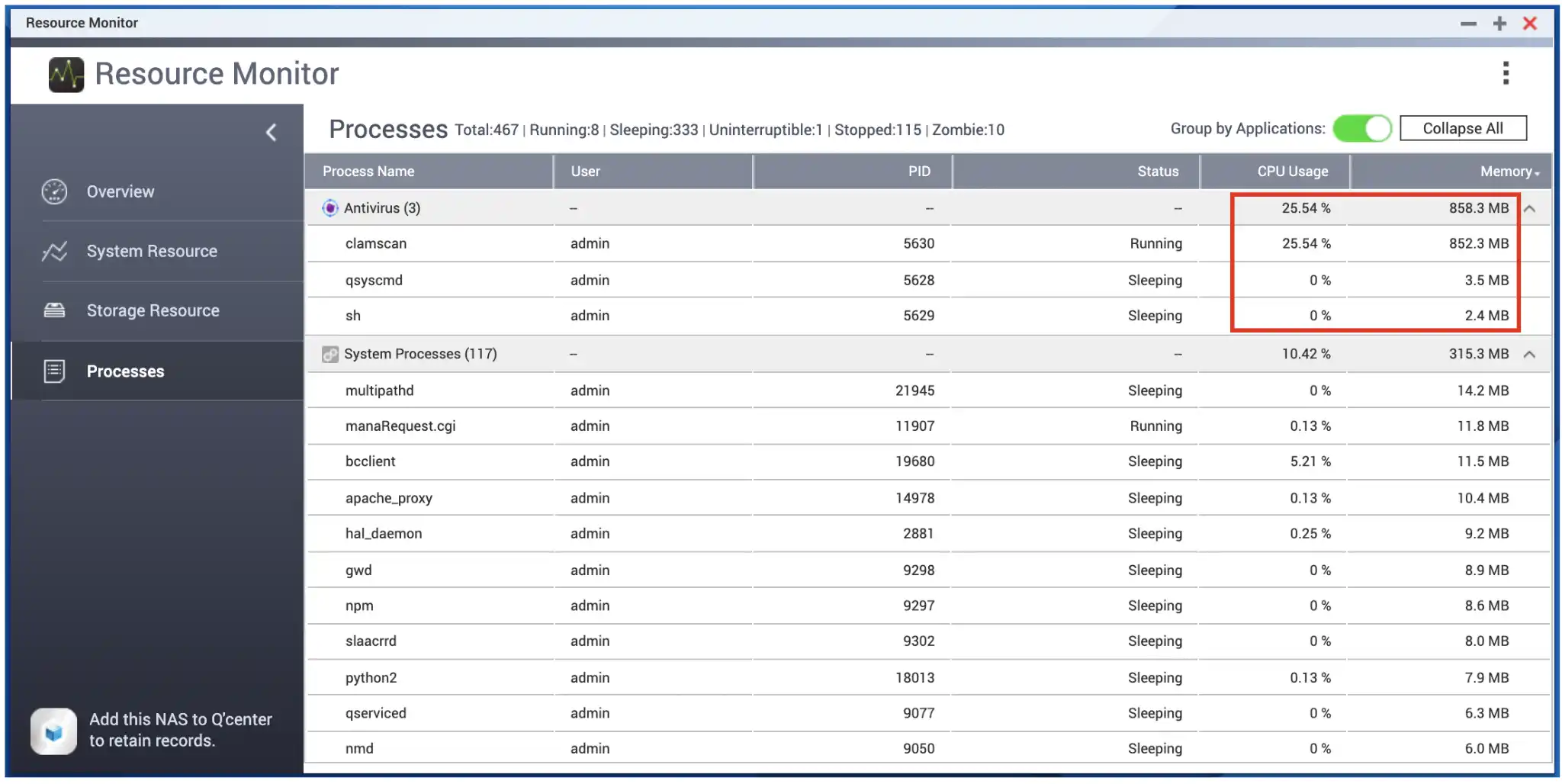

10. Background Processes or Other Network Devices Are Consuming Bandwidth

The Problem:

If you’re not getting full 10GbE speeds, it’s possible that another device is using the NAS at the same time. Even if your PC or NAS seems idle, background tasks like cloud syncing, automated backups, Plex transcoding, or surveillance camera recording can consume CPU, storage I/O, and network bandwidth.

The Fix:

- Check if other devices are using the NAS:

- Windows: Open Resource Monitor (resmon) > Network and check if any background processes are consuming bandwidth.

- Linux/macOS: Use `iftop` to watch per-connection traffic (or `nethogs` on Linux for per-process usage).

- On your NAS, check if:

- Plex or media servers are streaming.

- Security cameras are recording to the NAS.

- Backups/snapshots are running in the background.

- Pause Background Tasks:

- Temporarily disable cloud syncing, RAID scrubbing, and backups, then retest network speeds.

- Run an iPerf Network Speed Test:

- Windows/Linux (iperf3):

- On NAS: `iperf3 -s`

- On PC: `iperf3 -c <NAS IP>`

- If iPerf shows close to 10Gbps (around 9.4 Gbits/sec, or roughly 1.1-1.2GB/s) but file transfers don’t, then background processes or storage limitations are the issue.
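iperf3 reports results in Gbits/sec while file copy dialogs show MB/s, so it helps to convert before comparing. This is a small triage helper built on an assumed rule of thumb: anything at or above 90% of line rate counts as a healthy network. The 0.9 threshold is an assumption, not a standard value.

```python
def gbits_to_mb_s(gbits_per_s: float) -> float:
    """Convert an iperf3 result in Gbits/sec to MB/s (1 MB = 1,000,000 bytes)."""
    return gbits_per_s * 1000 / 8


def diagnose(iperf_gbits: float, copy_mb_s: float, threshold: float = 0.9) -> str:
    """Rough triage of a slow 10GbE transfer.

    threshold is an assumed "close enough" fraction, not a standard value.
    """
    if iperf_gbits < 10 * threshold:
        # The raw network path is slow: look at cables, NICs, switch ports, MTU.
        return "network"
    if copy_mb_s < gbits_to_mb_s(iperf_gbits) * threshold:
        # The network is healthy but real copies are not: storage or background load.
        return "storage or background load"
    return "ok"


if __name__ == "__main__":
    print(diagnose(9.4, 420))  # fast iperf3 result, slow file copy
    print(diagnose(3.1, 380))  # slow iperf3 result
```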

11. Your SFP+ Transceiver or Media Converter is Bottlenecking Performance

The Problem:

If you’re using SFP+ transceivers or fiber-to-RJ45 media converters, they might not be running at full 10GbE speeds. Many budget-friendly SFP+ modules are actually 1GbE-only or have compatibility issues with certain switches and NICs. Additionally, some fiber-to-copper converters (e.g., cheap third-party models) overheat quickly, leading to throttling and slow speeds.

The Fix:

- Check Your SFP+ Transceiver Rating:

- Run `ethtool <interface>` on a Linux-based NAS or switch.

- If the Speed line shows 1000Mb/s instead of 10000Mb/s, your SFP+ module is not running at full speed.

- Use Verified SFP+ Modules:

- Stick to brand-certified transceivers (e.g., Intel, Mellanox, Cisco, Ubiquiti, MikroTik).

- Generic eBay/Amazon SFP+ transceivers may not properly negotiate at 10GbE.

- Check for Overheating:

- Touch the transceiver—if it’s too hot to hold, it may be thermal throttling.

- Consider active cooling (small heatsinks or airflow near the module).

- Verify Media Converters:

- Some cheap SFP-to-RJ45 converters cap speeds at 5GbE or lower.

- Try swapping the converter for a direct 10GbE-capable SFP+ transceiver.

12. Your PCIe Slot is Throttling Your 10GbE NIC

The Problem:

Your 10GbE network card (NIC) might be plugged into a PCIe slot that doesn’t provide full bandwidth. Some motherboards limit secondary PCIe slots to x1 or x2 speeds, which reduces network performance significantly.

For example:

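- A PCIe 2.0 x1 slot only supports 500MB/s, far below 10GbE speeds.

- A single-port 10GbE NIC needs about 1250MB/s, which means at least PCIe 2.0 x4 or PCIe 3.0 x2. Dual-port cards need twice that, so a PCIe 3.0 x4 slot is the safe choice.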
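The same reasoning can be expressed as a quick slot check. A PCIe link trains to the lower generation and the narrower width of the card and the slot, and each 10GbE port needs about 1250MB/s in each direction. The sketch below uses theoretical PCIe figures and treats cards and slots as hypothetical (generation, lanes) pairs; for slots, use the electrical width, not the physical length.

```python
from typing import Tuple

# Per-lane transfer rate (GT/s) and line-encoding efficiency per PCIe generation.
GT_PER_S = {1: 2.5, 2: 5.0, 3: 8.0, 4: 16.0}
ENCODING = {1: 8 / 10, 2: 8 / 10, 3: 128 / 130, 4: 128 / 130}
TEN_GBE_PORT_MB_S = 1250


def link_mb_s(gen: int, lanes: int) -> float:
    """Theoretical one-direction PCIe bandwidth in MB/s."""
    return GT_PER_S[gen] * ENCODING[gen] / 8 * 1000 * lanes


def negotiated_link(card: Tuple[int, int], slot: Tuple[int, int]) -> Tuple[int, int]:
    """The link trains to the lower generation and the narrower width of card and slot."""
    return min(card[0], slot[0]), min(card[1], slot[1])


def slot_is_enough(card: Tuple[int, int], slot: Tuple[int, int], ports: int = 1) -> bool:
    """True if the negotiated link can carry every 10GbE port at line rate."""
    gen, lanes = negotiated_link(card, slot)
    return link_mb_s(gen, lanes) >= ports * TEN_GBE_PORT_MB_S


if __name__ == "__main__":
    # A hypothetical dual-port PCIe 2.0 x8 card in a Gen 3 slot wired as x4:
    print(negotiated_link((2, 8), (3, 4)), slot_is_enough((2, 8), (3, 4), ports=2))
```

The example shows why an older x8 card can lose half its bandwidth in an x4 slot: it falls back to PCIe 2.0 x4 (about 2000MB/s), which is enough for one 10GbE port but not two.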

The Fix:

- Check PCIe Slot Assignment:

- Windows: Use HWiNFO64 or Device Manager to check PCIe link speed.

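- Linux/macOS: On Linux, run `sudo lspci -vv` and check the LnkSta line for your NIC; on macOS, check the card’s Link Width in System Information > PCI.

If it reports x1 (or a narrower width than the card supports), your NIC is bottlenecked.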

- Move the 10GbE NIC to a Better Slot:

- Use a PCIe 3.0/4.0 x4 or x8 slot for full bandwidth.

- Avoid chipset-controlled PCIe slots, as they share bandwidth with SATA, USB, and other devices.

- Enable Full PCIe Speed in BIOS:

- Go to BIOS > Advanced Settings > PCIe Configuration.

- Set the slot to “Gen 3” or “Gen 4” (depending on your motherboard).

13. SMB or NFS Protocol Overhead is Slowing Transfers

The Problem:

If you’re transferring files over a mapped network drive (SMB/NFS), protocol overhead can reduce real-world speeds. Windows SMB, in particular, can limit large file transfers due to encryption, signing, or buffer settings.

The Fix:

- Enable SMB Multichannel for Faster Transfers (Windows):

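- Open PowerShell as Administrator and run `Set-SmbClientConfiguration -EnableMultiChannel $true`. Multichannel is already enabled by default on current Windows versions, so this mainly matters if it was turned off.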

- This allows multiple TCP connections for higher throughput.

- Disable SMB Signing (If Safe to Do So):

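- Windows: In an elevated PowerShell session, run `Set-SmbClientConfiguration -RequireSecuritySignature $false`.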

- Linux:

Add the following line to /etc/fstab when mounting an SMB share:

- Try NFS Instead of SMB (If Using Linux/macOS):

- SMB can be slow for large sequential transfers.

- NFS often performs better over a direct 10GbE link between NAS and PC.

- Use iSCSI for Direct Storage Access:

- If your NAS supports iSCSI, mount an iSCSI target for block-level access, which can be much faster than SMB/NFS.

14. Your Router or Network Switch is Blocking Full Speeds

The Problem:

Many consumer-grade routers and switches have built-in traffic management features that can throttle high-speed connections. Even some high-end managed switches may have bandwidth limits, VLAN misconfigurations, or QoS settings that restrict speeds.

The Fix:

- Disable Traffic Shaping or QoS:

- On a managed switch, log in and disable bandwidth limits on your 10GbE ports.

- On a router, look for:

- Smart QoS / Traffic Prioritization (disable it).

- Bandwidth Limiting (set to unlimited).

- Check VLAN Configuration:

- If your NAS and PC are in different VLANs, traffic might be routed through the main router, slowing speeds.

- Move both devices into the same VLAN for direct 10GbE connectivity.

- Ensure Your Switch Supports Full 10GbE Throughput:

- Some low-end 10GbE switches have an internal bandwidth cap.

- Example: A switch with five 10GbE ports but only a 20Gbps internal backplane will throttle performance under heavy load.
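To sanity-check a switch datasheet, compare its quoted switching capacity with what non-blocking operation requires: every port sending and receiving at line rate at the same time. Datasheets usually quote this as a full-duplex figure, so the sketch doubles the sum of port speeds; it assumes that convention, so check how your vendor defines the number.

```python
from typing import Sequence


def required_switching_gbps(port_speeds_gbps: Sequence[int]) -> int:
    """Capacity needed for every port to send and receive at line rate simultaneously.

    Doubled because switch datasheets usually quote full-duplex switching capacity.
    """
    return 2 * sum(port_speeds_gbps)


def is_non_blocking(port_speeds_gbps: Sequence[int], switching_capacity_gbps: int) -> bool:
    """True if the quoted capacity covers all ports at full duplex line rate."""
    return switching_capacity_gbps >= required_switching_gbps(port_speeds_gbps)


if __name__ == "__main__":
    five_10g = [10] * 5
    print(required_switching_gbps(five_10g), is_non_blocking(five_10g, 20))
    # A hypothetical mixed switch: eight 1GbE ports plus two 10GbE uplinks.
    print(required_switching_gbps([1] * 8 + [10] * 2))
```

For the five-port example above, non-blocking operation needs 100Gbps of switching capacity, so a 20Gbps internal limit will throttle traffic long before every port is busy.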

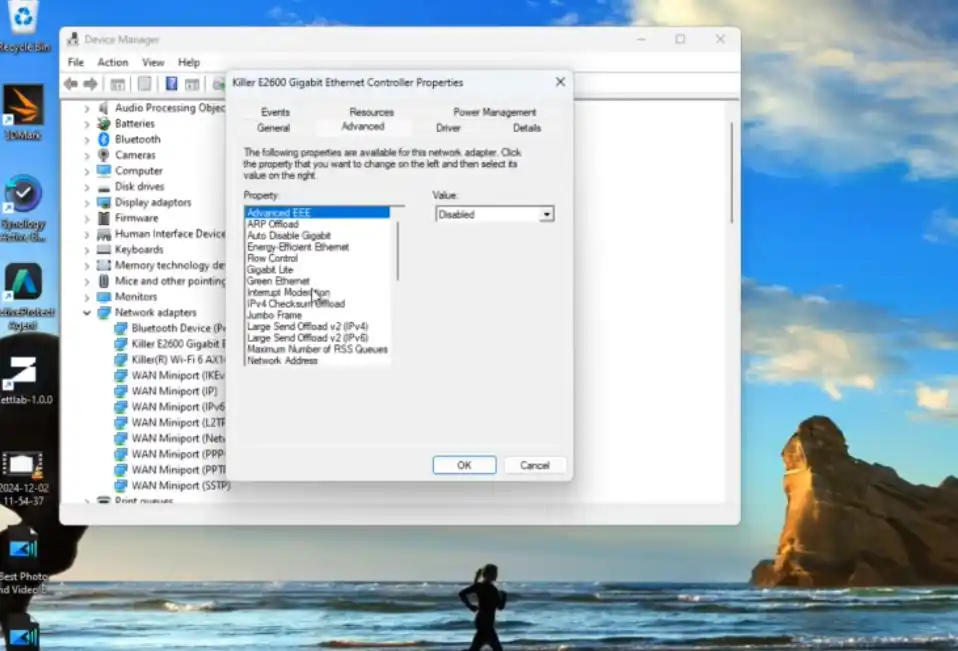

15. Windows Power Management is Throttling Your 10GbE Card

The Problem:

Windows Power Management settings may be automatically throttling your 10GbE network adapter to save energy. This can cause inconsistent speeds and unexpected slowdowns.

The Fix:

- Disable Energy-Efficient Ethernet (EEE):

- Open Device Manager → Expand Network Adapters → Right-click your 10GbE adapter → Properties.

- Under the Advanced tab, find “Energy-Efficient Ethernet” and set it to Disabled.

- Set Windows Power Plan to High Performance:

- Open Control Panel > Power Options.

- Select High Performance (or Ultimate Performance if available).

- Disable CPU Power Throttling:

- Open PowerShell as Administrator and run:

- This forces Windows to prioritize performance over power saving.

- Check for Interrupt Moderation & Adaptive Inter-Frame Spacing:

- In Device Manager, under the Advanced tab of your 10GbE adapter, disable:

- Interrupt Moderation

- Adaptive Inter-Frame Spacing


16. Your NAS or PC is Routing Traffic Through the Wrong Network (Subnet Mismatch)

The Problem:

Even if you have a direct 10GbE connection between your NAS and PC, your operating system might still route traffic through a slower network interface (e.g., a 1GbE connection or even Wi-Fi). This can happen if your system prioritizes the wrong network adapter, or if your NAS and PC are on different subnets, causing traffic to be routed through a slower router or switch instead of using the direct 10GbE link.

For example:

- Your NAS has two network interfaces:

- 10GbE: 192.168.2.10

- 1GbE: 192.168.1.10

- Your PC has two interfaces:

- 10GbE: 192.168.2.20

- Wi-Fi: 192.168.1.50

If your PC is trying to reach the NAS using the 1GbE or Wi-Fi address, it may bypass the 10GbE connection entirely, leading to slow speeds.
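A quick way to reason about which path traffic will take is to check which local subnet contains the NAS address you are connecting to. This sketch uses Python’s standard ipaddress module with the example addresses above; the /24 subnet masks are assumptions. If no local interface contains the target, the operating system sends the traffic to its default gateway instead.

```python
import ipaddress
from typing import Dict, Optional

# Hypothetical PC interface table based on the example above; the /24 masks are assumptions.
PC_INTERFACES: Dict[str, ipaddress.IPv4Interface] = {
    "10GbE": ipaddress.IPv4Interface("192.168.2.20/24"),
    "Wi-Fi": ipaddress.IPv4Interface("192.168.1.50/24"),
}


def directly_connected_via(
    target: str, interfaces: Dict[str, ipaddress.IPv4Interface]
) -> Optional[str]:
    """Return the local interface whose subnet contains target, or None if it goes via a gateway."""
    address = ipaddress.ip_address(target)
    for name, iface in interfaces.items():
        if address in iface.network:
            return name
    return None


if __name__ == "__main__":
    for nas_ip in ("192.168.2.10", "192.168.1.10"):
        print(nas_ip, "->", directly_connected_via(nas_ip, PC_INTERFACES))
```

Connecting to the NAS by its 192.168.2.10 address keeps traffic on the 10GbE link; using 192.168.1.10 (or a hostname that resolves to it) sends it over Wi-Fi.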

The Fix:

- Ensure Both Devices Are on the Same Subnet

- Assign both 10GbE interfaces an IP in the same range (e.g., 192.168.2.x).

- Set the 1GbE and Wi-Fi interfaces to a different subnet (e.g., 192.168.1.x).

- Manually Set the 10GbE Network as the Preferred Route

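- Windows (CMD – Run as Administrator): `netsh interface ipv4 set interface "<10GbE adapter name>" metric=10`

- Linux/macOS: On Linux, run `sudo ip route replace 192.168.2.0/24 dev <10GbE interface> metric 10`; on macOS, move the 10GbE service to the top of the network service order.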

- A lower metric prioritizes the 10GbE connection over slower networks.

- Check Active Routes to Ensure 10GbE is Being Used

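- Windows: `route print`

- Linux/macOS: `ip route get 192.168.2.10` (Linux) or `route get 192.168.2.10` (macOS) shows which interface will be used.

- Look for 192.168.2.x going through the 10GbE adapter. If another network is being used, adjust the routing table.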

17. Your SATA Controller is Bottlenecking Multiple Drives

The Problem:

Even if you have fast SSDs or multiple hard drives in RAID, the SATA controller inside your NAS or PC might be the bottleneck. Some budget NAS units and lower-end PC motherboards use cheap SATA controllers (e.g., JMicron, ASMedia, Marvell) that limit total disk throughput.

For example:

- Your NAS or PC has six SATA ports, but they are all routed through a single PCIe 2.0 x1 controller (which has a max bandwidth of 500MB/s).

- Even though each SSD is capable of 500MB/s, the total throughput is capped by the controller’s bandwidth.
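The controller cap is a simple min() over the drives behind each controller. The sketch below models the example above, plus the same six drives on an HBA in a PCIe 2.0 x8 slot (about 4000MB/s theoretical, the interface used by the LSI 9211-8i). The drive and uplink figures are assumptions for illustration.

```python
from typing import List, Tuple

# Each controller: (uplink bandwidth in MB/s, [per-drive sequential MB/s]).
Controller = Tuple[float, List[float]]


def total_throughput_mb_s(controllers: List[Controller]) -> float:
    """Sum over controllers of min(combined drive speed, controller uplink)."""
    return sum(min(uplink, sum(drives)) for uplink, drives in controllers)


if __name__ == "__main__":
    six_ssds = [500] * 6
    # All six SSDs behind one PCIe 2.0 x1 controller (~500 MB/s uplink).
    print(total_throughput_mb_s([(500, six_ssds)]))
    # The same drives on an HBA in a PCIe 2.0 x8 slot (~4000 MB/s uplink).
    print(total_throughput_mb_s([(4000, six_ssds)]))
```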

The Fix:

- Check the SATA Controller in Use:

- Windows (Device Manager): Expand Storage Controllers and check the SATA controller manufacturer.

- Linux/macOS: On Linux, run `lspci | grep -i sata`; on macOS, open System Information > SATA.

- If you see JMicron, ASMedia, or Marvell, you might have a bandwidth-limited controller.

- Use an HBA (Host Bus Adapter) Instead

- If your motherboard or NAS has limited SATA bandwidth, install a dedicated LSI/Broadcom HBA card (e.g., LSI 9211-8i, LSI 9300-8i) to get full-speed SATA connectivity.

- Check the SATA Backplane in NAS Enclosures

- Some NAS enclosures have a shared SATA controller for all drives, limiting total speed.

- If possible, upgrade to a NAS with multiple SATA controllers or use NVMe SSDs instead.

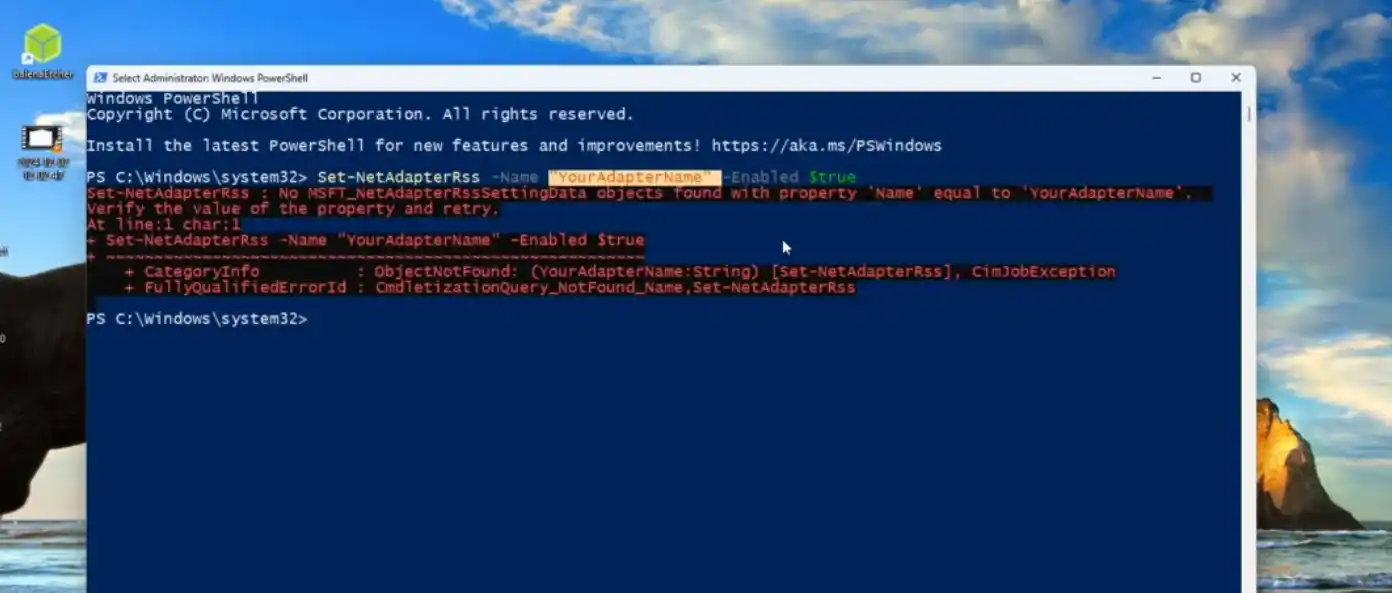

18. Your System’s TCP/IP Stack is Not Optimized for High-Speed Transfers

The Problem:

By default, most operating systems have conservative TCP settings that are optimized for 1GbE networks, but not for high-speed 10GbE connections. Without proper tuning, TCP window size, congestion control, and buffer settings can limit data transfer rates over high-bandwidth connections.

The Fix:

Windows: Optimize TCP Settings via PowerShell

- Enable TCP Window Auto-Tuning: `netsh int tcp set global autotuninglevel=normal`

- Enable Receive Side Scaling (RSS) to Use Multiple CPU Cores: `netsh int tcp set global rss=enabled`

- Increase TCP Receive Buffers:

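Linux: Increase TCP Buffers

Edit /etc/sysctl.conf and raise the TCP buffer limits (net.core.rmem_max, net.core.wmem_max, net.ipv4.tcp_rmem and net.ipv4.tcp_wmem).

Then apply the changes with `sudo sysctl -p`.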
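Buffer sizes follow from the bandwidth-delay product (BDP): to keep a link busy, a TCP flow needs at least bandwidth × round-trip time worth of data in flight, so send and receive buffers smaller than that cap throughput. The sketch computes the BDP and the ceiling a given window imposes on a single flow; the RTT values are assumptions, so measure your own with ping.

```python
def bdp_bytes(link_bits_per_s: int, rtt_us: int) -> int:
    """Bandwidth-delay product in bytes: how much data must be in flight to fill the link."""
    return link_bits_per_s * rtt_us // (8 * 1_000_000)


def window_limited_gbps(window_bytes: int, rtt_us: int) -> float:
    """Best case for a single TCP flow whose window (buffer) is window_bytes."""
    bits_per_us = window_bytes * 8 / rtt_us  # bits per microsecond == Mbit/s
    return bits_per_us / 1000  # Mbit/s -> Gbit/s


if __name__ == "__main__":
    TEN_GBPS = 10_000_000_000
    for rtt_us in (200, 1000):  # assumed LAN round-trip times
        print(f"RTT {rtt_us} us: need ~{bdp_bytes(TEN_GBPS, rtt_us):,} bytes in flight")
    print(f"A 256 KiB window at 500 us RTT caps one flow at ~{window_limited_gbps(262_144, 500):.1f} Gbps")
```

The maximum values in the sysctl settings should comfortably exceed the BDP for your link; a common heuristic is to allow a few times the BDP to absorb RTT spikes.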

19. Antivirus or Firewall Software is Interfering with Network Speeds

The Problem:

Many antivirus and firewall programs scan all incoming and outgoing network traffic, which can significantly slow down 10GbE speeds. Some intrusion prevention systems (IPS), such as those in Sophos, Norton, Bitdefender, and Windows Defender, can introduce latency and CPU overhead when processing large file transfers.

The Fix:

- Temporarily Disable Your Antivirus/Firewall and Run a File Transfer Test

- If speeds improve, your security software is causing the slowdown.

- Whitelist Your NAS or 10GbE Connection in Security Software

- Add your NAS IP address as an exclusion in your antivirus or firewall settings.

- Disable Real-Time Scanning for Large File Transfers

- In Windows Defender:

- Open Windows Security → Go to Virus & Threat Protection.

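- Under Manage settings > Exclusions, add the folder path of your NAS share (for example, its mapped drive letter). Defender exclusions cover files, folders, file types, and processes, not network adapters.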


- Check for Router-Level Security Features

- Some routers have Deep Packet Inspection (DPI) or Intrusion Prevention (IPS) enabled, which can slow down traffic.

- Log into your router’s admin panel and disable unnecessary security features for local transfers.

20. Your Network is Experiencing Microburst Congestion (Overloaded Buffers)

The Problem:

Some 10GbE switches have limited packet buffers, causing microburst congestion when multiple devices transfer data simultaneously. This results in random slowdowns, packet loss, and jitter, even if total traffic is well below 10GbE capacity.
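Microbursts are easiest to understand as a buffer race: when two 10GbE senders burst into one 10GbE port, traffic arrives 10Gbps faster than it can leave, and the port’s buffer only lasts so long before packets are dropped. The sketch computes that time; the 2MB buffer size is a hypothetical figure, so check your switch’s datasheet.

```python
from typing import Optional


def time_to_overflow_us(buffer_bytes: int, ingress_bps: int, egress_bps: int) -> Optional[int]:
    """How long (in microseconds) a port buffer lasts when traffic arrives faster than it can leave."""
    excess_bps = ingress_bps - egress_bps
    if excess_bps <= 0:
        return None  # the egress port keeps up, so the buffer never fills
    return buffer_bytes * 8 * 1_000_000 // excess_bps


if __name__ == "__main__":
    TEN_G = 10_000_000_000
    # Two hosts bursting at 10Gbps into one 10GbE port, with a hypothetical 2 MB buffer.
    print(time_to_overflow_us(2_000_000, 2 * TEN_G, TEN_G), "microseconds")
```

Even a generous buffer fills in under two milliseconds under this assumption, which is why drops can occur while average utilization still looks low.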

The Fix:

- Enable Flow Control on Your Switch

- Log into the switch’s admin panel.

- Enable 802.3x Flow Control on your 10GbE ports.

- Use a Higher-Quality Switch with Larger Buffers

- Some cheap 10GbE switches have small packet buffers, leading to congestion.

- Consider an enterprise-grade switch (e.g., Netgear XS716T, Cisco SG550X, Ubiquiti EdgeSwitch).

- Monitor Switch Traffic for Spikes

- Use iftop to watch for traffic spikes, or Wireshark to spot retransmissions and delays that point to packet loss.

- If needed, upgrade your switch to one with better buffering.

Recommended NAS Solutions Based on Data Storage Needs:

Budget NAS for a Family or Small Data Storage Solution – The Synology BeeStation 4TB

Synology has clearly done their homework on the development and presentation of the BeeStation private cloud. They are targeting a whole new audience with this system, and therefore, criticisms based on experiences with their other hardware are likely to fall on deaf ears. The BeeStation is probably one of the best middle grounds I have ever seen between an easy-to-use and exceptionally easy-to-set-up private cloud system, while still managing to provide smooth and seamless features for accessing and sharing your private cloud’s storage securely. Looking at this system with a more network-savvy microscope kind of defeats the point, and I’ve tried to be fair in my assessment. The lack of LAN access by default seems a little odd, and launching the BeeStation series in this single-bay, 4TB-only fashion may be a bit of a marketing misstep, but overall, what you’re seeing here is an effectively priced and scaled private cloud system. It’s a fantastic alternative to third-party clouds and existing simplified NAS systems. With many users keeping an eye on their budgets and tightening costs, Synology, known for its premium position in the market, had a challenge scaling down to this kind of user. However, I have to applaud Synology’s R&D for creating a simple and easy-to-use personal cloud solution that still carries a lot of their charm and great software reputation. It may not be as feature-rich as DSM, but BSM does exactly what it says it will do, and I think the target audience it’s designed for will enjoy the BeeStation a great deal!

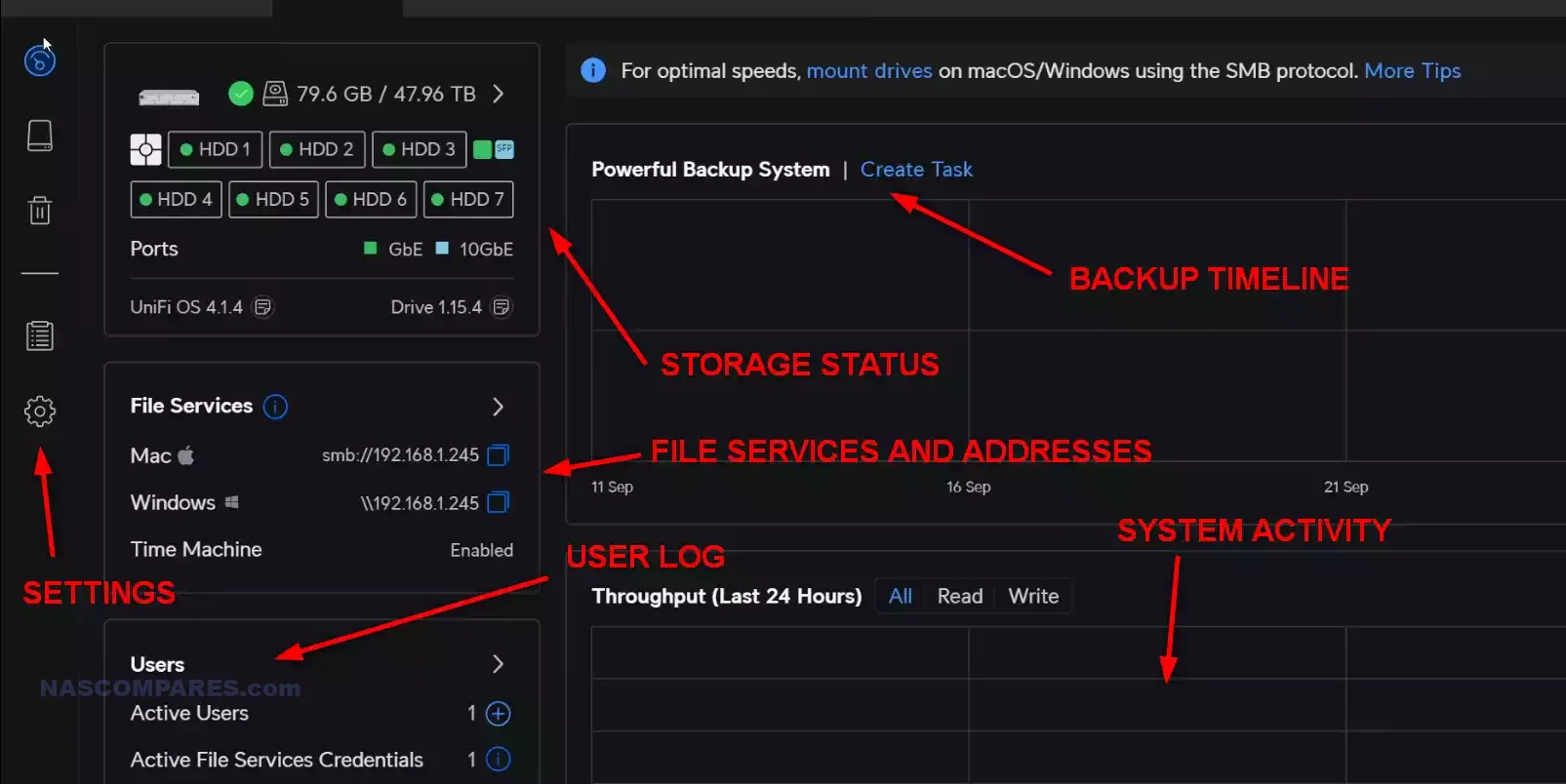

Best Value Business NAS – The UniFi UNAS Pro 10GbE Rackmount

I feel like a bit of a broken record in this review, and I keep repeating the same two words in conjunction with the UniFi UNAS Pro: fundamentals and consistency! It’s pretty clear that UniFi has prioritized the need for this system to perfectly complement their existing UniFi ecosystem and make it a true part of their hardware portfolio. This has led them to focus considerably on the fundamental storage requirements of a NAS system and to make sure that these are as good as they possibly can be out of the gate. To this end, I would say that UniFi has unquestionably succeeded. The cracks in the surface begin once you start comparing this system with other offerings in the market right now, which is inevitably what users are going to do and have been doing since the first indications of a UniFi NAS system were being rumored. It may seem tremendously unfair to compare the newly released UniFi NAS with solutions from vendors that have had more than 20 years of experience in this field, but for a business that wants to fully detach itself from the cloud and wants true user-friendly but highly featured control of its network operations, comparison is inevitable!

A solid, reliable, and stable system that will inevitably grow into a significant part of most UniFi users’ networks. The problem for many, however, is going to be how long it takes UniFi to reach the point where this system can compete with its rivals on software. If you are a die-hard UniFi ecosystem user and you are looking for stable, familiar, easy-to-use, and single-ecosystem personal/business storage, you are going to love everything about the UniFi UNAS Pro. But just be aware that this is a system that prioritizes storage and is seemingly at its best within an existing UniFi network architecture, and if removed from that network, you are going to find a system that at launch feels quite feature-light compared with alternatives in the market. Pricing for the system is surprisingly competitive, given its position as the launch NAS, which is unusual when you look at the pricing philosophy of numerous larger-scale systems like the UniFi Dream Machine and UNVR from the brand.

Hopefully, over time we are going to see UniFi build upon the solid fundamentals that they have designed here and create a more competitive solution on top of this. I have no doubt that UniFi will commit to software and security updates for this system, but it would be remiss of me to say that this is the best NAS solution for your network. Right now, it just happens to be the most user-friendly and most UniFi-ready one. Bottom line: this will probably tick a lot of boxes.

Best Value Content Creator NAS for Photo and Video EDITING – The Terramaster F4-424 Max

The TerraMaster F4-424 Max is a standout NAS system in TerraMaster’s lineup, offering impressive hardware specifications and solid performance at a price point of $899.99. For users who need high-speed data transfers, intensive compute power, and flexibility in storage configurations, the F4-424 Max is an excellent option. The combination of the Intel i5-1235U CPU, dual 10GbE ports, and PCIe Gen 4 NVMe support ensures that the NAS can handle even the most demanding tasks, whether it’s virtualization, media transcoding, or large-scale backups.

That said, when compared to the F4-424 Pro, which is priced at $699.99, the Max model offers significantly more networking power and potential for speed. However, the Pro model still provides fantastic performance for most home and small office users, making it a more budget-friendly alternative for those who don’t require 10GbE or advanced NVMe functionality.

In terms of software, Synology DSM and QNAP QTS are still more evolved, offering richer multimedia experiences and better integration for business applications. However, TOS 6 continues to improve with every iteration, closing the gap between TerraMaster and these larger players. With new features like TRAID, cloud sync, and improved snapshot management, TOS is becoming more user-friendly and robust. For users prioritizing performance, flexibility, and future-proofing, the F4-424 Max is a strong contender and offers excellent value for money. While there are areas where TerraMaster could improve, such as the lack of PCIe expansion and front-facing USB ports, the F4-424 Max delivers on its promise of high performance and scalable storage solutions.

Best NAS for Photo and Video EDITING – The QNAP TVS-h874 / TVS-h874T

In summarizing the capabilities and potential of the QNAP TVS-h874T NAS, released as a late 2023 update to its predecessor, it’s clear that this system represents a significant leap forward in desktop NAS technology. Priced over £2500, it’s a substantial investment, designed with future-proofing in mind. The TVS-h874T not only maintains the longevity and high-end status of the TVS-h874 but also brings to the table enhanced direct data access through Thunderbolt 4 integration. This advancement, supporting IP over Thunderbolt protocol, significantly boosts connectivity and speed, making the system an ideal choice for demanding tasks such as 8K video editing and high-performance computing needs in business environments. However, the question of whether Thunderbolt NAS is the right fit for all users remains. For those requiring high-speed, multi-user access and scalability, the TVS-h874T is a strong contender. Its support for the latest PCIe 4 standards ensures compatibility with high-performance upgrades, reinforcing its position as a future-proof investment. The software, featuring QTS and QuTS, might require some acclimatization, particularly for those familiar with simpler systems like Synology’s DSM. Yet, the benefits, especially for ZFS enthusiasts, are undeniable, offering advanced RAID management and a plethora of applications and services.

The TVS-h874T’s stance on open hardware and software compatibility is a significant plus in an industry increasingly leaning towards proprietary systems. It accommodates a range of third-party hardware and software, adding to its versatility. In the face of growing concerns over NAS security, the system is well-equipped with comprehensive tools and settings for enhanced security and data protection, addressing the pressing issue of ransomware attacks. In conclusion, the QNAP TVS-h874T stands out as an exceptional choice for businesses and power users who require a robust, scalable, and secure NAS solution. It offers an excellent balance of price, performance, and features, making it a worthy investment for those seeking top-tier server-side capabilities. However, for users with simpler storage needs or those not requiring the advanced features of Thunderbolt NAS, traditional Thunderbolt DAS devices might be a more suitable and cost-effective option. The TVS-h874T, with its advanced capabilities, is undoubtedly a powerhouse in NAS technology, but its full benefits will be best realized by those whose requirements align closely with what this advanced system has to offer.

Budget NAS for Multimedia / PLEX – The TerraMaster F4-424 Pro

What We Said in our review HERE: YouTube Review HERE

The TerraMaster F4-424 Pro NAS is a powerful 4-bay turnkey NAS system that offers competitive pricing and robust hardware. With its Intel Core i3-N300 CPU, 32GB DDR5 memory, and 2x M.2 NVMe SSD bays, it provides excellent performance for various tasks, including Plex media streaming and hardware transcoding. In terms of design, the F4-424 Pro features a sleek and modern chassis with improved cooling and hot-swapping capabilities. It represents a significant step forward in design compared to TerraMaster’s older 4-bay models, aligning more closely with industry leaders like Synology and QNAP. The addition of TOS 5 software brings significant improvements in GUI clarity, backup tools, storage configurations, and security features. However, the absence of 10GbE support, and the limited options for upgrading its networking, might disappoint advanced users. Additionally, although the hardware supports more memory than Intel officially specifies for this CPU, using the full 32GB of DDR5 relies on TerraMaster’s own SODIMM modules, which could be a limitation for some users. Overall, the TerraMaster F4-424 Pro NAS offers excellent value for its price, with competitive hardware and software features. It positions itself as a strong contender in the 4-bay NAS market, particularly for those looking for an affordable yet capable private server solution.

📧 SUBSCRIBE TO OUR NEWSLETTER 🔔

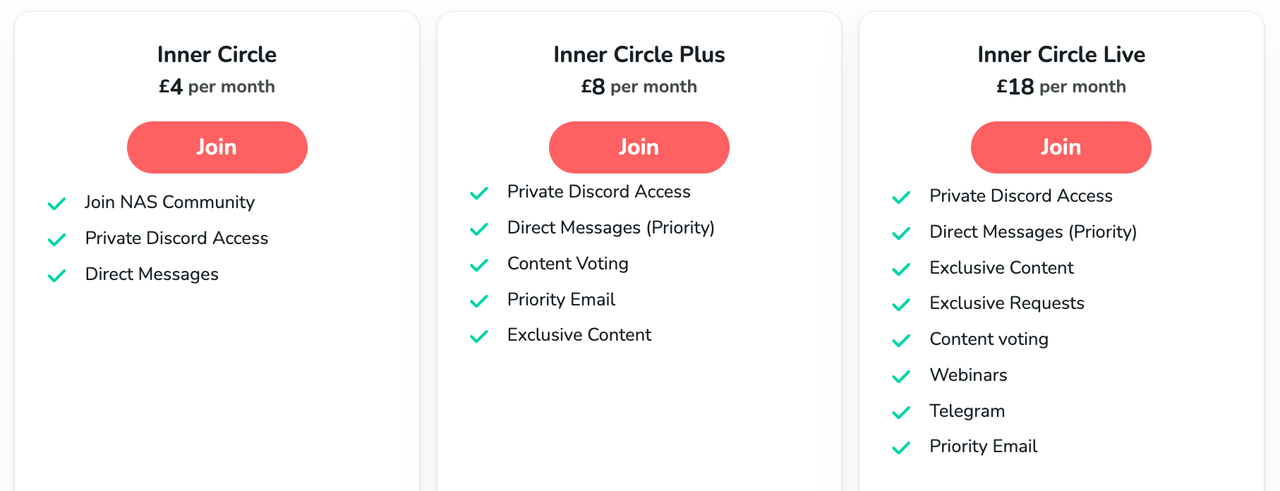

🔒 Join Inner Circle

Get an alert every time something gets added to this specific article!

This description contains links to Amazon. These links will take you to some of the products mentioned in today's content. As an Amazon Associate, I earn from qualifying purchases. Visit the NASCompares Deal Finder to find the best place to buy this device in your region, based on service, support and reputation.

Need Advice on Data Storage from an Expert?

Finally, for free advice about your setup, just leave a message in the comments below here at NASCompares.com and we will get back to you.

Where possible (and where appropriate), please provide as much information about your requirements as you can, so that I can arrange the best answer and solution for your needs. Do not worry about your e-mail address being required; it will NOT be used in a mailing list and will NOT be used in any way other than to respond to your enquiry.



Hello mate,

So I have 10G built into my motherboard, and I have a UGREEN NAS with a 10G port and a Cat8 cable directly attached, but I am still getting 300mb/s speeds. Not sure what’s wrong.

REPLY ON YOUTUBE

Could you not scroll and click everywhere hyperfast?

REPLY ON YOUTUBE

UniFi has built an awesome ecosystem at fair prices. They’ve made things fairly simple to use and very easy to adopt new equipment. I may eventually add one and repurpose my TrueNAS server.

REPLY ON YOUTUBE

HELP!!! Is there a way I can call you and get your help? I’ve literally spent about 2000 AUD trying to set up 10G to reach at least 1 gigabyte per second file transfers and be able to edit 4K footage, but my file transfers only reach 250mb/s.

My setup:

PC has a 10G PCIe network card installed

QNAP NAS has a 10G PCIe network card installed – QNAP 6-bay TS-x64

I have Netgear 10G TP-Link 8-Port 10G Desktop Unmanaged Switch, 10 Gbps Ports

I have 2x 4TB Crucial M.2 NVMe with read and write of up to 5000MB/s

My jumbo frames are set to 9000. I’ve tried running a direct NAS-to-PC connection and it’s still not reaching that speed.

I have all Cat6 cables

I’m not running RAID 0, just a standalone static drive.

HELP!!!! IM GOING CRAZY!!!

REPLY ON YOUTUBE

The worst NAS I ever owned. It’s slow and sluggish, and the transfer of a 1gb file can take up to an hour when I have a blazing fast modem and no network saturation, and the IronWolf Pro HDDs shouldn’t be a bottleneck. So don’t buy this BS; the guy in the video is paid to make the product look good, trust me.

REPLY ON YOUTUBE

Docker containers and VMs do not belong on storage.

REPLY ON YOUTUBE

Does everything… stores files, shares files, and books your dentist appointment. Brilliant.

REPLY ON YOUTUBE

RE: 7:30, it would be better to see dual PSUs, but Ubiquiti’s “Power Back” (USP-RPS) isn’t a “backup battery failover”; it is an external PSU. It is confusing, but that’s how they do their non-enterprise redundant PSUs.

REPLY ON YOUTUBE

ARM can be great, it’s just that the ones QNAP uses are cheap. Apple’s M series, for instance, does high-speed encryption pretty damn fast.

It’s just about having the instruction set hardware-accelerated or specifically optimised!

REPLY ON YOUTUBE

Could you suggest a NAS system for me? All I need to store is movies, TV shows and photos. I have a 14TB external drive and I do use Plex for my media. Any suggestions? I had the Synology 5-bay in mind, but I keep seeing UGREEN and now this TerraMaster F4-424 Max. I am a beginner and do not know much about NAS. I’m looking to get 4x 10TB drives in a NAS.

REPLY ON YOUTUBE

Is there no way to disable RAID at all? I only have 2 HDDs right now and kinda want to have access to both for storage.

REPLY ON YOUTUBE

Ever thought of a service (paid, obviously) where you remote into someone’s system to check on all this? In a lot of cases, we are not as tech-savvy as you.

REPLY ON YOUTUBE

You mentioned Intel processors are a better choice. What about the Synology DS923+ with AMD? Is the 923+ capable with the extra port added in the back?

REPLY ON YOUTUBE

Had mine for two weeks now and it’s a fine NAS, but it has a few bugs. It does desperately need an update. There are SMB issues: larger MP4 videos want to download instead of streaming over the local network.

REPLY ON YOUTUBE

When I was a kid (sort of), we routinely backed up one of our pizza sized 2MB disk packs to the other one on our personal computer of the day. That backup took an acceptable 2 minutes or so, after which we could get back to work defining the future.

REPLY ON YOUTUBE

I have a Synology DS1819+ with a Synology E10M20-T1 M.2 SSD & 10 GbE Combo Adapter installed. Does this adapter require any drivers, or are they embedded into DSM? I checked the download page for the E10M20-T1 adapter and the only downloads available are documentation. Depending on the type and size of the files I am uploading to my NAS, I see a range of 300 to 700 MB/s. Is that good?

REPLY ON YOUTUBE

So very informative, with a massive explanation of possible bottlenecks affecting data transfer speeds. Love the point in the video where, while streaming information at the camera, an interrupt is acknowledged and the result of “I hate seagulls” is multiplexed. Love the video. There is so much key information in the cake, let alone the icing.

REPLY ON YOUTUBE

Great video!

REPLY ON YOUTUBE

Practical read/write speed on a spinning HDD is more like 120 (5400 rpm) to 160 (7200 rpm) MiB/s. The 200+ numbers are for very idealized raw sequential data on the outer cylinders, without overhead such as a file system structure. Platter bit density also matters to some degree, because for a given RPM more bits pass by the head per second.

REPLY ON YOUTUBE

Windows has some interesting limitations if you are running WSL, Hyper-V etc. For example, a VM can get full 10GbE speeds but the host OS will see something around 7-8Gbps. There are various fixes that can be used once a Windows machine gets into this state (this is beyond the fixes mentioned in this video, which are also great things to check).

REPLY ON YOUTUBE

I don’t know if it was mentioned, but it’s really important to study how write speed drops with capacity on SSDs. This goes for all SSDs: NVMe, SATA etc. 80-90% of consumer SSDs have an issue with write speed dropping off severely as the drive gets fuller.

REPLY ON YOUTUBE

A single HDD will be 2-3x faster than a 1GbE connection, so a 10GbE connection is necessary to get the full speed out of even the smallest NAS.

However, the read and especially write speed really tapers off for HDDs when trying to scale it. Adding more drives in a RAID will increase speeds, but not linearly, and one should not expect to hit the 10GbE limit easily.

But SSDs are completely different. Even 3x SATA 2.5″ SSDs in RAID will easily saturate 10GbE, hitting 1.1GB/s on both read and write. And that’s before even talking about how much better SSDs are with many small files.

You can easily make a 10GbE-capable NAS by just putting in a SATA card or two and installing 2.5″ SATA disks. This can be easier and more flexible than trying to find ports for NVMe sticks.

REPLY ON YOUTUBE

Great video idea, this is a common issue. Also really nice improvement in video image quality! 🙂

REPLY ON YOUTUBE

You really seem to have a strong understanding of this, right up until calling that ‘fibre channel’, which shows me you probably shouldn’t make these videos.

REPLY ON YOUTUBE

I have a DS1621xs+ with 6x SSD drives in RAID5 plus a Ubiquiti USW-EnterpriseXG-24 switch and get 9500Mbit/s download, but upload never exceeded 3000Mbit/s. Why do you think that may be?

REPLY ON YOUTUBE

Well done with the new set, man! Much cleaner look!

REPLY ON YOUTUBE

More people should use Kraftwerk’s “Computer World” album for sound effects.

REPLY ON YOUTUBE

8:12 I have tried just about every brand available for SFP+ to RJ45 adapters in my network gear. Yes they do get hot like you said. lol.

There is only a single chipset available right now, at least as far as I am aware, that has any kind of decent heat output level. It is a much newer Broadcom chipset-based adapter, and you can get the model from places like 10GTek or FS, but they are quite expensive (double the price). Most of these adapters use 2.5-2.9W each and use the old Broadcom chip or Marvell chips (or Aquantia, which is now Marvell). The newest Broadcom models use 1.8W. There are very few ways of differentiating which chip is in the device, but the easiest thing to watch for is the power draw spec on the product page. If it says 1.8W, then it is the new Broadcom chip; if it doesn’t, then it is an old and hot chip. The other way to tell is that the newer chip also supports copper Ethernet up to 100m, while the older and hotter chips only support between 30m and 80m depending on the chip model.

The other thing to watch out for when using these adapters is that sometimes the switch you stick them in is incompatible to some extent. They will do 10Gb down, but only 2.5Gb up, despite being rated as 10/10. That means when doing a file transfer you are limited to 2.5Gb, because one side is going up and that uplink is being capped by the switch or transceiver/adapter due to the incompatibility. No settings you change can fix this, as it is a problem at the hardware level. I suspect that since Ethernet at these speeds uses all 4 wire pairs, and since 2.5G × 4 = 10G, the incompatibility is somehow sending the uplink side data on only a single pair of wires instead of all of them.

REPLY ON YOUTUBE

Good

REPLY ON YOUTUBE

I want to know why so many motherboards now have 5GbE and not 10GbE. I am not aware of a switch that is 5GbE, so unless there is a splitter to two 2.5GbE ports or you are connecting directly to another PC with 5GbE, I am not sure what the use case is.

REPLY ON YOUTUBE

Great review of the elements that need to be considered when looking at a high performance NAS.

REPLY ON YOUTUBE

My homemade Synology NAS is running 25G and transfers a little over 2000MB/sec read and write with four PCIe U.2 7.68TB drives. I don’t need much storage, just fast. Using a Ubiquiti USW-Pro-Aggregation switch (28 ports of 10G, 4 ports of 25G).

REPLY ON YOUTUBE

Hello Rob, 10GbE is not 1000 or even 1024 MB/sec but instead 1250 MB/sec. 8 bits equal 1 byte, so 10,000 / 8 is 1250 MB/sec.

As this is a 20% difference from what you explained, I am sorry, but I had to clarify that, my friend.

Greetings from Germany.

REPLY ON YOUTUBE
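The bit-to-byte arithmetic in the comment above is easy to check, and the same calculation shows why real transfers land below 1250 MB/s even on a perfect link. The Python sketch below is a minimal, hedged illustration; its framing figures are assumptions rather than anything stated in the video or comments: IPv4 and TCP headers with no options (40 bytes per packet) and 38 bytes of Ethernet framing per packet on the wire (header, FCS, preamble and inter-frame gap). It ignores ACKs, retransmissions, SMB overhead and storage speed, so it gives a best-case ceiling rather than a prediction.

```python
"""Best-case 10GbE throughput: bits vs bytes, and the cost of framing overhead."""

# Assumptions for this sketch (not taken from the article or the comment):
# - IPv4 + TCP headers with no options: 20 + 20 = 40 bytes per packet.
# - Ethernet wire overhead per frame: 14 (header) + 4 (FCS) + 8 (preamble/SFD)
#   + 12 (inter-frame gap) = 38 bytes beyond the IP packet (MTU).
# - No ACK traffic, retransmissions, SMB/NFS overhead or storage limits.

LINE_RATE_BITS = 10_000_000_000  # 10GbE MAC data rate in bits per second
ETH_WIRE_OVERHEAD = 38  # bytes per frame on the wire outside the IP packet
IP_TCP_HEADERS = 40  # bytes of IPv4 + TCP headers inside each packet


def raw_bytes_per_sec(bits_per_sec: int) -> float:
    """Convert a line rate in bits/s to bytes/s (8 bits per byte)."""
    return bits_per_sec / 8


def tcp_payload_bytes_per_sec(mtu: int, bits_per_sec: int = LINE_RATE_BITS) -> float:
    """Upper bound on TCP payload throughput for a given MTU."""
    payload = mtu - IP_TCP_HEADERS  # user data carried in each frame
    on_wire = mtu + ETH_WIRE_OVERHEAD  # bytes each frame occupies on the wire
    return raw_bytes_per_sec(bits_per_sec) * payload / on_wire


if __name__ == "__main__":
    raw = raw_bytes_per_sec(LINE_RATE_BITS)
    print(f"Raw line rate: {raw / 1e6:.0f} MB/s ({raw / 2**20:.0f} MiB/s)")
    for mtu in (1500, 9000):
        rate = tcp_payload_bytes_per_sec(mtu)
        print(f"MTU {mtu}: ~{rate / 1e6:.0f} MB/s ({rate / 2**20:.0f} MiB/s) of TCP payload")
```

Run as a script, it reports a raw rate of 1250 MB/s and best-case TCP payload rates of roughly 1187 MB/s at a 1500-byte MTU and 1239 MB/s at a 9000-byte MTU. The gap between those two figures is roughly what jumbo frames can recover in wire efficiency alone; results far below 1 GB/s, like several reported in these comments, usually point to storage, CPU, cabling or protocol limits rather than the link itself.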

Make a shirt of yourself saying “I hate seagulls” while doing a NAS review

REPLY ON YOUTUBE

All Hail Kraftwerk for their essential assistance on Robbie “The Robot”‘s video!

REPLY ON YOUTUBE

A lot of people forget to change the MTU/Jumbo Frames on the switch as well.

A lot of cheaper switches don’t even support MTU/Jumbo Frames.

REPLY ON YOUTUBE

The LAN wall socket has to be Cat6 also. When I upgraded my house to Cat6, I bought less expensive copper-coated aluminum, and it only ever got 768. Lots of information here to check out on my NAS, thank you very much.

REPLY ON YOUTUBE

Haven’t had any problems at all. I am not getting the full transfer speeds, as my TrueNAS pools aren’t able to reach higher speeds, but the link speed easily reaches 10GbE in iperf. And when loading the RAM cache, I often see 10GbE speeds.

I just bought 10GbE NICs, a cheap Zyxel 10GbE-capable switch, 15+ meters of Cat7 (which is quite cheap) on one hand, and just regular shorter Cat5e on the other end. And done. Decent quality Cat5e is perfectly capable of running 10GbE over shorter lengths.

REPLY ON YOUTUBE

If the cable length is more than 5m, then go for optical fibre to prevent issues. Even with Cat6 you are not problem-free.

REPLY ON YOUTUBE

Should your NAS be connected directly to your router or your switch for best performance? Or does it matter? I have seen some very vehement arguments for both.

REPLY ON YOUTUBE

I’m glad to see you mention cabling. And it’s not just what’s in the walls. I was doing some work on my network last year and found some very old pre-Cat5e patch cords. Yes, they were short, but those old, low-bandwidth things couldn’t have helped.

REPLY ON YOUTUBE

That’s why Unraid array users without a cache are best sticking with 2.5G.

Destruction write mode: max about 250MB/s with modern spinning rust

Or 60-80MB/s in normal write mode

Read: about the max speed of a single spinning drive, which is about 2.5G speed

It doesn’t stack up speed like ZFS RAIDZ or RAID 0/5/6

REPLY ON YOUTUBE

I’ll do you one better than that guy in the comments section. I measure my NAS speeds in Seagulls per Second.

REPLY ON YOUTUBE

Ignore my watch... too late, I was wondering what was going on with my Casio 🙂

REPLY ON YOUTUBE

Does anyone suspect UniFi will release a non-pro NAS?

REPLY ON YOUTUBE

Good summary from a Linux perspective.

Hi NASCompares! Quick question: how much RAM would I want to have in a media server NAS to be able to smoothly transcode large movies, say 60GB?

REPLY ON YOUTUBE

The NAS has a bunch of minor issues. It does not properly test disk drives inserted. I used an older but not failing one and this causes a ton of problems like random disconnects. Then with 2 brand new 8TB drives, copying thousands of small files (like 100GB worth) can take hours or even days! I let UniFi know about this days ago and so far have received just one canned response. It is NOT fast in my experience, even with all 10GbE connections. Just keeping it real, folks.

REPLY ON YOUTUBE

Best Review

REPLY ON YOUTUBE

Hi, can you advise please: if I connect a UNAS Pro to a UDM-Pro via 10G SFP+, and then from the UDM-Pro connect to my Windows PC using 10G SFP+, will I achieve speeds of roughly 500-850 MB/s (RAID 5 using 3x Seagate Exos X24 20TB drives)? Or do I need a 10G SFP+ switch?

REPLY ON YOUTUBE

This NAS vs. Synology???

REPLY ON YOUTUBE

Mine just arrived and I’m excited to get it up and running. Will use it to replicate from my Unraid box as well as use it to replace Nextcloud.

REPLY ON YOUTUBE

I’ve had the F4-424 Max now for 3 weeks and had nothing but problems. I should have known when TOS uses /Volume1 instead of /volume1. Not to mention no access to root. Returning it tomorrow for a Synology DS923+.

REPLY ON YOUTUBE

Why should I buy this NAS? Out of curiosity perhaps, but certainly not for practical reasons! Look at a QNAP, Synology or even a UGREEN NASync DXP4800 Plus. Maybe a 4-bay instead of a 7-bay, but much better hardware and software and therefore possibilities. If I bought one of these brands and only used the bare NAS features, I would still have a 20x better NAS that is not only more durable but also offers many times as much as a UniFi UNAS Pro. By the way, just leave the Pro out of it; marketing-wise it may sound nice, but it certainly is not. It is a big marketing launch from UniFi anyway. But an experienced NAS user really knows better.

REPLY ON YOUTUBE

wish this would come back in stock

REPLY ON YOUTUBE

I’ve also discovered the two ports are not for failover. You can only use one at a time. UniFi advised you can only use SFP+ or RJ45, not both at the same time.

REPLY ON YOUTUBE

I’ve been testing my UNAS Pro with four Seagate 18TB Exos drives in the “more protection” setting. I have uncovered a major issue with it being unable to download files completely to any iPhone via the Identity app. Regardless of Wi-Fi or LTE (5G or 4G mobile), if you use Safari, it failed to download every file in its entirety. It would download half an image or half a WAV, MP3 or MP4, but not the whole file. Despite multiple emails to UniFi and going through their escalation team, they were unable to identify the issue until the end, when I worked out it is an issue with Safari. Switching to Google Chrome as the browser on the iPhone, it works! I have advised UniFi and they say they will investigate and look at a possible update, as they realise now there is an issue with the driver and a bug in its ability to allow for sharing and downloading to the Files app on the iPhone via Safari. Thought I’d share for anyone else experiencing this issue. Would be keen to know if you have found this also.

REPLY ON YOUTUBE

FANTASTIC REVIEW, ROB!!!

Thanks a lot!

I am a long-time Ubiquiti user, both UniFi (SOHO/semi-pro use) and UISP (professional use), and although it lacks the bells and whistles that others can offer, as you say, this NAS is almost the ideal companion of a full UniFi network.

I’d like the WORM feature as you mentioned, and hopefully they will add it as soon as possible.

It is very interesting that an early firmware from Ubiquiti would be as complete and stable as this one at the moment of launch.

In my memory, this is almost the first time it happens…

Price-wise, it is a bargain!

I’ll purchase it, maybe the new revisions in mid 2025…

REPLY ON YOUTUBE

Can someone verify if the F4-424 Max will work with 3rd-party RAM for increasing memory? I am currently setting up my new F4-424 and want to increase the RAM to 32GB. I have read other models were locked to only use official TM RAM. That is EXPENSIVE, and of what they have in the TM store, none mention being compatible with the Max model. Thank you.

REPLY ON YOUTUBE

Was he using Thunderbolt 4 to another machine with a Thunderbolt connection? That should be able to run like direct PCIe. Am I missing something? Why so slow?

REPLY ON YOUTUBE

This is first gen. Give time and they will get up to speed.

REPLY ON YOUTUBE

Why does the software look identical to Google Drive?

REPLY ON YOUTUBE

Just bought one, spent several hours getting frustrated by it and sent it back. What a horrible experience the TerraMaster was. Just the setup experience alone destroyed my trust in the platform. Finding it on the network took quite a long time. I had to reboot it manually after it booted the first time. Eventually it appeared, and it is not clear what you actually do. It had an APIPA IP address. I clicked login and then it asked me to change the IP and enter the admin password. What it really meant was: wait 5-10 seconds and we will change the IP to a DHCP-obtained one. But I spent several rounds accepting and logging in with the generated IP. The first time you click login and type the password, it does not do anything. Nor does the login button above the interface, which actually makes it generate a new DHCP IP address. I had to right-click and only log in that way; it was just weird. It could not find the internet, so picking autosetup did not work. I manually set up the name, password etc., and the boxes kept turning red, indicating, I guess, that I had not entered valid data, with zero feedback in the GUI. It could not send a verification email during that setup. It has a code producer where you need to type in 4 digits that you could not see even on a large screen. I know I am rambling, but I just went through this and it was very Mickey Mouse. There just isn’t any feedback to anything you do; if it does not like your input, it just stares into a corner. Others suggested FreeNAS and that sounds like the way to go, but I decided a 423+ is just going to be better, as I wanted a pretty simple experience.

REPLY ON YOUTUBE

Non-starter for me without an SHR type option. Too bad.

REPLY ON YOUTUBE

The Thecus 7700 Pro was a seven-drive NAS.

REPLY ON YOUTUBE

The lack of a professional cloud backup with “time travel” and restore options is stopping me from using this device.

REPLY ON YOUTUBE

What a long way to say what a disappointment this is.

REPLY ON YOUTUBE

I just need a nas for file and picture storage

REPLY ON YOUTUBE

Great review! Thank you.

Just to clarify… I cannot have 2x 16TB disks in here without RAID, together with 4x 4TB disks in RAID10, right? Because the two 16TB ones would be consumed by the RAID as well (as 4TB disks).

Is that correct?

REPLY ON YOUTUBE

Unifi has a few great lines: wifi, switching, maybe power.

Then they have some weird stuff:

– Security cameras, where users can’t add cameras in the phone app unless they are given admin rights.

– Signage product that plays content on a TV. Nice idea, lousy software. Transitions between pictures are not suitable for public use.

I didn’t try access control yet.

I wonder how will the NAS turn out.

REPLY ON YOUTUBE

My other-brand NASes come with only a single PSU and 2 or 4 1GbE ports, and cost more, so this is still way better value.

REPLY ON YOUTUBE

Say you had an Android phone and wanted to transfer videos to your NAS.

Is the software robust enough to simply ‘send to NAS’ with a couple taps on your phone?

REPLY ON YOUTUBE

Not being able to stack these or expand to more bays is such a deal breaker 🙁

REPLY ON YOUTUBE

Fantastic in-depth review, man! I just got the Minisforum MS-01 to replace my DS918+ for server needs, so my Synology is just acting as storage now. This would be the perfect unit for me to upgrade to 10GbE to go with the rest of my UniFi stuff, except that it doesn’t have NFS yet :/ Quick question: when you say reactive storage, do you mean you can add drives to the pool without wiping and just increase pool size?

REPLY ON YOUTUBE

Thanks for the review!

If it had NFS, I’d be down. Without it, not so much. Possibly also a RADIUS server or something reliable for auth.

REPLY ON YOUTUBE

How does this unit handle drive expansion? Say I have all 7 bays filled and I need more storage…Can I swap a drive or two with higher capacities in place and it dynamically adjust the array to make that space available?

REPLY ON YOUTUBE

Good stuff thanks for making this video

REPLY ON YOUTUBE

Would be awesome if they released the nas SW as an app for the NVR lineup.

Can’t hurt to dream…

REPLY ON YOUTUBE

16 bay version will be here soon and it’s also already at a cheaper price than Synology. They do have stacking but I would assume that’s also possible

REPLY ON YOUTUBE

You give UI too much of a pass for being an ecosystem and as such they don’t have to play nice with others. You also mention the word “Enterprise” numerous times. So does UI whenever it can. These two add up to missing one big fundamental, as you put it, which is directory integration and I’d argue licensing bc it’s a huge part of the marketing. Everything “Enterprise” must have directory integration, that’s what pretty much the term really means. UI in its AzureAD/Keycloak/Okta/ADFS/etc knockoff — none of which ALSO requires specialized branded hardware BTW — put LDAP/AD integration behind a per-user per-month subscription, despite the fact that unlike the aforementioned, they aren’t providing any service at all, only the permission to connect your own hardware to your own systems, AKA: licensing.

This storage thingy is worthless if you need to keep separate accounts for it. It opens doors for so many problems. If you want to use the Enterprise moniker, you need to integrate or have a system so well thought out that you can cover any need, absolutely any need, even if it’s convoluted, perhaps egregious, like Cisco’s. This ecosystem thing is cute until it starts being a headache, the pretty dashboards are rarely useful day-to-day, and the push for a cloud dependency, the fact that your network devices tasked to guard your data are exfiltrating it from your network, and the fact that UI relentlessly pushed for mobile app-based management revocable at any point, leaving gear unmanageable (like UniFi Video did), are headaches waiting to happen.

REPLY ON YOUTUBE

It’s underpowered: given that it has a 10Gb interface, fully saturated with SSDs it would generate 3500 mbs, yet the controller will be only half the HDD capacity transfer rate.

REPLY ON YOUTUBE

I’m curious if the RAM it does have is even ECC. For me, ECC is a requirement for a NAS.

REPLY ON YOUTUBE

Hi there, thanks for the video.

I am looking to see the following:

1. iPhone and Android applications to back up the pictures from the phone to the UNAS.

2. Something similar to Google Docs to create office documents directly on the UNAS.

3. Back up one entire Windows computer to the UNAS, similar to Synology Active Backup for Business.

REPLY ON YOUTUBE

I will get one because I have a ton of UNIFI in my house (Personally, I would not use Ubiquiti at work. Not Ent enough for that). But what I love about Synology is all the apps you have access to, and the more significant benefit to me is upgradability. On my 1821+, I tossed in a 10G card, two 2TB NVME caches, and 32 GB RAM.

REPLY ON YOUTUBE

Thanks.. good review. I have converted all my networking to Unifi and am considering while building a new house whether I want to use Unifi cameras. If this could have replaced the UDM it would have been a no brainer, but as a satisfied UnRaid user of 20 years, the lack of some networking and apparent inability to mix/match drive sizes may make me just get a UDM and keep my existing UnRaid. Definitely something to keep my eyes on though.

REPLY ON YOUTUBE

I disagree that this is not an exciting product. I think it is exciting to see such an intuitive user interface, a focus on the basics, and very capable hardware for such an unheard-of low price. I don’t use the snazzy bits of my Synology NAS and would prefer the 10Gb/s connection and the intuitive interface of the UniFi NAS. UniFi don’t unnecessarily prescribe any UniFi-branded hardware and, unlike Synology, are yet to remove features that one paid for. Can’t wait to see RAID 6 support and perhaps a future model with support for media transcoding and a USB port.

REPLY ON YOUTUBE

For me at this time, that NAS is only for hosting backups of my data and the config of my Synology NAS, because I need to run all my services from a NAS. I actually run old websites on my NAS and run Docker on my main Synology. But Synology pissed me off when I saw deleted apps: the Web Station plugin was deleted from DSM 7.2 along with old PHP (updating the PHP code for those sites to the latest PHP is not cost-effective in terms of time or money), so I must stay on DSM 7.1. Deleting support for apps from the old DSM in the new version pisses me off, but I am interested in having an up-to-date NAS.

The UNAS is also too big for me, its energy consumption is too high, and 7 HDDs is not for me.

If a UNAS were based on the 4-HDD UNVR and had Web Station like on Synology and Docker support (to install a DNS server and other Docker apps).

As for UniFi apps, it would be good for me to use the UNAS as a UniFi backup target for automatic backups of Network, Protect or UniFi OS. If I see those things on UniFi devices like the UNAS, I will probably switch from Synology. I’m a homelabber with a UniFi network at home.

I think the UNAS Pro is for a big business or enterprise, where data access time or support for a lot of users accessing data on the drives matters; for home use it is too big and too bare on additional functions for its energy consumption.

REPLY ON YOUTUBE

no advertised hardware specs can fuck off

REPLY ON YOUTUBE

Ummm what is a ‘knicker’ ?

REPLY ON YOUTUBE

Have they changed the shipping date? I saw Oct 25th. Now it says Nov 4th!

REPLY ON YOUTUBE

With the limited amount of drives we need stacking

REPLY ON YOUTUBE

Does it come with any sync app for windows?

REPLY ON YOUTUBE

Can you run dual networks/gateways with the two NICs?

REPLY ON YOUTUBE

LOL I’m currently getting away from Unifi. Sick of their “ecosystem.” Sick of their crap. Having to use HTTPS and 2FA for my UDM that’s local.

REPLY ON YOUTUBE

The Mac of NAS. Ecosystem is Apple-like

REPLY ON YOUTUBE

I haven’t had a “modern” NAS, I have a bit of a basic question. Can I buy this NAS, throw in two 20TB drives in RAID5, and then expand and switch it over to RAID6?

REPLY ON YOUTUBE

It’s their NVR with different software… I think for MOST home users and even small businesses it is a great design!

REPLY ON YOUTUBE

Not really a fair comparison to compare to Synology, as Synology is not just a NAS; they have an app ecosystem too, whereas the UniFi NAS is just a NAS and no extra crap.

I think the hardware is identical to the UNVR Pro, except the UNAS has 8GB RAM. The price is identical to the UNVR, and I feel the price point is pretty good for a 10Gbps link and Directory Service integration. UI have advised they are adding RAID 6 in a firmware update, so they do listen to the users. I don’t think M.2 is really a big deal; you can get adaptors from M.2 to 2.5″, and 2.5″ SATA will max out a 10Gbps connection anyway. The single PSU is not an issue, as it supports the UniFi USP device as well when you want redundant power supplies. A redundant PSU would add cost, and this is NOT an enterprise version of the device.

I have seen several reviews of this unit and the speed tends to be consistent. Again, remember this is not an Enterprise version of the device. This is a Pro version, which is in between standard and enterprise. A lot of Synology devices at a similar price point only have 2x 1Gbps NICs.

I feel if this sells well, UI will likely release an Enterprise and standard version, based around the other UNVR form factors they have.

REPLY ON YOUTUBE

Is it true it doesn’t do iSCSI or NFS?

If so it should be called the “UniFi NAS Home”, or “UniFi NAS SMB”

I am fine with it not doing containers or VMs, but not doing NAS features such as iSCSI or NFS, and then calling it a “PRO” NAS, is ridiculous.

I am even fine with the price point, but the NAS PRO name without iSCSI or NFS is ridiculous

REPLY ON YOUTUBE

No USB ports for local backups, and also no UPS support due to the lack of a USB port. Major failure if you ask me…

REPLY ON YOUTUBE

This is just a simple and very basic NAS/filer, but it lacks « business » features, especially for the number of drives it holds:

* NVMe Cache

* Better and more capable processor

* More RAM!!

* Dual 10GbE RJ45/SFP+ for LACP & redundancy

* Dual PSU for power resilience

* AD Authentication integration

* FIPS 140-2 compliance for businesses that require it

* iSCSI or NFS support

Wouldn’t recommend this unit for professional use.

REPLY ON YOUTUBE

RAID 6 is a curious omission; with RAID 10 you have to rely on luck to survive more than one drive failure, especially here with an odd number of drives. So three options, basic protection (RAID 1), advanced protection (RAID 6) and performance (RAID 10), would have been ideal. Perhaps a Max version with RAID 6 and ZFS/NFS is in their future. Personally, I don’t see the point of adding Docker etc. when most will use another, more powerful and scalable server for virtualization (Proxmox etc.).

REPLY ON YOUTUBE

It is “cheap”, short depth, quiet, and Unifi. Those are the selling points. Everything else goes hard to the other guys by all appearances. Qnap has a few very short depth (13cm) NAS as well, but the price point speaks for itself.

REPLY ON YOUTUBE

But can it run Plex or Jellyfin?

REPLY ON YOUTUBE

Does it have SSD cache support?

REPLY ON YOUTUBE

Look, Unifi is a cult like Synology, BUT… a NAS that doesn’t have Docker, apps, rubbish… a NAS that actually prioritizes local network-attached storage? …Well, that is very interesting to me.

REPLY ON YOUTUBE

Huge question for using this at work: What is the Active Directory integration like? I saw the checkbox option being moused over many times, but it was never explored on the video. Can I manage access to shares based on group rights, and apply group rights to a share, or a folder within a share?

From a business perspective, the lack of a second PSU is definitely problematic… they really want to push their weird outboard PSU, I know, but that just doesn’t fly if you’re trying to play with the big boys. For small environments and homes, however, it seems pretty great… as long as what you want is STORAGE and not all the extra stuff Synology and the like have grown into becoming.

REPLY ON YOUTUBE

I would love this plus something like the Synology Drive software.

REPLY ON YOUTUBE

Could I put my backup copies of movies on this, run Plex from a computer, and access them from any TV?

REPLY ON YOUTUBE

Excellent review. Thank you. It would be really interesting to know what drive setup with RAID5 would be needed to saturate the 10GbE link for both reads and writes. Your numbers are not that great with the drives you used. Could faster hard drives do it? Would SATA SSDs do it, and how many would be needed? I am not buying another 10GbE NAS that can only use less than half of the network’s capacity. This unit in particular really needs 10GbE file transfer capabilities.

REPLY ON YOUTUBE

It would be nice if they allowed backups to AWS or Backblaze via their AWS API thing.

REPLY ON YOUTUBE

So no PLEX available?

REPLY ON YOUTUBE

Can I ask what the HDD temperatures are at full load with all 7 bays in use?

And the CPU is too weak; that power is only enough for 2 bays.

REPLY ON YOUTUBE

Can it present iSCSI LUNs at all?

REPLY ON YOUTUBE

How do you add Reolink cams to UniFi Protect?

REPLY ON YOUTUBE

A teeny tiny bit frustrated that I ordered a backplane mini-ITX case from AliExpress last month (and am still waiting on delivery) intending to build my homelab server/NAS for my Unifi stack then they announce this thing. Chances are it’ll be like the 2U PDU they make & stay out of stock for 10 months…

REPLY ON YOUTUBE

I might’ve missed it, but does it really not have NFS or iSCSI support?

REPLY ON YOUTUBE

A NAS that’s not trying to be a golden-goose, do-it-all home server where you run everything but the kitchen sink.

Take my money

REPLY ON YOUTUBE

Waiting for the NAS Pro Max, if it has Docker/VM support.

REPLY ON YOUTUBE

$499?! Holy crap. If this does RELIABLE storage, I couldn’t care less about QNAP and Synology bloatware!

REPLY ON YOUTUBE

GREAT video and I love that they have nailed the fundamentals. In your comparative videos, I suspect it’s going to be REALLY hard to find something in this price range that can even come close. (Find an off the shelf 7 bay NAS for $500?)

REPLY ON YOUTUBE

7:26 There is a secondary PSU. UI does it using the Unifi RPS… That is Unifi’s redundant power supply device. Also, if you are worried about noise, then RACK MOUNT HARDWARE ISN’T FOR YOU! Only a little over 7 minutes in and I already dislike this review.

REPLY ON YOUTUBE

Everybody is going nuts over the price but that’s by design. V2 will charge extra for redundant power supply and other status quo features.

REPLY ON YOUTUBE

Is there currently a feature that lets you back up PC clients, like Active Backup for Business on Synology?

REPLY ON YOUTUBE

♥♥

REPLY ON YOUTUBE

Wonder why this isn’t just available as an app for other Unifi devices with disk storage?

REPLY ON YOUTUBE

Can this replace Synology Drive?

REPLY ON YOUTUBE

But can it run Plex?…

REPLY ON YOUTUBE

Great comprehensive video. I absolutely love all things Ubiquiti, but I feel like I want to wait for another version or at least more apps. I have a Synology now and don’t even scratch the surface of the features available (including things like running Docker images), but one thing your video suggested is that, given the lack of use of file metadata, I would certainly be missing some app features, like the Synology Photos app at least and probably video as well, so I could look up pictures by person (facial recognition) or location (show me my Aruba vacation pictures).

I have wanted all things Ubiquiti on my network, and maybe I just need to wait for a few software revisions and maybe even a hardware revision or two. I feel like it may need more memory and CPU once, and IF, they start to add more app features.

Also, I wish they had included an M.2 drive slot, at least for caching.

REPLY ON YOUTUBE

What is your opinion regarding UniFi selecting Btrfs for their filesystem? I see many videos stating that Btrfs with RAID is not ready for production. This is a topic I would love for you to expand on in one of your future videos about the UniFi NAS.

REPLY ON YOUTUBE

Does it have Docker?

REPLY ON YOUTUBE

@NASCompares Can you test throughput with SATA SSD setup? Like Samsung EVO drives?

REPLY ON YOUTUBE

If the unit included NFS, multiple volumes and mixed hard drive sizes . . . that would be the end of Synology for me.

A luxury version with larger screen – summarising all the data at a glance would be a nice option.

REPLY ON YOUTUBE

I’m running a bare metal k8s cluster, so I really don’t need to be able to run docker containers on a nas. This is exactly what I wanted and at a great price. Ubiquiti nailed their first NAS outing

REPLY ON YOUTUBE

Great video, this is almost exactly what I have been looking for, just need the ability to make immutable backups (WORM) which it sounds like you are expecting from them in the near future. Will be looking forward to your future videos.

REPLY ON YOUTUBE

Wow, imagine releasing a “pro” NAS that is 100% SATA and not being able to have redundant 10Gb uplinks…. Ubiquiti has no idea what they are doing

REPLY ON YOUTUBE

When the UniFi Pro NAS dies, can you take the drives, plop them into a new UniFi Pro NAS and carry on, like with Synology NASes?

REPLY ON YOUTUBE

Will this run Plex so I can ditch my Synology?

REPLY ON YOUTUBE

10:01 you’re forgetting that this is unifi’s “pro” line not “pro max”. “Pro” really just means rack mount entry level SMB for unifi devices. I wouldn’t expect dual 10Gb on the regular “pro” model.

Now, a “pro max” NAS I would expect to have NVMe, more bays, dual 10Gb and a single 25Gb.

REPLY ON YOUTUBE

I would be interested to know how the hardware compares to the Protect UNVR Pro, but none of the reviews peek under the lid. Is it the same hardware but with more memory, or is there more to it?

REPLY ON YOUTUBE

Thanks!

REPLY ON YOUTUBE

No x86, no good…

REPLY ON YOUTUBE

So, can you designate drives? Maybe use 5 of them for raid and 2x as backup?

REPLY ON YOUTUBE

I’m thinking that if these do well, they will come out with ‘Max’ and ‘Enterprise’ editions.

I’ve been looking at getting a Synology or building my own TrueNAS system for home. Now that this has come out, I have more research to do to see whether it will work for my home and family’s needs.

REPLY ON YOUTUBE

Does it support NFS or iSCSI?

REPLY ON YOUTUBE

Having already invested in Unifi gear (including a Pro switch with a spare SFP+ port) and already having a fairly beefy Proxmox host in play to host applications this seems like a great option!

REPLY ON YOUTUBE

It’s pretty clear their goal for this device is having basic file storage for users of a home/small business that are going to connect to shared folders on mac and windows systems. What a NAS by definition really is… I see a lot of people complaining about lack of redundant network ports, NFS, iSCSI, etc. IMO, I think all those features they’re wanting are things needed when you are building enterprise infrastructure and Ubiquiti knows that’s really best handled by a proper SAN product from the likes of Dell, HP, IBM, NetApp, etc.

REPLY ON YOUTUBE

Can an existing UNVR-Pro be turned into a UNAS Pro? Is the hardware identical? Is the software the only difference?

REPLY ON YOUTUBE

How can someone spend nearly an hour reviewing a NAS and not address file systems?

REPLY ON YOUTUBE

Will this support SATA and SAS drives? Haven’t been able to find this anywhere.

REPLY ON YOUTUBE

11:30 On my Synology RS3614RPxs, those read and write speeds are what I was getting with five hard drives over an SFP+ DAC cable. When I switched to an LC OM4 fiber SFP+ cable, I was achieving 750MB/s, or about 6Gbps.

Not sure why I’m getting better numbers from the fiber cable than the copper cable. Both cables are rated for 10 gigabits per second.

REPLY ON YOUTUBE

Can hardly wait for the Enterprise NVR to be released as a NAS. WOW

REPLY ON YOUTUBE

How about power consumption? Did I miss that part?

REPLY ON YOUTUBE

Where is the non-pro version?

REPLY ON YOUTUBE

Wouldn’t piss on this crap if it was on fire

REPLY ON YOUTUBE

This is just a NAS, network-attached storage only. It’s not able to compete with Synology, QNAP, UGreen, etc. at this time. Is this NAS going to support Docker, Plex, etc.?

REPLY ON YOUTUBE

Is it possible to use 1-2 HDDs as NVR storage?

REPLY ON YOUTUBE

If this is “Pro,” I wonder about their name for the (maybe) upcoming, more powerful version of this. UNAS MegaUltraProMaxSE

REPLY ON YOUTUBE

I smell an Ultra Pro version with an NVMe cache slot, which will be announced in 3-6 months.

REPLY ON YOUTUBE

Have you actually logged in via SSH and poked around the system? I’m curious what filesystem its running on those drives; given the feature set, it sounds like they are using btrfs.

REPLY ON YOUTUBE

Does this have a media server capability or a way to link up to Plex server??

REPLY ON YOUTUBE

VMs are heavy on resources, so I can understand why they ain’t there, but missing Docker support is a no-go. I remain on TrueNAS for the time being.

REPLY ON YOUTUBE

What kind of RAID protection is it using? What happens to the data if the unit fails? Are you able to mix different HDD sizes like Synology SHR does?

REPLY ON YOUTUBE

Maybe I missed it, but is there any thermal test for this machine? The front design of those drive bays makes me suspect it might have thermal issues after some years of use.

REPLY ON YOUTUBE