Ceph is a powerful and scalable storage system designed to handle large volumes of data with high redundancy and performance. Integrating Ceph with NAS (Network-Attached Storage) devices can be a strategic choice, especially if you already have these devices in your infrastructure. This article will guide you through the essentials of setting up a Ceph cluster using NAS devices, covering the key considerations, configurations, and best practices.

FAQ

**1. Infrastructure and Hardware:**

- What are the hardware specifications of the NAS devices and servers?

- Ensure they have sufficient CPU, RAM, and network capabilities.

- What is the network configuration and bandwidth between NAS devices and Ceph nodes?

- Ensure high-speed, low-latency connections for optimal performance.

- How will you handle power and cooling requirements?

- Plan for adequate power supply and cooling to prevent hardware failures.

**2. Configuration and Deployment:**

- How many Ceph MONs (Monitors) do you need for high availability?

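- Typically, 3 or 5 MONs are recommended. An odd number keeps a majority (quorum) alive after failures: 3 MONs tolerate the loss of one, 5 MONs tolerate the loss of two.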

- How will you deploy and configure Ceph OSDs (Object Storage Daemons)?

- Plan how OSDs are spread across hosts so that copies of the same data land on different devices.

- What Ceph pool configuration will you use (e.g., replication, erasure coding)?

- Decide based on your performance and redundancy needs.

- What are your requirements for Ceph Manager (MGR) and other daemons?

- Ensure proper installation and configuration for management and monitoring.
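
The MON recommendation above comes down to simple majority arithmetic. Here is a minimal sketch of it; the function name `mon_quorum` is made up for illustration and is not part of Ceph.

```python
def mon_quorum(mon_count: int) -> tuple[int, int]:
    """Return (monitors needed for quorum, monitor failures tolerated)."""
    if mon_count < 1:
        raise ValueError("a cluster needs at least one monitor")
    needed = mon_count // 2 + 1      # strict majority of all monitors
    tolerated = mon_count - needed   # monitors that can fail while quorum holds
    return needed, tolerated


for count in (1, 2, 3, 4, 5):
    needed, tolerated = mon_quorum(count)
    print(f"{count} MONs: quorum needs {needed}, tolerates {tolerated} failure(s)")
```

Note that 4 MONs tolerate no more failures than 3, which is why odd counts are the usual advice.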

**3. Performance and Scalability:**

- How will you monitor and measure performance?

- Use tools like `ceph status` and `ceph osd df` to keep track of cluster health and per-OSD disk usage.

- How will you scale the cluster?

- Plan for adding more OSDs, MONs, or nodes as needed.

- What are your expectations for read/write performance, and how will you achieve them?

- Consider disk types (SSD vs. HDD), RAID configurations, and network performance.
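
If you want to automate the monitoring step, one option is to parse the JSON form of `ceph osd df` and flag OSDs that are filling up. The sketch below assumes the JSON contains a `nodes` list whose entries carry `name` and `utilization` (percent) fields; check this against the output of your own Ceph release before relying on it. The sample data is invented for the example.

```python
import json


def overfull_osds(osd_df: dict, threshold_pct: float = 85.0) -> list[str]:
    """Return names of OSDs whose utilization is at or above threshold_pct."""
    flagged = []
    for node in osd_df.get("nodes", []):
        # "utilization" is assumed to be a percentage (0-100)
        if node.get("utilization", 0.0) >= threshold_pct:
            flagged.append(node.get("name", f"osd.{node.get('id', '?')}"))
    return flagged


# Hypothetical sample shaped like the assumed JSON output; not real cluster data.
sample = json.loads("""
{"nodes": [
  {"id": 0, "name": "osd.0", "utilization": 41.2},
  {"id": 1, "name": "osd.1", "utilization": 88.7},
  {"id": 2, "name": "osd.2", "utilization": 63.0}
]}
""")
print(overfull_osds(sample))
```

On a live cluster, the dictionary would come from running `ceph osd df --format json` and passing the output through `json.loads`.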

**4. Data Protection and Redundancy:**

- What is your data redundancy strategy (e.g., replication factor, erasure coding)?

- Choose based on the criticality of data and available storage.

- How will you handle disk failures and data recovery?

- Plan for replacing failed disks and data rebalancing.
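
Planning for disk failures also means leaving free space for rebalancing: when an OSD fails, its data is re-created on the remaining OSDs. The rough estimate below assumes equally sized OSDs and evenly distributed data, which real clusters only approximate. The 0.85 warning level mirrors Ceph's commonly documented default nearfull ratio, but treat it as an assumption and confirm it against your own cluster settings.

```python
def utilization_after_failures(osd_count: int, utilization: float, failed: int = 1) -> float:
    """Estimate per-OSD utilization (0.0-1.0) once data from failed OSDs is rebalanced.

    Assumes equally sized OSDs and an even data distribution.
    """
    if not 0 <= failed < osd_count:
        raise ValueError("failed must be between 0 and osd_count - 1")
    return utilization * osd_count / (osd_count - failed)


NEARFULL = 0.85  # assumed warning threshold; verify for your cluster

for osds, used in ((6, 0.70), (3, 0.60)):
    after = utilization_after_failures(osds, used)
    note = "above" if after > NEARFULL else "within"
    print(f"{osds} OSDs at {used:.0%}: about {after:.0%} after one failure ({note} the nearfull level)")
```

With only three OSDs, a cluster that is 60% full would land at roughly 90% after a single failure, which is one reason small clusters need generous headroom.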

**5. Security and Access Control:**

- How will you secure data in transit and at rest?

- Implement encryption and access controls.

- What are your authentication and authorization requirements?

- Use Ceph’s built-in security features and configure access controls appropriately.

**6. Backup and Disaster Recovery:**

- What is your backup strategy for Ceph and the data it stores?

- Plan for regular backups and ensure they are stored securely.

- How will you recover from disasters or significant failures?

- Develop and test disaster recovery procedures.

**7. Maintenance and Updates:**

- What is your plan for routine maintenance and updates?

- Regularly update Ceph and associated components to keep the system secure and performant.

- How will you handle hardware or software failures?

- Establish procedures for troubleshooting and repair.

**8. Documentation and Training:**

- Do you have comprehensive documentation for your Ceph deployment?

- Document configurations, procedures, and troubleshooting steps.

- Is your team trained on Ceph and its management?

- Ensure that staff are familiar with Ceph operations and maintenance.

**9. Cost and Budget:**

- What are the costs associated with deploying and maintaining Ceph?

- Consider hardware, software, and operational costs.

- How will you budget for future expansions or upgrades?

- Plan for scaling and additional resources as needed.

**10. Vendor Support and Community Resources:**

- What support options are available for Ceph?

- Consider vendor support if using commercial distributions or rely on community resources.

- Are there community resources or forums you can use for troubleshooting and advice?

- Utilize community support for additional guidance and best practices.

- Compare Ceph versus RAID storage space:

- RAID: Usable storage depends on the RAID level (e.g., RAID 5 uses (N-1) disks for data).

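- Ceph: Usable storage depends on replication or erasure coding settings. With 3-way replication, usable space is about one third of raw capacity; erasure coding with k data chunks and m coding chunks yields k/(k+m) of raw capacity. Replication in particular carries more redundancy overhead than RAID 5.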

- Compare performance (MB/s and IOPS):

- RAID: Performance varies by RAID level and hardware. Generally good for high throughput.

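- Ceph: Performance is influenced by cluster configuration, network, and number of OSDs. It is typically lower than RAID for a single operation, because each write crosses the network and is stored on several OSDs, but aggregate throughput scales well as OSDs and nodes are added.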

- Possible bottlenecks in Ceph setup:

- Network: Latency and bandwidth issues can affect performance.

- OSDs: Disk speed and configuration can be a limiting factor.

- MONs: With too few MONs, losing a single monitor can break quorum and stall the cluster.

- Explain Ceph to a non-IT person:

- Ceph is like a smart file cabinet that spreads your files across multiple drawers (servers) to keep them safe and accessible. It can automatically fix things if a drawer breaks or gets lost.

- RAID on physical NAS systems:

- Not necessary: If NAS disks are exposed in JBOD mode, Ceph handles redundancy and data management.

- Installing Ceph on NAS Devices:

- Yes: Install MONs on VMs within each NAS. Map NAS disks to OSDs on other VMs or servers.

- Additional questions to consider:

- Consider hardware specs, network setup, performance expectations, redundancy strategy, and backup plans.

- Minimum NAS count for Ceph cluster:

- 3 NAS devices: Minimum to run MONs on each and provide redundancy for OSDs.
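
To put numbers on the RAID versus Ceph storage space comparison above, here is a small sketch. The function names are made up for illustration, and the figures ignore filesystem overhead and the free space Ceph needs for rebalancing. The 12 x 4 TB disk set and the 4+2 erasure coding profile are example values only.

```python
def raid5_usable_tb(disks: int, disk_tb: float) -> float:
    """RAID 5 keeps one disk's worth of parity: (N - 1) disks hold data."""
    if disks < 3:
        raise ValueError("RAID 5 needs at least 3 disks")
    return (disks - 1) * disk_tb


def ceph_replicated_usable_tb(raw_tb: float, replicas: int = 3) -> float:
    """Replication stores every object `replicas` times."""
    return raw_tb / replicas


def ceph_ec_usable_tb(raw_tb: float, k: int, m: int) -> float:
    """Erasure coding stores k data chunks plus m coding chunks per object."""
    return raw_tb * k / (k + m)


disks, disk_tb = 12, 4.0  # example values, not a recommendation
raw = disks * disk_tb
print(f"Raw capacity:          {raw:.1f} TB")
print(f"RAID 5 (single array): {raid5_usable_tb(disks, disk_tb):.1f} TB")
print(f"Ceph, 3 replicas:      {ceph_replicated_usable_tb(raw):.1f} TB")
print(f"Ceph, EC 4+2:          {ceph_ec_usable_tb(raw, k=4, m=2):.1f} TB")
```

The trade-off is that RAID 5 survives one disk failure inside one box, while the Ceph layouts spread their redundancy across devices.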


If you like this service, please consider supporting us.

We use affiliate links on the blog allowing NAScompares information and advice service to be free of charge to you. Anything you purchase on the day you click on our links will generate a small commission which is used to run the website. Here is a link for Amazon and B&H. You can also get me a ☕ Ko-fi or old school Paypal. Thanks! To find out more about how to support this advice service check HERE

We use affiliate links on the blog allowing NAScompares information and advice service to be free of charge to you. Anything you purchase on the day you click on our links will generate a small commission which is used to run the website. Here is a link for Amazon and B&H. You can also get me a ☕ Ko-fi or old school Paypal. Thanks! To find out more about how to support this advice service check HERE

Private 🔒 Inner Circle content in last few days :

UnifyDrive UP6 Mobile NAS Review

UniFi Travel Router Tests - Aeroplane Sharing, WiFi Portals, Power Draw, Heat and More

UGREEN iDX6011 Pro NAS Review

Beelink ME PRO NAS Review

UGREEN iDX6011 Pro - TESTING THE AI (What Can it ACTUALLY Do?)

OWC TB5 2x 10GbE Dock, UGREEN NAS Surveillance Software, AceMagic Retro PCs, Gl.iNet Comet 5G @CES

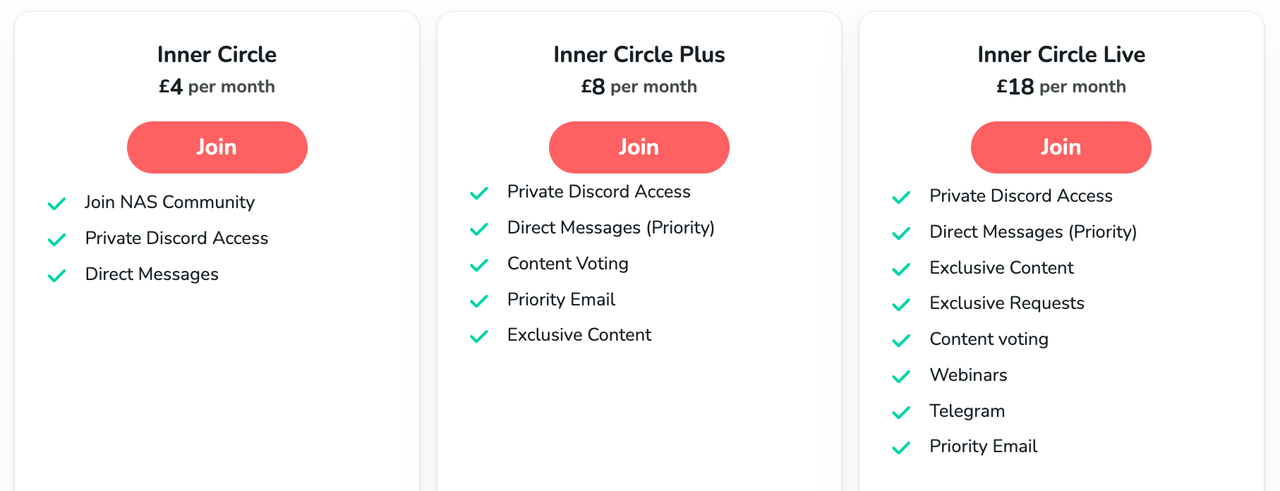

Access content via Patreon or KO-FI

UnifyDrive UP6 Mobile NAS Review

UniFi Travel Router Tests - Aeroplane Sharing, WiFi Portals, Power Draw, Heat and More

UGREEN iDX6011 Pro NAS Review

Beelink ME PRO NAS Review

UGREEN iDX6011 Pro - TESTING THE AI (What Can it ACTUALLY Do?)

OWC TB5 2x 10GbE Dock, UGREEN NAS Surveillance Software, AceMagic Retro PCs, Gl.iNet Comet 5G @CES

Access content via Patreon or KO-FI

Discover more from NAS Compares

Subscribe to get the latest posts sent to your email.

DISCUSS with others your opinion about this subject.

ASK questions to NAS community

SHARE more details what you have found on this subject

IMPROVE this niche ecosystem, let us know what to change/fix on this site